Privacy-Preserving Training-as-a-Service (PTaaS): A Novel Service Computing Paradigm that Provides Privacy-Friendly and Customized Machine Learning Model Training for End Devices

On-device intelligence (ODI) is an emerging technology that combines mobile computing and AI, enabling real-time, customized services without network reliance. ODI holds promise in the Internet of Everything era for applications like medical diagnosis and AI-enhanced motion tracking. Despite ODI’s potential, challenges arise from decentralized user data and privacy concerns.

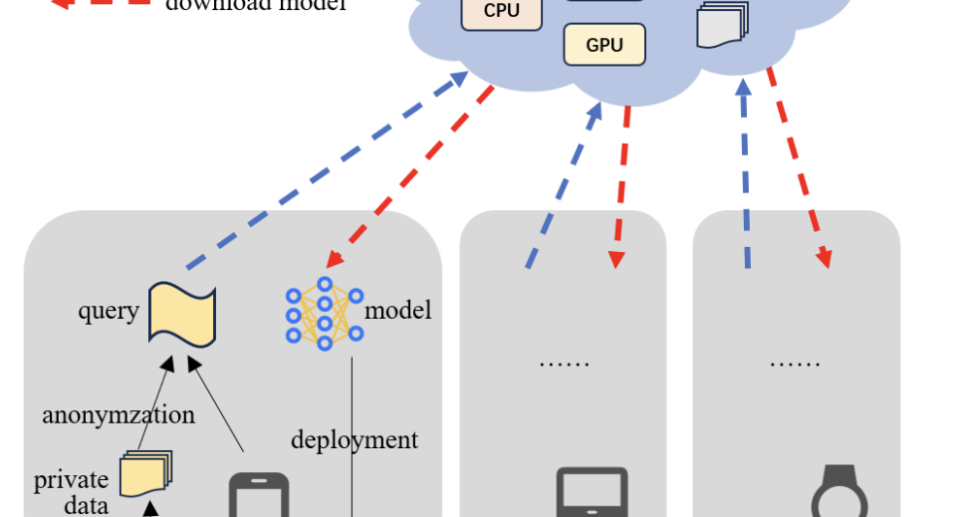

Several training paradigms have been proposed to reconcile AI training demands with device limitations. Cloud-based paradigms upload data for centralized training, which raises privacy concerns because devices share raw data with the cloud. Federated learning (FL) enables collaborative model training without data leaving devices, yet it struggles with intermittent connectivity. Transfer learning (TL) trains base models in the cloud and fine-tunes them on devices, but the fine-tuning step demands substantial device resources. FL and TL therefore protect privacy and can deliver good model performance, but at the cost of connectivity and computation efficiency. None of these existing paradigms balances privacy, performance, and device constraints well.

The researchers from IEEE introduce Privacy-Preserving Training-as-a-Service (PTaaS), a robust paradigm offering privacy-friendly AI model training for end devices. PTaaS delegates core training to remote servers, generating customized on-device models from anonymous queries to uphold data privacy and alleviate device computation burden. The researchers delve into PTaaS’s definition, objectives, design principles, and supporting technologies. An architectural scheme is outlined, accompanied by unresolved challenges, paving the way for future PTaaS research.
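To make the idea of an anonymous query concrete, here is a minimal sketch in Python. It is not the format defined in the paper: the `AnonymousQuery` class, its fields, and the `build_query` helper are all hypothetical, and the sketch simply assumes that a device describes its needs with coarse, rounded statistics instead of raw samples. Rounding alone is not a formal privacy guarantee.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class AnonymousQuery:
    """Hypothetical query: coarse statistics only, no raw samples or device ID."""
    task: str
    label_share: dict      # rounded class proportions of the local data
    max_model_mb: int      # size budget for the returned on-device model


def build_query(task, local_labels, max_model_mb, precision=1):
    """Summarize local labels into rounded proportions (assumed query format)."""
    if not local_labels:
        raise ValueError("local_labels must not be empty")
    counts = Counter(local_labels)
    total = sum(counts.values())
    share = {
        label: round(n / total, precision)
        for label, n in sorted(counts.items())
    }
    return AnonymousQuery(task=task, label_share=share, max_model_mb=max_model_mb)


if __name__ == "__main__":
    labels = ["walk"] * 6 + ["run"] * 3 + ["sit"] * 1
    print(build_query("activity-recognition", labels, max_model_mb=20))
```

The server in this sketch would receive only the task name, the rounded label proportions, and the model size budget, which is the kind of device-specific information it could use to customize a model.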

The PTaaS hierarchy comprises five layers: infrastructure, data, algorithm, service, and application. Infrastructure provides physical resources, while the data layer manages remote data. The algorithm layer implements training algorithms, integrating transfer learning. The service layer offers an API and manages tasks, while the application layer serves as the user interface, facilitating model training queries and real-time monitoring. This hierarchical structure enables standardized design, independent evolution, and adaptation to technologies and user needs for PTaaS platforms.
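The layering described above can be sketched as five small Python classes, each talking only to the layer beneath it. This is an illustrative toy under stated assumptions, not the architecture from the paper: every class and method name is hypothetical, "training" is a stub that only counts samples, and the infrastructure is reduced to a counter of free GPU slots.

```python
from dataclasses import dataclass, field
from itertools import count


class InfrastructureLayer:
    """Physical resources, modeled here as a pool of hypothetical GPU slots."""
    def __init__(self, gpu_slots: int):
        self.free_slots = gpu_slots

    def acquire(self) -> bool:
        if self.free_slots == 0:
            return False
        self.free_slots -= 1
        return True

    def release(self) -> None:
        self.free_slots += 1


class DataLayer:
    """Manages data held on the remote side, keyed by task name."""
    def __init__(self, datasets: dict):
        self.datasets = datasets

    def fetch(self, task: str) -> list:
        return self.datasets[task]


class AlgorithmLayer:
    """Stand-in for real training: returns a description of the 'model'."""
    def train(self, task: str, samples: list) -> dict:
        return {"task": task, "trained_on": len(samples)}


@dataclass
class ServiceLayer:
    """Exposes submit/status calls and tracks training jobs."""
    infra: InfrastructureLayer
    data: DataLayer
    algo: AlgorithmLayer
    jobs: dict = field(default_factory=dict)
    _ids: count = field(default_factory=lambda: count(1))

    def submit(self, task: str) -> int:
        job_id = next(self._ids)
        if not self.infra.acquire():
            self.jobs[job_id] = {"status": "rejected", "model": None}
            return job_id
        try:
            model = self.algo.train(task, self.data.fetch(task))
            self.jobs[job_id] = {"status": "done", "model": model}
        finally:
            self.infra.release()  # always hand the slot back
        return job_id

    def status(self, job_id: int) -> str:
        return self.jobs[job_id]["status"]


class ApplicationLayer:
    """User-facing entry point: requests a model and returns the result."""
    def __init__(self, service: ServiceLayer):
        self.service = service

    def request_model(self, task: str):
        job_id = self.service.submit(task)
        return self.service.jobs[job_id]["model"]


if __name__ == "__main__":
    service = ServiceLayer(
        InfrastructureLayer(gpu_slots=1),
        DataLayer({"gesture": [1, 2, 3]}),
        AlgorithmLayer(),
    )
    print(ApplicationLayer(service).request_model("gesture"))
```

Because each layer depends only on the interface of the one below it, the training stub could be swapped for a real transfer-learning routine without touching the service or application code, which is the independent evolution the hierarchy is meant to allow.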

PTaaS offers several advantages:

- Privacy preservation: Devices share only anonymous queries rather than raw local data, so no sensitive user information is disclosed to remote servers.

- Centralized training: Powerful cloud or edge servers train models tailored to device-specific queries, which improves model performance while reducing computation and energy consumption on the device.

- Simplicity and flexibility: PTaaS simplifies user operations by migrating model training to the cloud, allowing devices to request model updates as needed and adapt to changing application scenarios.

- Cost fairness and profit potential: Service costs are based on consumed resources, ensuring fairness and motivating device participation. This pricing model also enables reasonable profits for service providers, promoting PTaaS adoption.
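As a rough illustration of resource-based pricing, the following Python sketch bills a training job by the resources it consumed and adds a provider margin. The resource categories, the `Rates` class, the `training_cost` function, and all numbers are assumptions made for the example; the article does not specify a pricing formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Hypothetical unit prices, in arbitrary currency units."""
    per_gpu_hour: float
    per_gb_stored: float
    per_gb_transferred: float


def training_cost(rates, gpu_hours, gb_stored, gb_transferred, margin=0.0):
    """Charge for consumed resources, plus an optional provider margin."""
    if min(gpu_hours, gb_stored, gb_transferred, margin) < 0:
        raise ValueError("usage and margin must be non-negative")
    base = (
        rates.per_gpu_hour * gpu_hours
        + rates.per_gb_stored * gb_stored
        + rates.per_gb_transferred * gb_transferred
    )
    return round(base * (1 + margin), 2)


if __name__ == "__main__":
    rates = Rates(per_gpu_hour=2.0, per_gb_stored=0.5, per_gb_transferred=0.25)
    # 3 GPU hours, 4 GB stored, 8 GB transferred, 10% provider margin
    print(training_cost(rates, 3, 4, 8, margin=0.1))
```

Under this scheme a device that requests a small model pays less than one that requests a large one, and the margin parameter is where the provider's profit would come from.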

In conclusion, this paper introduces Privacy-Preserving Training-as-a-Service (PTaaS) as an effective paradigm for on-device intelligence (ODI). PTaaS addresses the challenges of on-device model training by outsourcing training to cloud or edge providers and sharing only anonymous queries with remote servers. It delivers high-performance, customized on-device AI models while preserving data privacy and easing end-device constraints. The authors identify future research directions in enhancing privacy mechanisms, optimizing cloud-edge resource management, improving model training, and establishing standard specifications for sustainable PTaaS development.
