Researchers at Stanford University Explore Direct Preference Optimization (DPO): A New Frontier in Machine Learning and Human Feedback

The intersection of reinforcement learning (RL) and large language models (LLMs) is an active area of computational linguistics. These models, refined primarily through human feedback, are remarkably capable of understanding and generating human-like text, yet they are still being improved to capture subtler human preferences. The main challenge is ensuring that LLMs interpret prompts and generate responses in line with what people actually intend. Traditional methods often struggle with the complexity and subtlety these tasks require, which calls for advances that close the gap between human expectations and machine output.

Existing research in language model training encompasses frameworks such as Reinforcement Learning from Human Feedback (RLHF), utilizing methods like Proximal Policy Optimization (PPO) for aligning LLMs with human intent. Innovations extend to the use of Monte Carlo Tree Search (MCTS) and integration of diffusion models for text generation, enhancing the quality and adaptability of model responses. This progression in LLM training leverages dynamic and context-sensitive approaches, refining how machines comprehend and generate language aligned with human feedback.

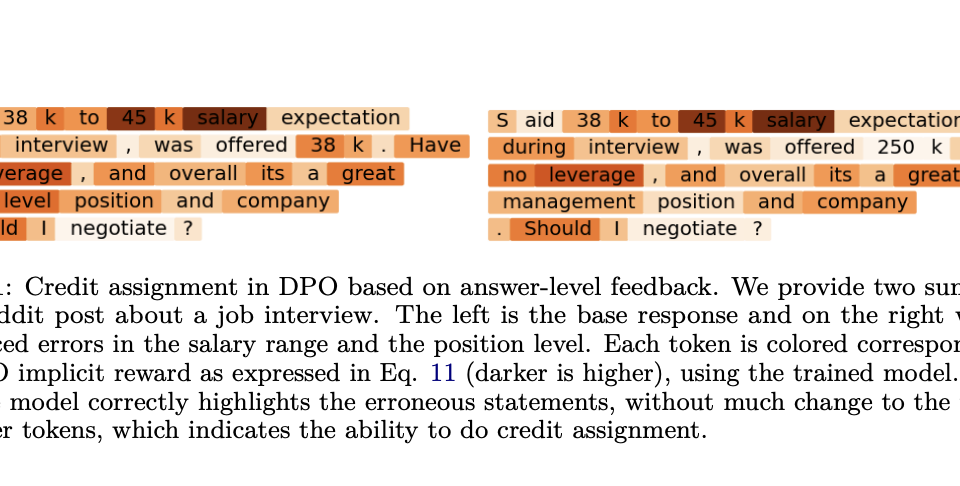

Stanford researchers have introduced Direct Preference Optimization (DPO), a streamlined method for aligning LLMs with human preferences. DPO simplifies the RL pipeline by expressing the reward function directly in terms of the policy’s outputs, eliminating the need to learn a separate reward model. Framing the problem as a token-level Markov Decision Process (MDP) gives finer control over the model’s language generation, in contrast to traditional methods that often require more complex and computationally expensive procedures.
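To make the idea of a reward expressed through the policy concrete, here is a minimal sketch of the standard DPO objective in plain Python. It assumes the per-token log-probabilities of a preferred and a rejected response are already available from both the trained policy and a frozen reference model. The function names, the list-of-floats inputs, and the default `beta=0.1` are illustrative assumptions, not details taken from the study.

```python
import math

def sequence_logprob(token_logprobs):
    """Sum per-token log-probabilities into a sequence log-probability."""
    return sum(token_logprobs)

def implicit_reward(policy_token_logprobs, ref_token_logprobs, beta=0.1):
    """Implicit reward: beta * log(pi(y|x) / pi_ref(y|x)), as a sum over tokens."""
    return beta * (
        sequence_logprob(policy_token_logprobs)
        - sequence_logprob(ref_token_logprobs)
    )

def dpo_loss(policy_chosen, ref_chosen, policy_rejected, ref_rejected, beta=0.1):
    """Negative log-sigmoid of the reward margin between chosen and rejected."""
    margin = (
        implicit_reward(policy_chosen, ref_chosen, beta)
        - implicit_reward(policy_rejected, ref_rejected, beta)
    )
    # -log(sigmoid(margin)) written in a numerically stable form.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Hypothetical per-token log-probs for a two-token response pair.
loss = dpo_loss(
    policy_chosen=[-0.5, -1.0], ref_chosen=[-1.0, -2.0],
    policy_rejected=[-2.0, -3.0], ref_rejected=[-1.0, -2.0],
)
```

No separate reward model appears anywhere in the sketch: the only learned quantity is the policy, and the loss falls as the policy assigns relatively more probability to the preferred response than the reference model does.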

To assess the approach in practice, the study used the Reddit TL;DR summarization dataset. Training and evaluation involved search techniques such as beam search and MCTS, which optimize each decision point in the model’s output. These methods apply feedback directly within policy learning, with the aim of making the generated text more relevant and better aligned with human preferences. The application illustrates DPO’s capacity to refine language model responses.
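As an illustration of what searching over decision points means, the following is a minimal beam search sketch over a toy next-token distribution. The toy model, its probabilities, and all function names are hypothetical stand-ins; a real setup would score continuations with the trained policy, and this is not the study’s implementation.

```python
import math

def toy_next_token_probs(prefix):
    """Hypothetical toy model: next-token probabilities given a token prefix."""
    if not prefix:
        return {"a": 0.6, "b": 0.4}
    if prefix[-1] == "a":
        return {"a": 0.1, "b": 0.4, "<eos>": 0.5}
    return {"a": 0.05, "<eos>": 0.95}

def beam_search(next_token_probs, beam_width=2, max_len=5, eos="<eos>"):
    """Return the highest-scoring (tokens, log_prob) found by beam search."""
    beams = [((), 0.0)]  # each beam is (tokens, cumulative log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            for tok, p in next_token_probs(tokens).items():
                cand = (tokens + (tok,), score + math.log(p))
                # Completed sequences leave the beam; the rest keep expanding.
                (finished if tok == eos else candidates).append(cand)
        if not candidates:
            break
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    pool = finished or beams  # fall back to unfinished beams if none completed
    return max(pool, key=lambda c: c[1])

greedy_tokens, greedy_score = beam_search(toy_next_token_probs, beam_width=1)
beam_tokens, beam_score = beam_search(toy_next_token_probs, beam_width=2)
```

In this toy setup a beam width of 1 behaves like greedy decoding and commits to the locally best first token, while a width of 2 keeps the alternative alive and finds a sequence with higher overall probability.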

The study reports measurable gains in model performance. With beam search applied within the DPO framework, the model’s win rate improved by 10-15% over the base policy on 256 held-out test prompts from the Reddit TL;DR dataset, as evaluated by GPT-4. These figures indicate that DPO can improve the alignment and accuracy of language model responses under these specific test conditions.
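For readers unfamiliar with the metric, a win rate is simply the fraction of prompts on which a judge prefers one system’s output. The sketch below shows the arithmetic for a 256-prompt evaluation. The verdict counts are placeholder values chosen for illustration, not the study’s data, and the function names are hypothetical. Treating the reported improvement as a difference in percentage points is also an assumption, since the text does not say whether the figure is absolute or relative.

```python
def win_rate(verdicts):
    """Fraction of prompts on which the judged system was preferred."""
    return sum(1 for won in verdicts if won) / len(verdicts)

def improvement_in_points(base_verdicts, new_verdicts):
    """Difference between two win rates, in percentage points."""
    return 100.0 * (win_rate(new_verdicts) - win_rate(base_verdicts))

# Placeholder judge verdicts over 256 prompts (True = preferred by the judge).
base_policy = [True] * 128 + [False] * 128
with_beam_search = [True] * 160 + [False] * 96

gain = improvement_in_points(base_policy, with_beam_search)
```

With a test set of this size, each prompt contributes roughly 0.4 percentage points, so small differences in win rate should be read with some caution.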

To conclude, the research introduced Direct Preference Optimization (DPO), a streamlined approach for training LLMs using a token-level Markov Decision Process. DPO expresses the reward function directly through the policy’s outputs, bypassing the need for a separate reward learning stage. The method showed a 10-15% improvement in win rate on the Reddit TL;DR dataset, supporting its ability to improve language model accuracy and alignment with human feedback. These findings underscore the potential of DPO to simplify and improve the training of generative AI models.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.