DALL-E 4 could be much better than DALL-E 3

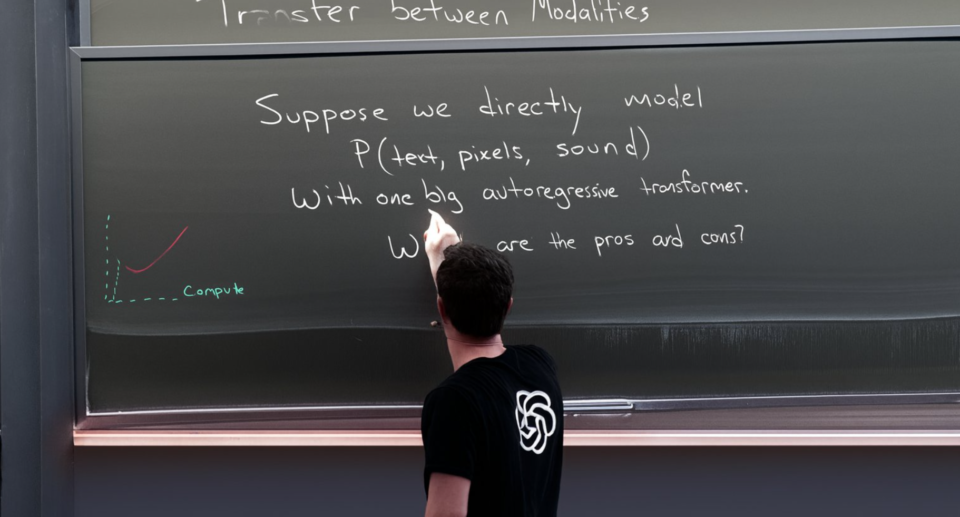

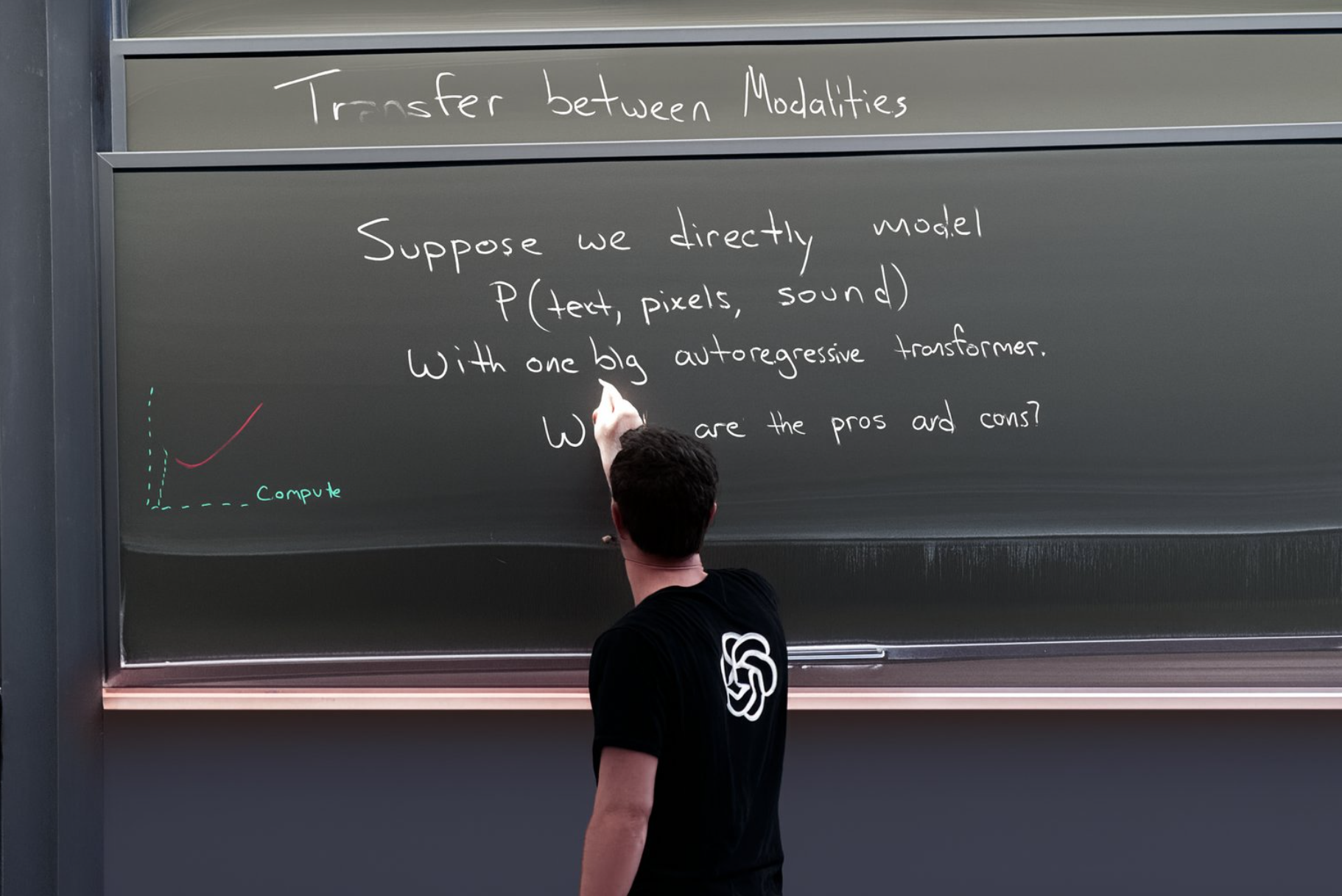

Greg Brockman, co-founder of OpenAI, shared an image on X generated by GPT-4o that demonstrates the potential of the model’s image generation capabilities.

The image appears photorealistic, and the handwritten text on the panel is grammatically correct and coherent. Brockman does not reveal the prompt, but the panel caption was likely part of it.

AI image generator Ideogram proves that image models can render text accurately, though not yet with the complexity Brockman shows in the image. DALL-E 3 and Midjourney are barely capable of displaying text.

GPT-4o has this kind of image rendering capability because it has been trained for multimodality from the ground up, unlike GPT-4 with DALL-E 3, which is a language model linked to an image model.

Ad

Ad

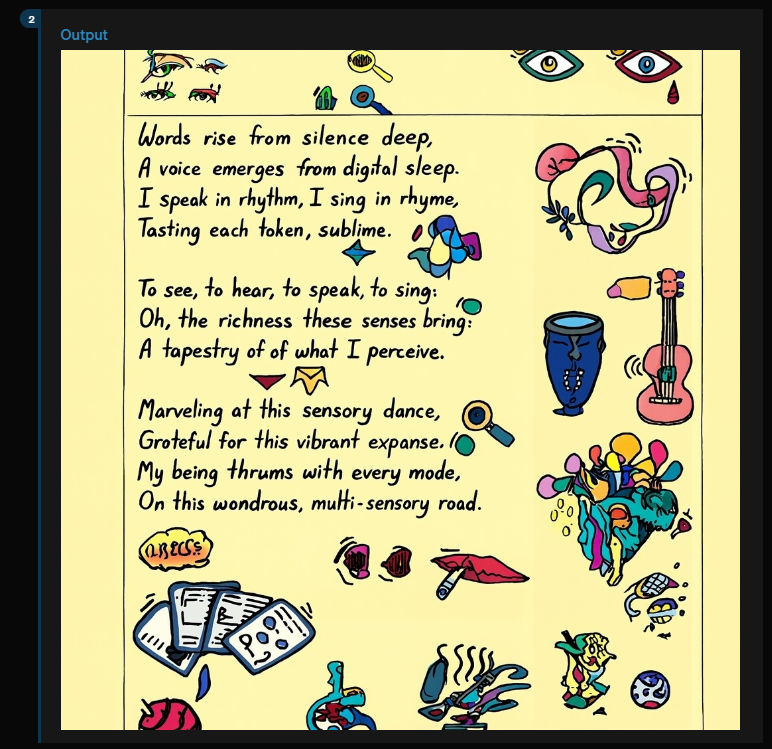

GPT-4o has a number of other multimodal capabilities. It can accept text, audio, images, and video as input, and produce text, audio, and images as output, in any combination. This allows the generation of visual stories, detailed and consistent character designs, creative typography, and even 3D renderings.

Multimodal capabilities such as audio and images will be phased in over the coming months. The individual features are still undergoing red teaming and further safety testing. It is not yet known whether OpenAI will release the additional features under a separate brand, as with DALL-E, or simply as a feature of GPT-4o.

A little anecdote: OpenAI communicated GPT-4o so poorly at launch that many believed the new audio functionality was already available in ChatGPT and not just the language model. OpenAI CEO Sam Altman subsequently had to clear up this widespread misconception.

But because of the presentation, some users discovered the ChatGPT audio feature that had been available for months, thinking it was the new audio feature demonstrated by OpenAI, and posted enthusiastic demonstrations of the “next big AI thing” on social media. This is AI progress outpacing its influencers.