Nested Learning: A New Machine Learning Approach for Continual Learning that Views Models as Nested Optimization Problems to Enhance Long Context Processing

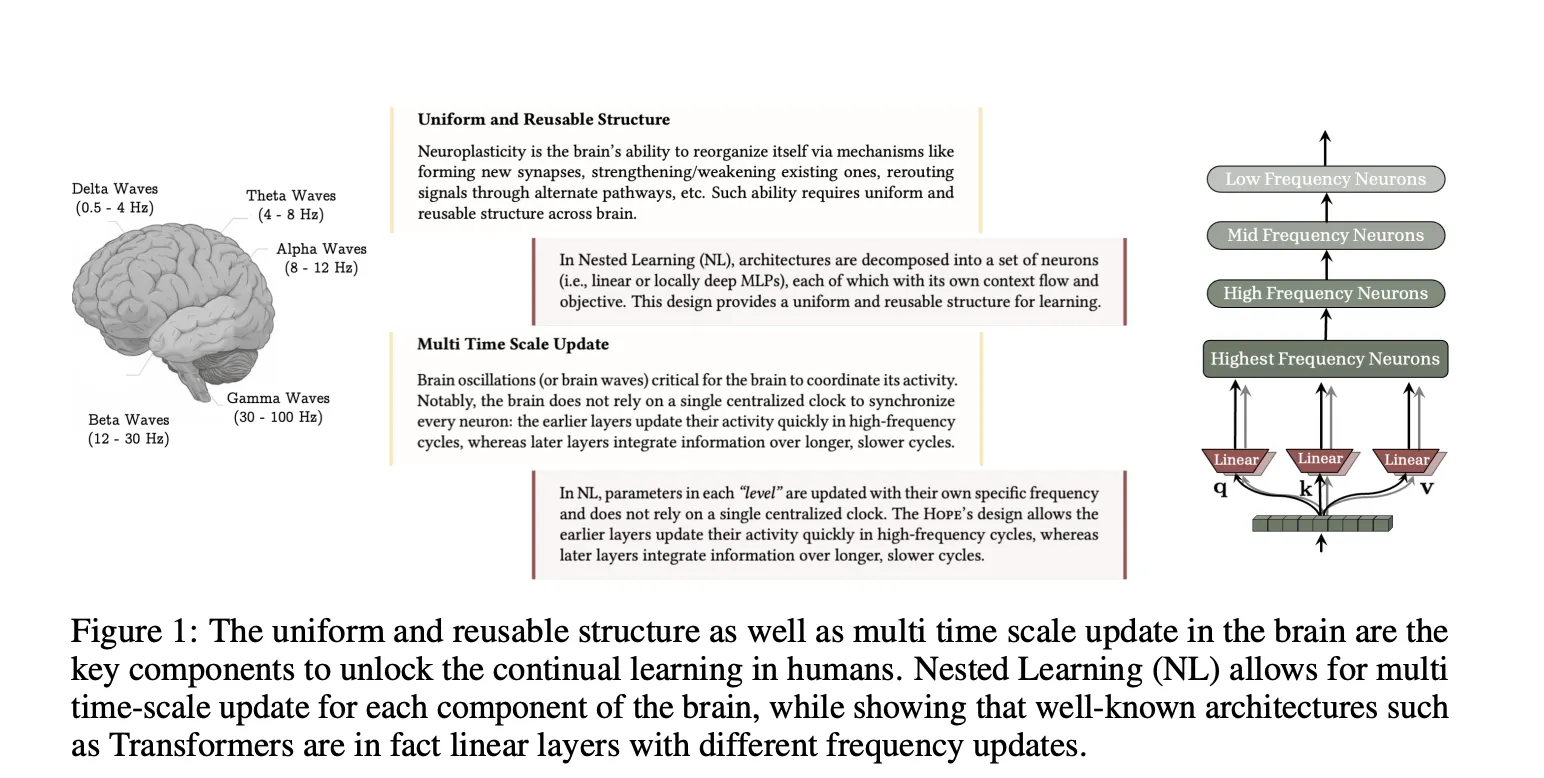

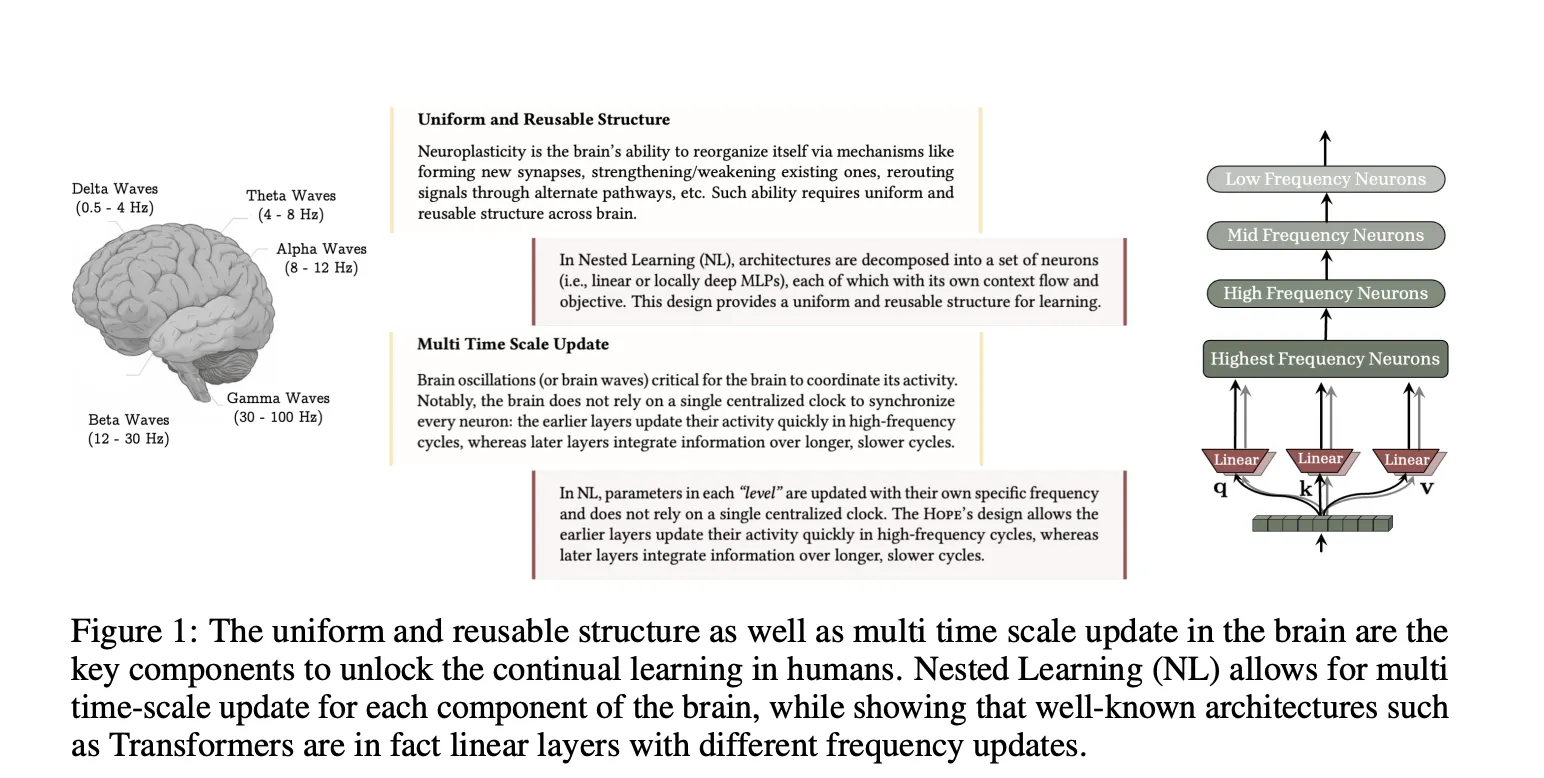

How can we build AI systems that keep learning new information over time without forgetting what they learned before or retraining from scratch? Google researchers have introduced Nested Learning, a machine learning approach that treats a model as a collection of smaller nested optimization problems instead of a single network trained by one outer loop. The goal is to address catastrophic forgetting and move large models toward continual learning, closer to how biological brains manage memory and adaptation over time.

What is Nested Learning?

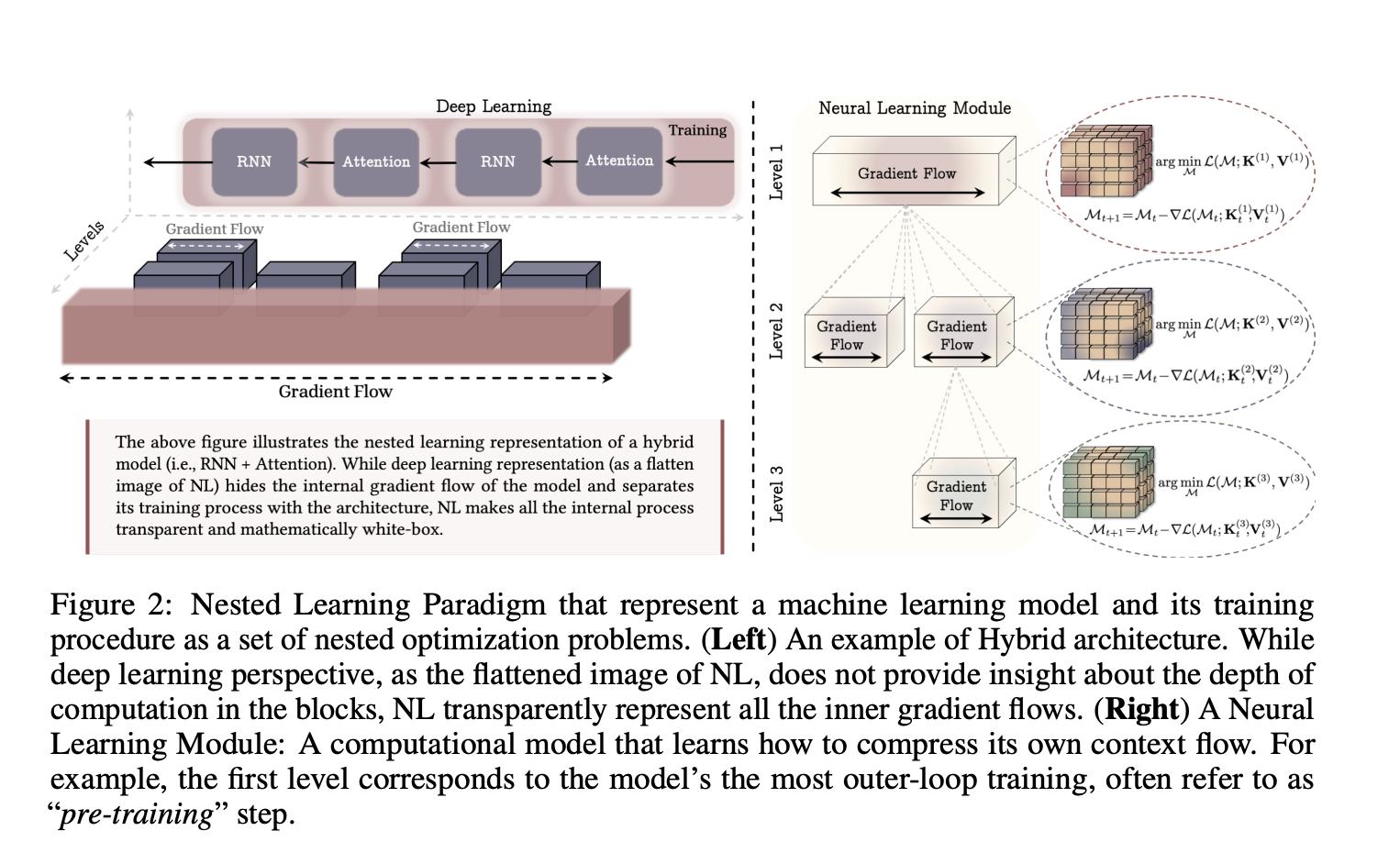

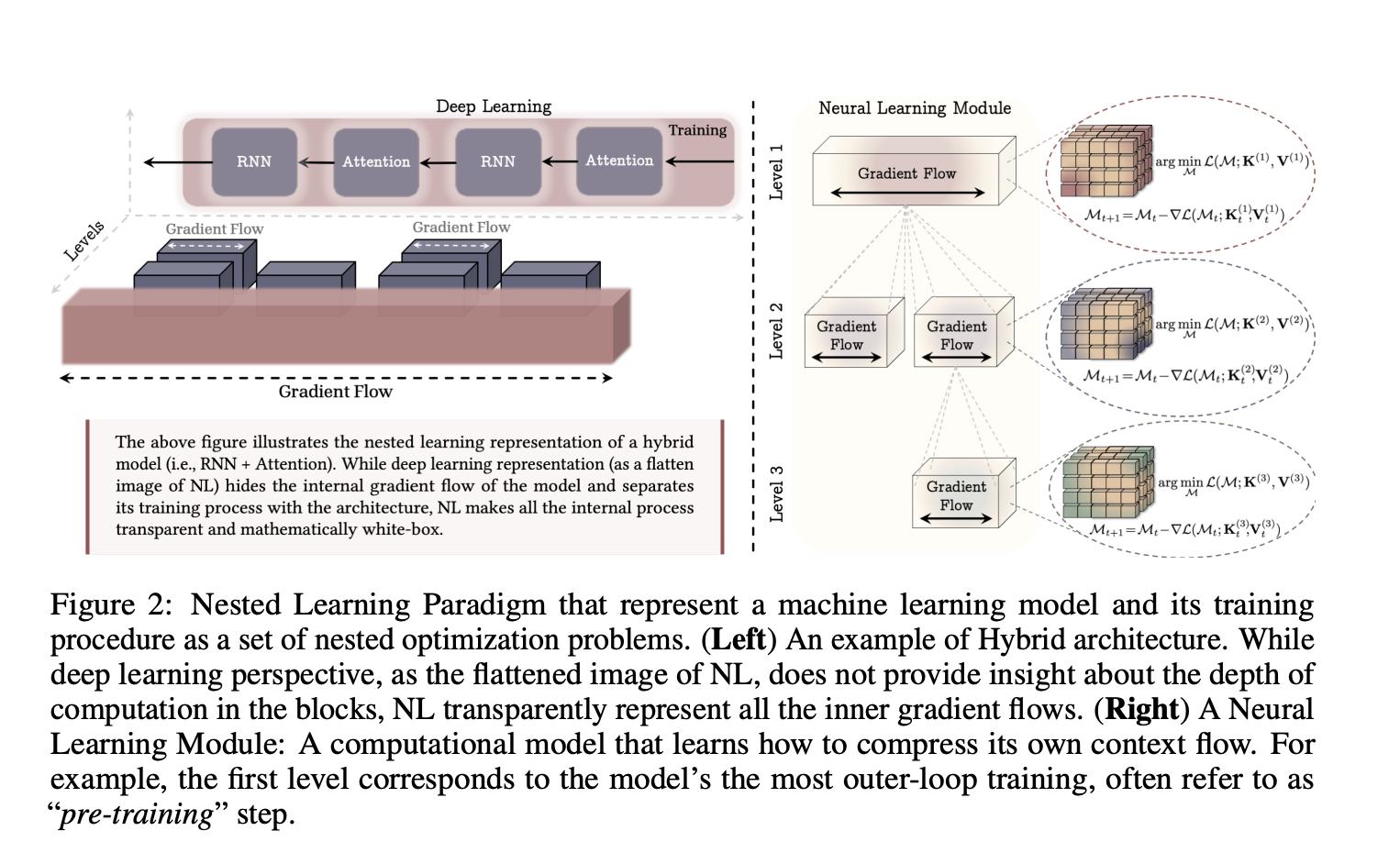

The Google research paper, ‘Nested Learning: The Illusion of Deep Learning Architectures’, models a complex neural network as a set of coherent optimization problems, nested or running in parallel, that are optimized together. Each internal problem has its own context flow (the sequence of inputs, gradients, or states that the component observes) and its own update frequency.

Instead of seeing training as a flat stack of layers plus one optimizer, Nested Learning imposes an ordering by update frequency. Parameters that update often sit at inner levels, while slowly updated parameters form outer levels. This hierarchy defines a Neural Learning Module, in which every level compresses its own context flow into its parameters. The research team shows that this view covers standard backpropagation on an MLP, linear attention, and common optimizers, all as instances of associative memory.

In this framework, associative memory is any operator that maps keys to values and is trained with an internal objective. The research team formalizes associative memory and then shows that backpropagation itself can be written as a one-step gradient descent update that learns a mapping from inputs to local surprise signals, that is, the gradient of the loss with respect to the layer's output.
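To make the associative memory reading of backpropagation concrete, here is a minimal NumPy sketch for a single linear layer. It assumes a squared-error loss and a proximal form of the inner objective, `<W x, delta> + ||W - W_t||^2 / (2 * eta)`; the variable names and this exact objective are illustrative assumptions, not code from the paper. The check is that the usual gradient step is the minimizer of that inner objective, so the layer can be read as a memory that maps the key `x` to the surprise signal `delta`.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out, eta = 4, 3, 0.1

W_t = rng.normal(size=(d_out, d_in))   # current weights of a linear layer y = W x
x = rng.normal(size=d_in)              # key: the layer input
target = rng.normal(size=d_out)        # hypothetical regression target

# Local surprise signal: gradient of 0.5 * ||y - target||^2 w.r.t. the output y.
y = W_t @ x
delta = y - target

# Standard backpropagation step for this layer: dL/dW = outer(delta, x).
W_backprop = W_t - eta * np.outer(delta, x)

def inner_objective_grad(W):
    """Gradient in W of <W x, delta> + ||W - W_t||_F^2 / (2 * eta)."""
    return np.outer(delta, x) + (W - W_t) / eta

# If the backprop step minimizes the inner objective, this gradient vanishes there.
residual = np.abs(inner_objective_grad(W_backprop)).max()
print(f"max |inner gradient| at the backprop update: {residual:.2e}")
```

Because the inner objective is convex in `W`, a vanishing gradient at `W_backprop` means the ordinary update is its unique minimizer under these assumptions.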

Deep Optimizers as Associative Memory

Once optimizers are treated as learning modules, Nested Learning suggests redesigning them with richer internal objectives. Standard momentum can be written as a linear associative memory over past gradients, trained with a dot-product similarity objective. This internal objective produces a Hebbian-like update rule that does not model dependencies between data samples.
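The momentum claim can be checked numerically. The sketch below assumes the accumulate-style momentum convention `m = alpha * m + g` and an inner loss of `-<m, g>`; the function names and the sign convention are illustrative choices, not taken from the paper. It compares the classical recurrence with the same state written as decayed gradient steps on that inner loss.

```python
import numpy as np

def classical_momentum(grads, alpha):
    """Textbook momentum buffer: m <- alpha * m + g."""
    m = np.zeros_like(grads[0])
    for g in grads:
        m = alpha * m + g
    return m

def memory_view_momentum(grads, alpha, inner_lr=1.0):
    """Same state, written as decayed gradient steps on the inner loss -<m, g>."""
    m = np.zeros_like(grads[0])
    for g in grads:
        inner_grad = -g                      # d/dm of -<m, g>; independent of m
        m = alpha * m - inner_lr * inner_grad
    return m

rng = np.random.default_rng(0)
grads = [rng.normal(size=5) for _ in range(10)]  # stand-ins for a gradient stream

m_classic = classical_momentum(grads, alpha=0.9)
m_memory = memory_view_momentum(grads, alpha=0.9)
print(np.allclose(m_classic, m_memory))
```

Note that `inner_grad` never depends on the current memory `m` or on earlier gradients, which is one way to see why this Hebbian-like rule cannot account for relationships between samples.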

The research team replaces this similarity objective with an L2 regression loss over gradient features, which yields an update rule that better manages limited memory capacity and better memorizes gradient sequences. They then generalize the momentum memory from a linear map to an MLP and define Deep Momentum Gradient Descent, where the momentum state is produced by a neural memory and can pass through a nonlinear function such as Newton-Schulz. This perspective also recovers the Muon optimizer as a special case.
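A small sketch can illustrate the two ingredients named above. It makes several assumptions: the key `k` and value `v` are hypothetical stand-ins for gradient features, the memory is a plain matrix rather than an MLP, and `newton_schulz` is the textbook cubic iteration, which may differ from the variant used in the paper or in Muon. The first part contrasts a Hebbian step with the delta-rule step that follows from an L2 regression loss; the second part approximately orthogonalizes a matrix.

```python
import numpy as np

def hebbian_step(M, k, v, lr):
    # Gradient step on the dot-product loss -<M k, v>; ignores what M already stores.
    return M + lr * np.outer(v, k)

def delta_rule_step(M, k, v, lr):
    # Gradient step on the L2 regression loss 0.5 * ||M k - v||^2.
    return M - lr * np.outer(M @ k - v, k)

def newton_schulz(G, steps=50):
    """Textbook cubic Newton-Schulz iteration; approximately orthogonalizes G."""
    X = G / (np.linalg.norm(G) + 1e-12)    # Frobenius scaling keeps singular values <= 1
    for _ in range(steps):
        X = 1.5 * X - 0.5 * X @ X.T @ X
    return X

rng = np.random.default_rng(0)
k = rng.normal(size=4)
k /= np.linalg.norm(k)                     # unit-norm key
v = rng.normal(size=3)                     # value to memorize

M_hebb = np.zeros((3, 4))
M_delta = np.zeros((3, 4))
for _ in range(50):                        # present the same pair repeatedly
    M_hebb = hebbian_step(M_hebb, k, v, lr=0.5)
    M_delta = delta_rule_step(M_delta, k, v, lr=0.5)

# Hebbian recall keeps growing with repetition; delta-rule recall settles on v.
print(np.linalg.norm(M_hebb @ k - v), np.linalg.norm(M_delta @ k - v))

O = newton_schulz(rng.normal(size=(4, 3)))
print(np.abs(O.T @ O - np.eye(3)).max())   # close to zero when O has orthonormal columns
```

The delta rule subtracts what the memory already recalls before writing, so repeated or correlated inputs do not pile up, which is one intuition for the better capacity management described above.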

Continuum Memory System

In typical sequence models, attention acts as working memory over the current context window, while feedforward blocks store pretraining knowledge as long-term memory that is rarely updated after training. The Nested Learning researchers extend this binary view to a Continuum Memory System, or CMS.

CMS is defined as a chain of MLP blocks, MLP(f₁) through MLP(fₖ), where each block has its own update frequency and chunk size. For an input sequence, the output is obtained by sequentially applying these blocks. The parameters of each block are updated only every C^(ℓ) steps, so each block compresses a different time scale of context into its parameters. A standard Transformer with one feedforward block is recovered as the special case with k equal to 1.
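The update schedule can be sketched in a few lines of NumPy. Everything here beyond "a chain of MLP blocks, each updated every C^(ℓ) steps" is an assumption made for illustration: the class name `FrequencyMLP`, the residual block shape, the local squared-error objective against a supplied target, the choice to train only the output matrix, and the ordering of blocks from fast to slow.

```python
import numpy as np

class FrequencyMLP:
    """Residual MLP block whose parameters change only every `chunk` steps."""

    def __init__(self, dim, hidden, chunk, rng, lr=0.05):
        self.W1 = rng.normal(scale=0.1, size=(hidden, dim))
        self.W2 = rng.normal(scale=0.1, size=(dim, hidden))
        self.chunk = chunk                      # plays the role of C^(l)
        self.lr = lr
        self.grad_acc = np.zeros_like(self.W2)  # gradients gathered within a chunk
        self.num_updates = 0

    def forward(self, x):
        self.h = np.tanh(self.W1 @ x)
        return x + self.W2 @ self.h

    def observe(self, step, err):
        # err = y - target; gradient of 0.5 * ||y - target||^2 w.r.t. W2 is outer(err, h).
        self.grad_acc += np.outer(err, self.h)
        if (step + 1) % self.chunk == 0:        # apply the update only at chunk boundaries
            self.W2 -= self.lr * self.grad_acc / self.chunk
            self.grad_acc[:] = 0.0
            self.num_updates += 1

def run_cms(blocks, xs, targets):
    """Apply the blocks sequentially at every step; each block updates on its own clock."""
    out = None
    for step, (x, t) in enumerate(zip(xs, targets)):
        out = x
        for block in blocks:
            out = block.forward(out)
            block.observe(step, out - t)        # local objective (an assumption)
    return out

rng = np.random.default_rng(0)
dim = 4
blocks = [FrequencyMLP(dim, 8, chunk, rng) for chunk in (1, 2, 4)]
xs = rng.normal(size=(8, dim))
targets = rng.normal(size=(8, dim))

out = run_cms(blocks, xs, targets)
update_counts = [b.num_updates for b in blocks]
print(update_counts)  # [8, 4, 2]
```

Over eight steps, the three blocks are updated eight, four, and two times, so each one summarizes the sequence at a different time scale. With a single block, the chain reduces to one feedforward layer, matching the k equal to 1 case.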

This construction turns long-term memory into a spectrum of levels across update frequencies, instead of a single static feedforward layer. The paper connects this directly to multi-timescale synaptic and systems consolidation processes in the brain, where different parts of the system learn at different rates while sharing a common architecture.

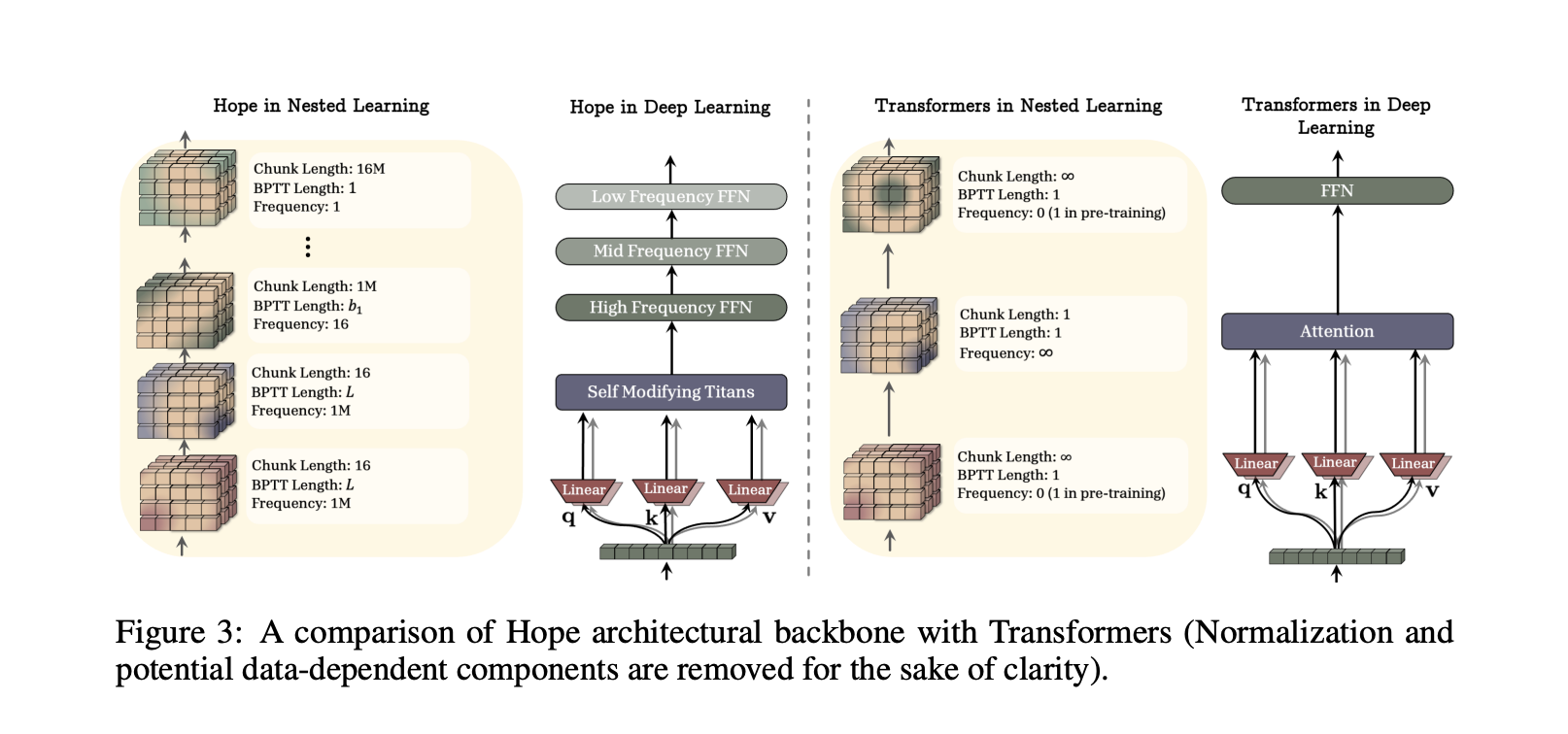

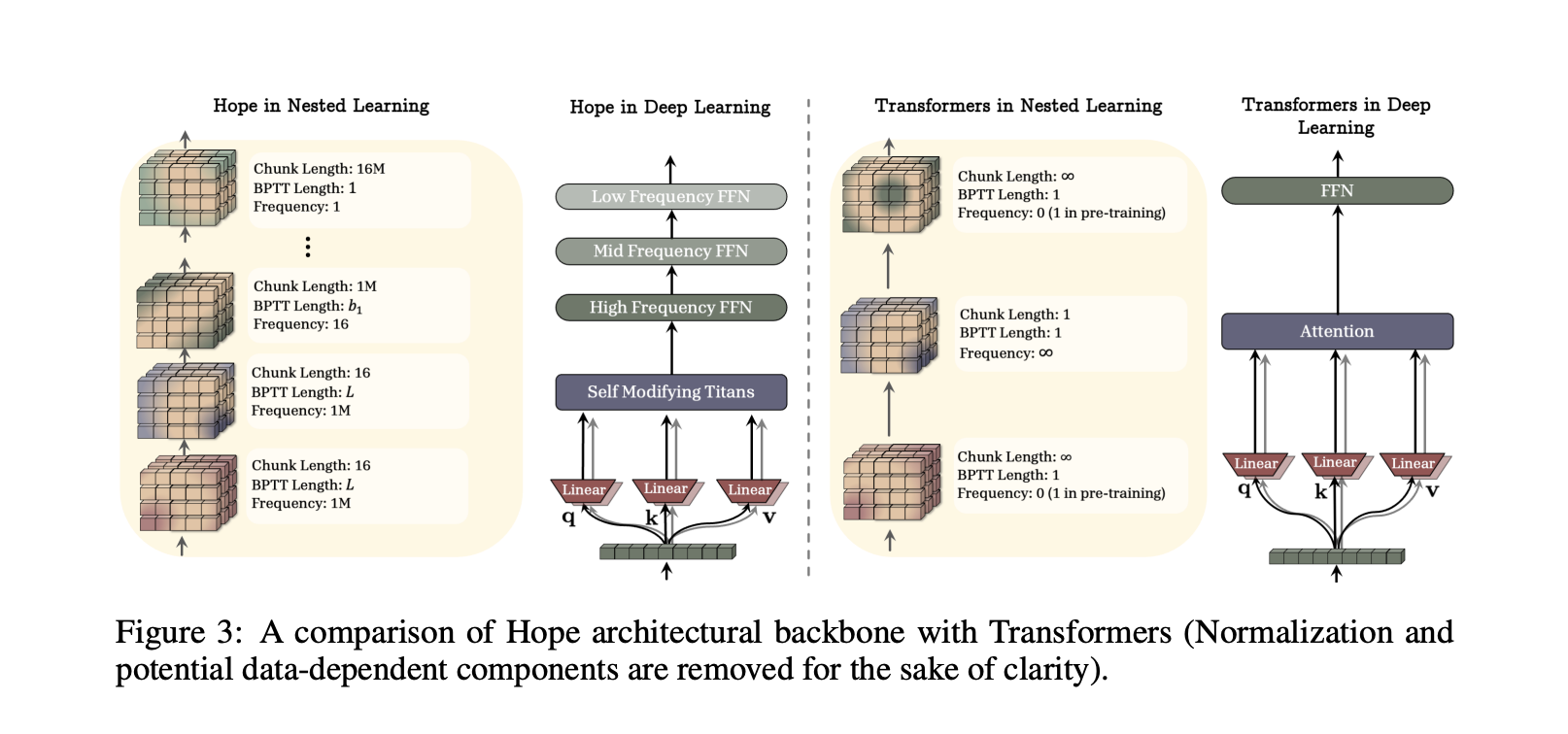

HOPE, A Self-Modifying Architecture Built on Titans

To show that Nested Learning is practical, the research team designed HOPE, a self-referential sequence model that applies the paradigm to a recurrent architecture. HOPE is built as a variant of Titans, a long-term memory architecture in which a neural memory module learns to memorize surprising events at test time and helps attention attend to tokens far in the past.

Titans has only two levels of parameter update, which yields first-order in-context learning. HOPE extends Titans in two ways. First, it is self-modifying: it can optimize its own memory through a self-referential process and can, in principle, support unbounded levels of in-context learning. Second, it integrates Continuum Memory System blocks so that memory updates occur at multiple frequencies and scale to longer context windows.

Understanding the Results

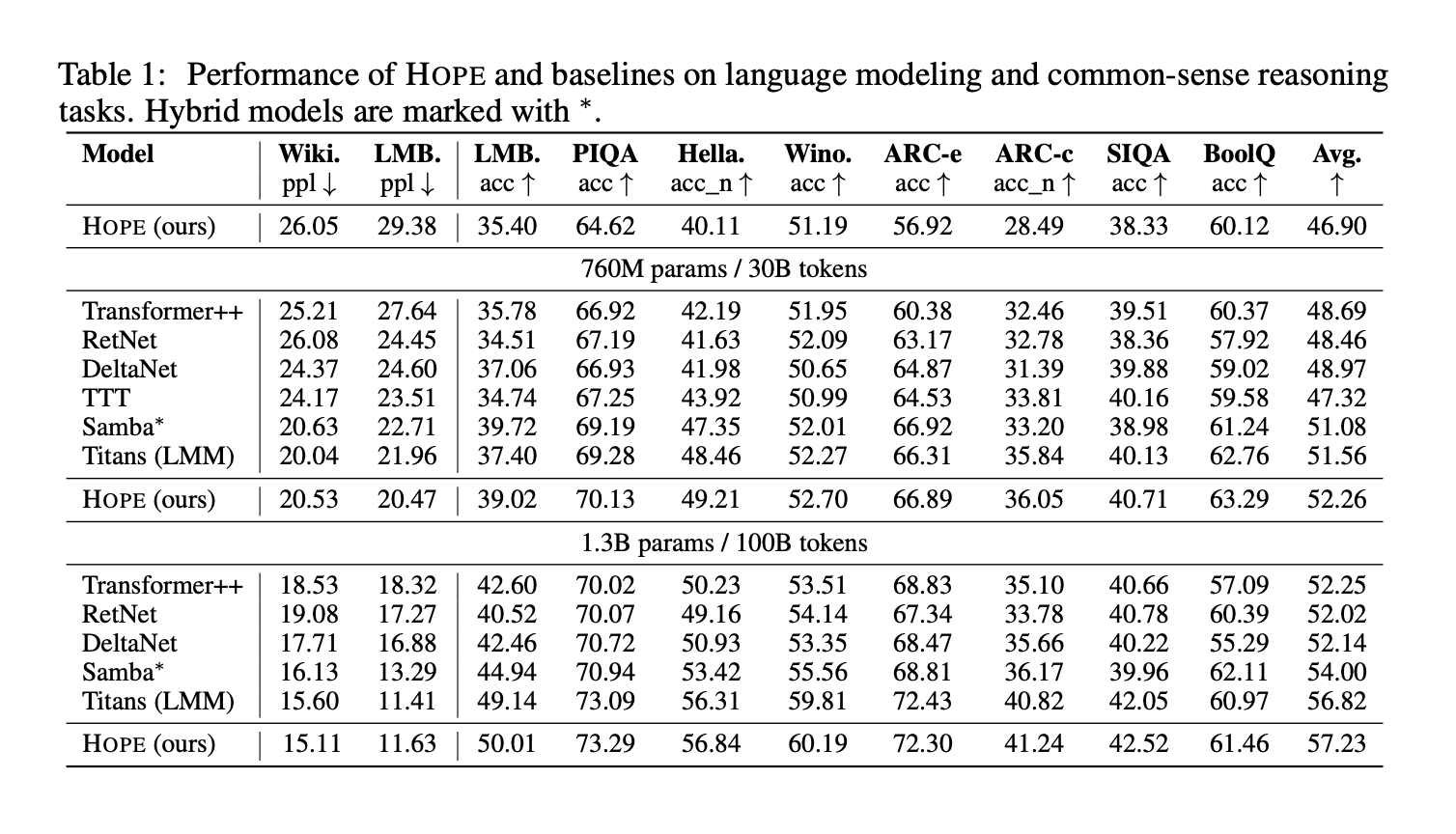

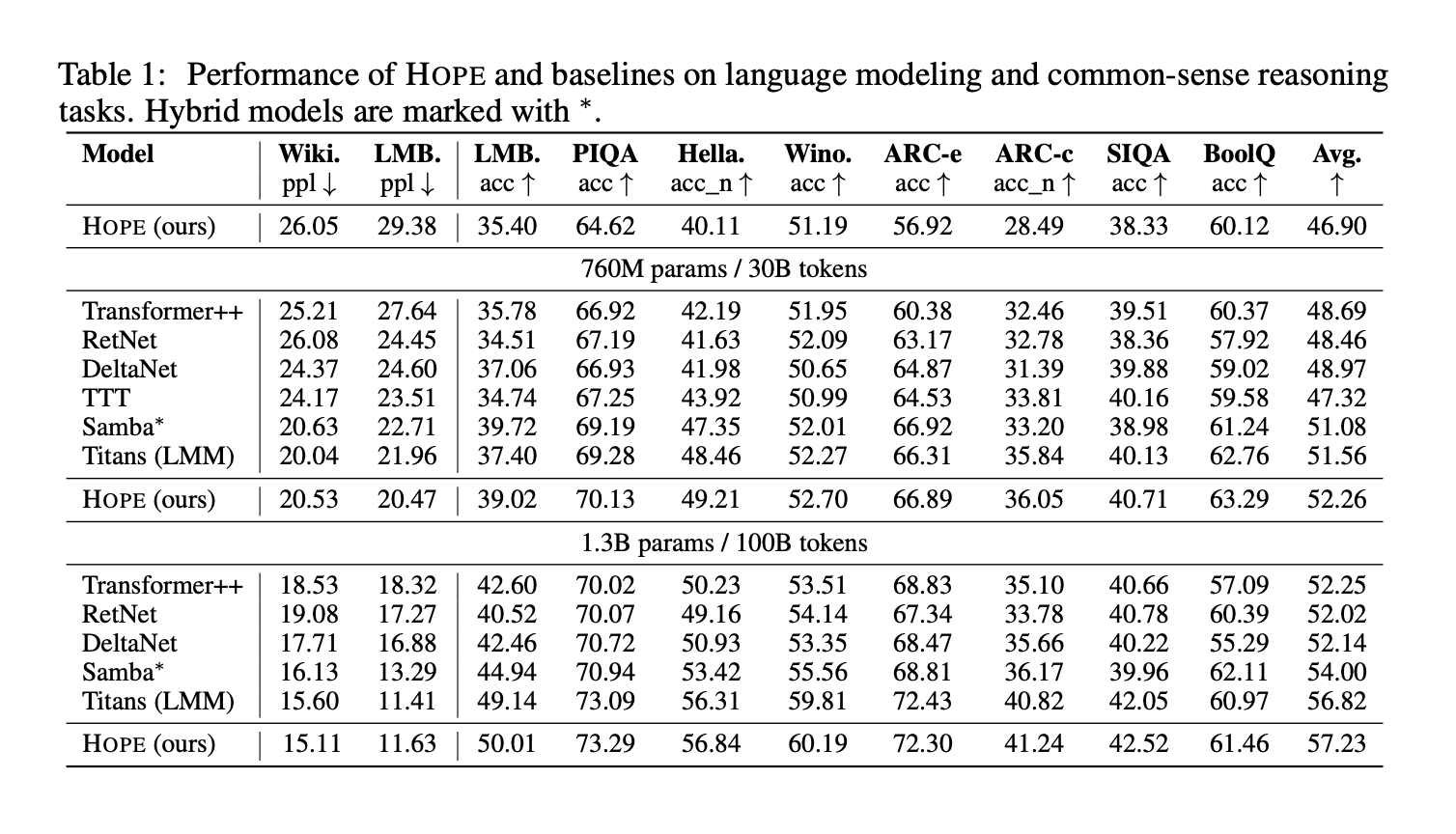

The research team evaluates HOPE and baselines on language modeling and common-sense reasoning tasks at three parameter scales: 340M, 760M, and 1.3B. Benchmarks include Wiki and LMB perplexity for language modeling, and PIQA, HellaSwag, WinoGrande, ARC Easy, ARC Challenge, Social IQa, and BoolQ accuracy for reasoning. Table 1 reports results for HOPE, Transformer++, RetNet, Gated DeltaNet, TTT, Samba, and Titans.

Key Takeaways

- Nested Learning treats a model as multiple nested optimization problems with different update frequencies, which directly targets catastrophic forgetting in continual learning.

- The framework reinterprets backpropagation, attention, and optimizers as associative memory modules that compress their own context flow, giving a unified view of architecture and optimization.

- Deep optimizers in Nested Learning replace simple dot-product similarity with richer objectives such as L2 regression and use neural memories, which leads to more expressive and context-aware update rules.

- The Continuum Memory System models memory as a spectrum of MLP blocks that update at different rates, creating short-, medium-, and long-range memory rather than one static feedforward layer.

- The HOPE architecture, a self-modifying variant of Titans built using Nested Learning principles, shows improved language modeling, long-context reasoning, and continual learning performance compared to strong Transformer and recurrent baselines.

Nested Learning is a useful reframing of deep networks as Neural Learning Modules that integrate architecture and optimization into one system. The introduction of Deep Momentum Gradient Descent, the Continuum Memory System, and the HOPE architecture gives a concrete path to richer associative memory and better continual learning. Overall, this work turns continual learning from an afterthought into a primary design axis.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.