US unveils comprehensive AI strategy for national security

The US government has presented a comprehensive memorandum on artificial intelligence. The document lays out a detailed strategy for how AI should be used and controlled for national security purposes.

The US government wants to exert more control and coordination over the development and use of artificial intelligence (AI) in the national security domain. A new memorandum published by the White House sets out detailed guidelines and timelines for dealing with AI in the security sector for the first time.

“If we don’t act more intentionally to seize our advantages, if we don’t deploy AI more quickly and more comprehensively to strengthen our national security, we risk squandering our hard-earned lead,” warns Jake Sullivan, US National Security Advisor, in his speech at the National Defense University.

Three central pillars of the AI strategy

The memorandum is based on three main goals: First, the US aims to secure its global leadership position in AI development. Second, AI should be used specifically for national security purposes. Third, the US government wants to create a stable international framework for responsible AI development.

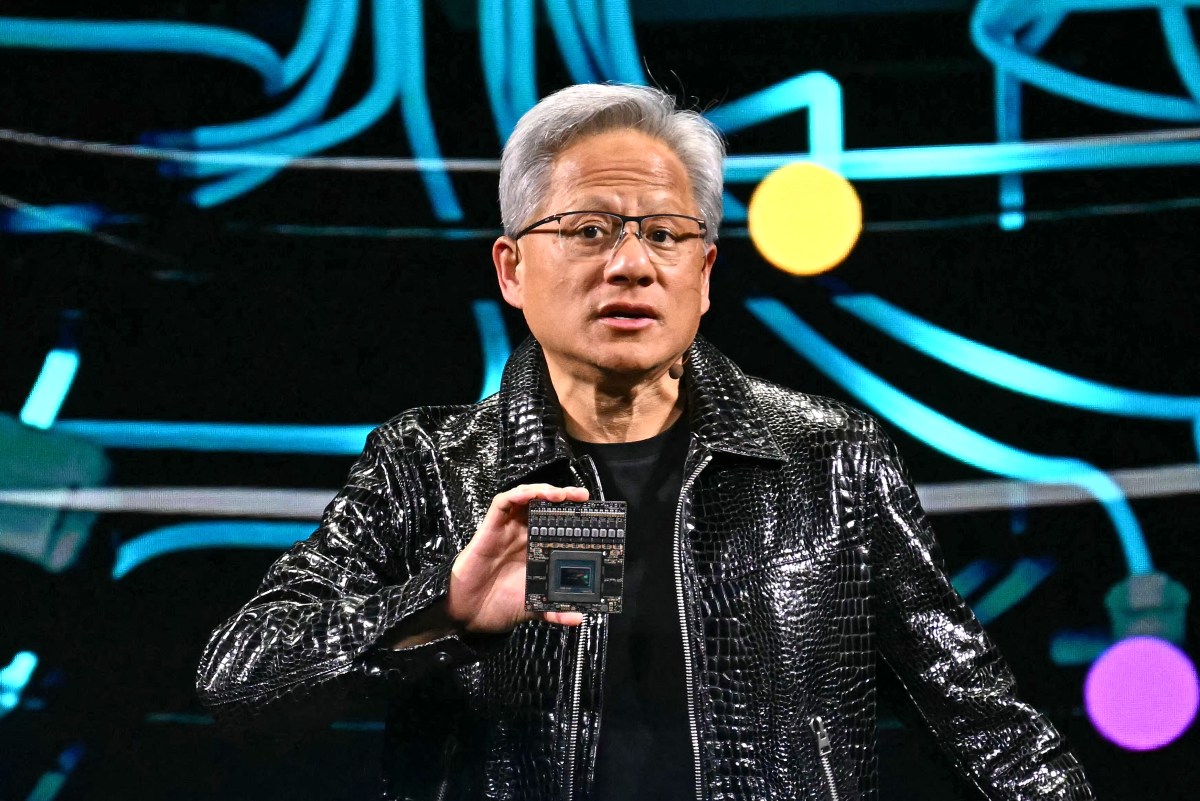

For the first goal, the memorandum outlines concrete measures: The US wants to continue attracting global AI talent, drive the development of advanced chips, and build the necessary infrastructure. “America has to continue to be a magnet for global, scientific, and tech talent,” Sullivan emphasizes.

The second goal focuses on the concrete use of AI for national security. Agencies should be able to deploy AI systems faster and more effectively while following strict security and ethics guidelines. “It means we need to make significant technical, organizational, and policy changes to ease collaboration with the actors that are driving this development,” says Sullivan.

The third goal centers on international cooperation. The US wants to work with allies to create standards for secure and trustworthy AI development. “Throughout its history, the United States has played an essential role in shaping the international order to enable the safe, secure, and trustworthy global adoption of new technologies while also protecting democratic values. These contributions have ranged from establishing nonproliferation regimes for biological, chemical, and nuclear weapons to setting the foundations for multi-stakeholder governance of the Internet. Like these precedents, AI will require new global norms and coordination mechanisms, which the United States Government must maintain an active role in crafting,” the memorandum states.

Timetable for implementation

The memorandum sets out a detailed timetable for implementation:

- 30 Days:
  - DOD [Department of Defense] and ODNI [Office of the Director of National Intelligence] to establish working group to address AI procurement issues for national security systems and develop recommendations for acquisition processes

- 45 Days:
  - Form AI National Security Coordination Group with Chief AI Officers from major agencies to harmonize policies for AI development, accreditation, acquisition, use, and evaluation across national security systems

- 90 Days:
  - APNSA [Assistant to the President for National Security Affairs] to streamline visa processing for AI talent in sensitive technologies to strengthen US competitiveness
  - NSC [National Security Council] and ODNI to review Intelligence Priorities Framework to better assess foreign threats to US AI ecosystem
  - Coordination Group to establish Talent Committee to standardize hiring and retention of AI talent for national security

- 120 Days:
  - State Department and agencies to identify AI training opportunities to increase workforce AI competencies
  - NSA [National Security Agency] to develop capability for testing AI models’ potential for cyber threats
  - DOE/NNSA [Department of Energy/National Nuclear Security Administration] to develop testing capability for AI nuclear/radiological risks including classified and unclassified tests
  - State Department to create strategy for international AI governance norms promoting safety and democratic values

- 150 Days:
  - DOD to evaluate feasibility of AI co-development with allies, including potential partners and testing vehicles
  - National Manager for NSS [National Security Systems] to issue cybersecurity guidance for AI systems to enable interoperability

- 180 Days:
  - CEA [Council of Economic Advisers] to analyze domestic and international AI talent market
  - NEC [National Economic Council] to assess US private sector AI competitive advantages and risks
  - DOE to launch pilot project for federated AI training and inference
  - AISI [AI Safety Institute] to test frontier AI models for potential security threats
  - AISI to issue guidance for testing and managing dual-use AI model risks
  - AISI to develop benchmarks for assessing AI capabilities relevant to national security
  - Department Heads to issue AI governance guidance aligned with Framework
  - NSF [National Science Foundation] to develop best practices for publishing potentially sensitive AI research
  - DOE to evaluate radiological/nuclear implications of frontier AI models

- 210 Days:
  - Working group to provide recommendations to FARC [Federal Acquisition Regulatory Council] for improving AI procurement
  - DOE/DHS/AISI to develop roadmap for evaluating AI models’ potential for chemical/biological threats

- 240 Days:
  - Multiple agencies (DOD, HHS [Health and Human Services], DOE, DHS, NSF) to support AI efforts enhancing biosafety and biosecurity through various technical initiatives

- 270 Days:
  - AISI to submit comprehensive report on AI safety assessments and mitigation efforts
  - DOE to submit assessment on AI nuclear/radiological risks and recommended actions
  - DOE to establish pilot project for chemical/biological threat testing
  - Agency heads to submit detailed reports on AI activities and future plans

- 365 Days:
  - Coordination Group to issue report on consolidation and interoperability of AI efforts across national security systems

- 540 Days:
  - OSTP [Office of Science and Technology Policy], NSC staff, and Office of Pandemic Preparedness to develop guidance for safe in silico biological and chemical research

- Ongoing/Annual Requirements:
  - Department Heads to review and update AI guidance annually
  - AISI to submit annual reports on AI safety assessments
  - DOE to submit annual assessments of nuclear/radiological risks
  - Coordination Group to submit annual reports for 5 years on AI integration
  - Agency heads to submit annual reports for 5 years on AI activities
  - State Department, DOD, DHS to continuously facilitate entry of AI talent
  - NSF to maintain NAIRR [National AI Research Resource] pilot project
  - Agencies to conduct ongoing research on AI safety and security
  - DOD and ODNI to continuously update guidance on AI interoperability
  - AISI to serve as ongoing point of contact for AI safety concerns

New governance structures

The memorandum establishes an AI National Security Coordination Group in which the Chief AI Officers of the major national security agencies are to work together. “We have to be faster in deploying AI in our national security enterprise than America’s rivals are in theirs,” Sullivan emphasizes.

Particular attention is being paid to the safety testing of AI systems. The AI Safety Institute (AISI) is to serve as a central point of contact for the evaluation of AI models. Both civilian and military risks will be examined. “During the early days of the railroads, for example, the establishment of safety standards enabled trains to run faster thanks to increased certainty, confidence, and compatibility,” Sullivan states.

International cooperation and competition

Internationally, the memorandum emphasizes the importance of cooperation with allies. At the same time, it sets clear limits on technology transfer, especially to China.

“We need to balance protecting cutting-edge AI technologies on the one hand, while also promoting AI technology adoption around the world,” explains Sullivan. “Protect and promote. We can and must and are doing both.”