Top 10 Open-Source LLMs in 2025 and Their Use Cases

What if the future of AI wasn’t just about buying the most advanced models, but about collaborating and building on each other’s work?

In 2025, open-source LLMs are proving that AI doesn’t have to be confined behind paywalls.

With powerful, community-driven advancements, these models are accessible to all and ready to be adapted to specific needs.

Join us as we explore the top 10 open-source LLMs that are pushing the boundaries of what’s possible in AI and how they can be leveraged for everything from chatbots to advanced predictive models.


Top 10 Open-Source LLMs in 2025

1. Llama 3 (Meta)

Meta’s Llama 3 is a significant leap forward in their ongoing Llama series.

This third version is designed to tackle some of the toughest challenges in AI, including improved performance on reasoning tasks and better handling of multilingual inputs.

It focuses on understanding the context better, processing complex data with increased accuracy, and optimizing training methods to reduce resource consumption.

Llama 3 improves on its predecessors with added support for domain-specific customization, making it more flexible in catering to business requirements.

Key Features:

- Industry-Leading Performance: Llama 3 provides best-in-class natural language processing capabilities with rich comprehension.

- Scalability: Designed to scale well for large datasets and diverse deployment environments.

- Open-Weight Adaptability: The weights are openly available under Meta’s community license, giving users the freedom to fine-tune and customize the model.

- Advanced Multilingual Support: Llama 3 has support for various languages for a global audience.

- Optimized Efficiency: Efficient processing with reduced computational expense in comparison to other large models.

Use Cases:

- Multilingual Chatbots: Utilized for customer service use cases that need multilingual support.

- Text Summarization: Assists in summarizing long documents into short summaries.

- Machine Translation: Translates content from one language to another efficiently.

- Sentiment Analysis: Utilized for analyzing user sentiment in reviews or social media.

- Personalized Content Creation: Produces customized content for promotional and advertising needs.

2. DeepSeek-R1

DeepSeek-R1 represents a breakthrough among open-source LLMs designed for deep reasoning and problem-solving tasks.

It was developed with a focus on logical deduction and advanced computational tasks, such as code generation, mathematical analysis, and even scientific modeling.

DeepSeek-R1’s ability to process highly technical data makes it a standout in fields that demand precision and analytical power.

Key Features:

- Strong Semantic Search: Supports rich, contextual search functionality.

- Designed for Large-Scale Data: Optimized to process large datasets with ease.

- Customizable Training: Fine-tuning the model for particular industries or use cases is easy.

- Fast Response Time: Rapid retrieval of useful information from vast knowledge bases.

Use Cases:

- Smart Search Engines: Powers sophisticated search capability in websites and databases.

- Data Analytics: Interprets and analyzes large datasets for actionable information.

- Content Recommendation Systems: Suggests articles, products, or services based on user interest.

- Customer Service Automation: Automates customer queries with more precise and context-sensitive responses.

- Predictive Modeling: Aids businesses in predicting trends through data-driven insights.


3. Mistral 7B v2

Mistral 7B v2 focuses on balancing compactness with performance, offering a lightweight solution that doesn’t compromise on its capabilities.

This model’s speed and efficiency make it a good option for real-time scenarios where inference has to be done quickly.

The model performs very well in zero-shot learning, where it is able to provide correct responses without task-specific fine-tuning beforehand.
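To make the zero-shot idea above concrete, here is a minimal sketch of how a zero-shot prompt differs from a few-shot one. The function names and the prompt layout are illustrative assumptions, not a format prescribed by Mistral or any other model; the resulting string would be passed to whatever inference API you use.

```python
def zero_shot_prompt(task: str, text: str) -> str:
    """Build a prompt that states the task but gives no worked examples."""
    return f"{task}\n\nInput: {text}\nOutput:"


def few_shot_prompt(task: str, examples: list[tuple[str, str]], text: str) -> str:
    """Build a prompt that shows labelled examples before the real input."""
    shots = "\n\n".join(f"Input: {x}\nOutput: {y}" for x, y in examples)
    return f"{task}\n\n{shots}\n\nInput: {text}\nOutput:"


# Hypothetical task and review text, used only for illustration.
task = "Classify the sentiment of the review as positive or negative."
review = "The battery died after a day."

print(zero_shot_prompt(task, review))
print("-----")
print(few_shot_prompt(task, [("Great screen, love it.", "positive")], review))
```

In the zero-shot case the model sees only the instruction and the input; the few-shot variant prepends a handful of solved examples, which is the setting the "few-shot learning" feature in the list below refers to.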

Key Features:

- High-Performance NLP: Optimized for high-level NLP tasks such as text generation and question answering.

- Scalable Architecture: Easily scalable for enterprise-level deployment.

- Customizable Outputs: Users can fine-tune responses based on input context.

- Efficient Resource Usage: Designed to provide high performance without excessive computational resources.

- Advanced Few-Shot Learning: Capable of learning from minimal examples to perform various tasks.

Use Cases:

- Content Generation: Automatically generates high-quality articles, blogs, and stories.

- Question Answering: Assists with automated Q&A systems in various industries.

- Summarization Tools: Condenses documents or reports into brief summaries.

- Search Assistance: Improves search engines by understanding the context behind queries.

- Personal Assistant Apps: Powers intelligent virtual assistants for task automation.

4. Falcon 40B

Falcon 40B, developed by the Technology Innovation Institute (TII), performs strongly on a variety of NLP tasks such as language modeling, translation, text generation, and summarization.

Falcon 40B, with 40 billion parameters, is a large model that provides considerable advances in contextual awareness and the capacity to be coherent over longer conversations or documents.

Key Features:

- Massive Scale: With 40 billion parameters, Falcon 40B is a cutting-edge large model for NLP tasks.

- Multi-Task Learning: Supports multiple tasks simultaneously, such as translation and summarization.

- High Precision: Offers highly accurate responses, ideal for business-critical applications.

- Robust Language Understanding: Deep understanding of complex sentence structures and meanings.

- Pre-Trained for Efficiency: Offers pre-trained models for faster deployment.

Use Cases:

- Advanced Chatbots: Used to create highly responsive and intelligent customer support bots.

- Content Creation for Marketing: Automatically generates product descriptions, blog posts, and more.

- Automated Language Translation: Provides high-quality translations for global communication.

- Medical Research: Assists researchers by analyzing and summarizing complex scientific papers.

- Financial Forecasting: Helps in predictive analysis for financial markets based on historical data.

5. Bloom 2

Bloom 2 is the next-generation open-source Bloom model built by the BigScience initiative.

Bloom 2 focuses on open-access AI that performs well across a wide range of tasks while remaining transparent and ethical.

Bloom 2 also shines when it comes to multilingual support, which is why it is widely used in global applications.

Key Features:

- Open Collaboration Model: Emphasizes community-based development for improved access to leading-edge technology.

- Multilingual Ability: Supports different languages, enhancing usability in diverse regions.

- Scalable and Flexible: Can be optimized for particular industries and tasks.

- Energy-Efficient: Engineered for low power consumption at high performance.

- Transparent AI Design: Built with explainability in mind, enabling users to track and comprehend AI decisions.

Use Cases:

- Translation Services: Offers real-time translation for business and educational platforms.

- Cross-Cultural Marketing: Helps brands tailor marketing strategies for different cultural contexts.

- Collaborative Research: Used for collaborative projects involving text analysis and synthesis.

- Voice Assistants: Powers smart devices with multilingual support for varied user needs.

- Intelligent Content Moderation: Helps in moderating user-generated content by identifying harmful content in multiple languages.

6. GPT-J 3.5 (EleutherAI)

GPT-J 3.5, created by EleutherAI, is a highly respected open-source model whose performance is competitive with proprietary models such as GPT-3.

Its emphasis on accessibility and innovation within the open-source community makes it an influential platform for developers and researchers.

GPT-J 3.5 is strongest at producing natural, coherent language, which makes it well suited to creative and conversational applications.

Key Features:

- High Text Generation Quality: Delivers coherent and high-quality long-form text.

- Adaptable to Specific Domains: Can be fine-tuned for niche tasks such as legal or medical writing.

- Open-Source Flexibility: Fully open-source, encouraging community contributions and customizations.

- Efficient for Large-Scale Text: Handles large-scale text generation without overloading systems.

- Advanced NLP Capabilities: Understands context deeply and can generate relevant responses.

Use Cases:

- Content Creation: Ideal for generating blog posts, reports, and even creative writing.

- Chatbots: Powers intelligent customer support bots with conversational AI capabilities.

- Automated Report Generation: Helps businesses in automating the creation of analytical reports.

- E-learning Platforms: Generates learning materials and explanations for online courses.

- Script Writing: Assists in generating scripts for films, TV shows, or video content.

7. Dolly 3.0 (Databricks)

Dolly 3.0 by Databricks is an open-source model that adapts readily to particular business requirements, especially in scenarios where data privacy and customization are essential.

Dolly 3.0 has been tuned to improve data management and contextual awareness.

Key Features:

- Business-Oriented: Tailored for enterprise solutions with a focus on customization.

- Highly Secure: Prioritizes data privacy and compliance, essential for sensitive industries.

- Adaptability: Capable of adapting to different industry-specific needs and goals.

- Fast Data Processing: Designed to handle and process large amounts of business data efficiently.

- Optimized for Analytics: Integrates seamlessly into business intelligence workflows, enhancing data-driven decision-making.

Use Cases:

- Predictive Analytics: Helps businesses forecast trends and optimize strategies based on data insights.

- Custom Chatbots: Provides industry-specific customer support solutions.

- Financial Risk Analysis: Analyzes financial markets and provides risk assessments.

- Supply Chain Optimization: Automates and optimizes logistics and supply chain operations.

- Healthcare Data Analytics: Assists healthcare providers in analyzing patient data and predicting outcomes.

8. Grok AI

Grok AI, developed by Grok Networks, is designed to excel in highly technical environments and is specifically optimized for machine learning operations (MLOps).

It focuses on assisting with model deployment, data pipelines, and model training, making it a useful tool for organizations working with large-scale AI systems.

Key Features:

- MLOps Integration: Strong focus on simplifying the deployment and management of machine learning models.

- Scalability: Efficiently scales across large datasets and diverse infrastructure environments.

- Real-Time Data Processing: Handles real-time data streams, providing immediate insights.

- Advanced Model Training: Facilitates advanced custom training for specific business needs.

- Cloud-Native: Optimized for cloud environments, ensuring flexibility and cost efficiency.

Use Cases:

- Real-Time Fraud Detection: Analyzes transactional data in real-time to detect potential fraud.

- Predictive Maintenance: Predicts equipment failures and maintenance schedules in industries like manufacturing.

- Market Trend Analysis: Helps businesses identify emerging trends and shifts in consumer behavior.

- AI for Automation: Automates routine tasks such as data entry or customer response systems.

- Healthcare Diagnostics: Assists in processing patient data to detect conditions early.

9. Gemma 2.0 Flash (Google)

Gemma 2.0 Flash, built by Google, is an enhanced version of its open-source Gemma LLM with stronger semantic search and multimodal comprehension.

Gemma 2.0 Flash offers more advanced features than its predecessor, including the ability to process both visual and text inputs, closing the gap between media types.

Key Features:

- Multimodal Inputs: Processes both text and images, enabling more comprehensive applications.

- Semantic Understanding: Prioritizes understanding the meaning behind queries and inputs.

- Fast and Efficient: Processes input quickly, making it ideal for real-time applications.

- Lightweight: Optimized for high performance with a minimal computational footprint.

- Advanced Search Capabilities: Offers advanced search functionality based on semantic rather than keyword matching.

Use Cases:

- Content Moderation: Monitors and filters harmful or inappropriate content on social platforms.

- Personalized Marketing: Delivers personalized advertisements and content based on text and images.

- Visual Search Engines: Provides better search results by understanding both text and images.

- Customer Service: Powers support systems that can understand customer queries in both text and image format.

- Interactive Storytelling: Used in creative applications where text and images are combined for immersive experiences.

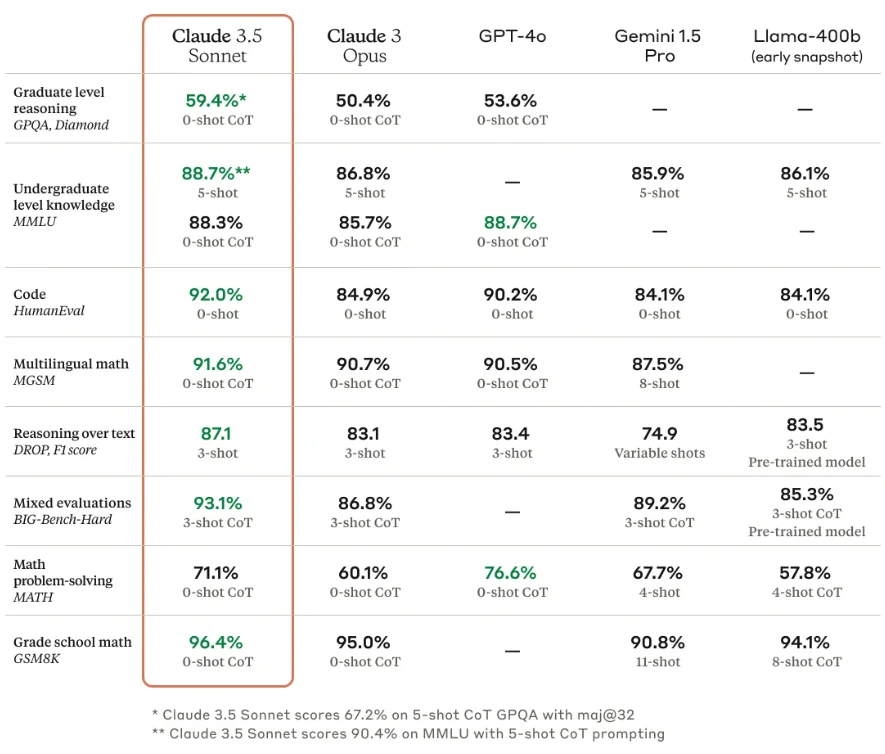

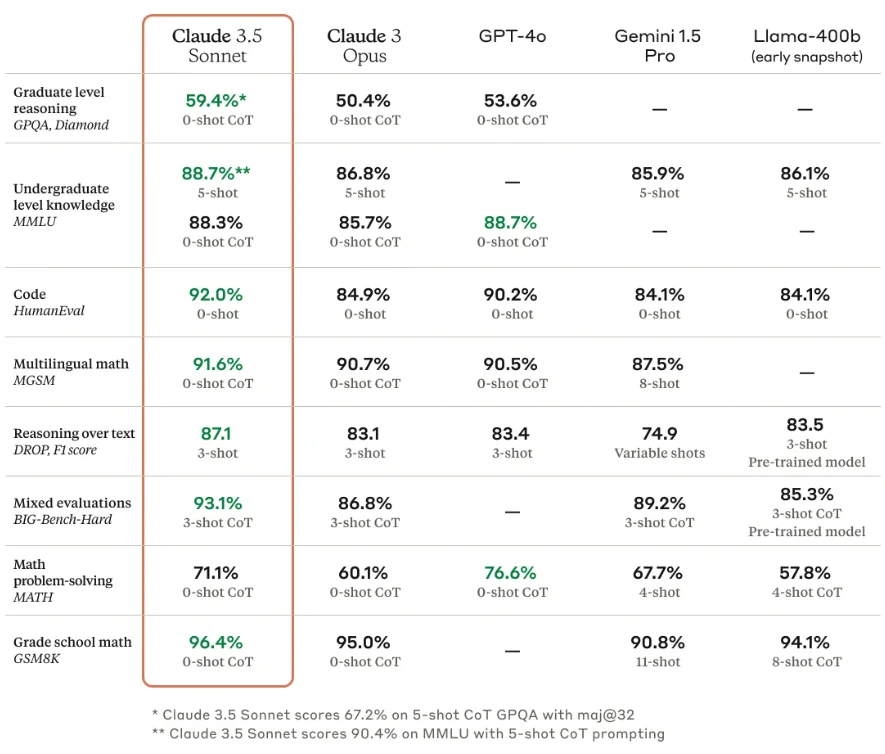

10. Claude 3.5 Sonnet

Claude 3.5 Sonnet, created by Anthropic, is an LLM designed to prioritize safety and ethics in AI. Unlike the other entries on this list, it is a proprietary model offered through Anthropic’s API and apps rather than as open weights.

It emphasizes the secure and responsible application of large language models.

The model is designed to prevent dangerous outputs and to keep its use in line with ethical principles.

Key Features:

- Ethical AI Design: Built to prioritize safety, minimizing harmful outputs and bias.

- Contextual Integrity: Ensures the response aligns with the context, avoiding misleading or irrelevant content.

- Human-AI Collaboration: Encourages safer, more collaborative AI-human interaction.

- Bias Mitigation: Focuses on reducing inherent biases in AI systems.

- Transparency: Clear decision-making process for better accountability in output.

Use Cases:

- Ethical Content Creation: Generates text that adheres to ethical guidelines for safe publishing.

- Legal Document Review: Assists in ensuring legal documents adhere to standards without bias or errors.

- Medical Advice: Provides safe, reliable medical information while ensuring accuracy and safety.

- Social Media Monitoring: Helps monitor for harmful content or behavior on platforms.

- Corporate Compliance: Ensures business practices align with legal and ethical standards by analyzing company operations.


Comparison of Top 10 Open-Source LLMs for 2025: Performance, Data, and Use Cases

| LLM | Performance Benchmarks (Speed, Accuracy, Memory Usage) | Training Data & Model Size | Best Use Cases for Different Domains |
| --- | --- | --- | --- |
| Llama 3 | Speed: Fast processing; Accuracy: High accuracy in multilingual tasks; Memory Usage: Moderate (optimized for efficiency) | Model Size: Large (billions of parameters); Training Data: Diverse multilingual datasets | Business: Customer support chatbots; Education: Text summarization and translation; Research: Sentiment analysis |
| DeepSeek-R1 | Speed: Efficient for large-scale searches; Accuracy: High contextual accuracy; Memory Usage: Moderate (optimized for search tasks) | Model Size: Medium to large; Training Data: Domain-specific knowledge and semantic data | Business: Intelligent search engines, recommendation systems; Research: Data analytics |
| Mistral 7B v2 | Speed: Fast response times for NLP tasks; Accuracy: Excellent for text generation and QA; Memory Usage: Low to moderate | Model Size: 7B parameters; Training Data: Large web corpus and diverse NLP datasets | Business: Automated content generation; Education: Personalized learning materials; Research: Text summarization |
| Falcon 40B | Speed: Optimized for high-performance tasks; Accuracy: Superior accuracy for text analysis; Memory Usage: High (large-scale model) | Model Size: 40B parameters; Training Data: Extensive datasets, focused on large-scale learning | Business: Advanced chatbots and marketing; Education: Translation, intelligent tutoring; Research: Scientific text analysis |
| Bloom 2 | Speed: Quick processing; Accuracy: High precision in multilingual tasks; Memory Usage: Moderate | Model Size: Medium to large; Training Data: Collaborative datasets with multilingual support | Business: Cross-cultural marketing, multilingual content; Education: Language learning, curriculum creation; Research: Collaborative research |
| GPT-J 3.5 (EleutherAI) | Speed: Moderate to fast generation speed; Accuracy: Excellent for natural language generation; Memory Usage: Moderate | Model Size: 6B parameters; Training Data: Diverse internet datasets and conversational data | Business: Content creation, chatbots; Education: E-learning platforms; Research: Scriptwriting, document automation |
| Dolly 3.0 (Databricks) | Speed: Optimized for business environments; Accuracy: High in business-specific contexts; Memory Usage: Moderate | Model Size: Medium; Training Data: Industry-specific data (finance, healthcare) | Business: Predictive analytics, automation; Research: Data analysis in specialized fields like healthcare and finance |
| Grok AI | Speed: High-speed processing for large data; Accuracy: Very accurate for real-time data; Memory Usage: High (optimized for cloud) | Model Size: Large; Training Data: Domain-specific, real-time data sources (financial, health, etc.) | Business: Real-time fraud detection, predictive maintenance; Research: Market trend analysis |
| Gemma 2.0 Flash (Google) | Speed: Fast and efficient for multimodal inputs; Accuracy: Very high for search tasks; Memory Usage: Low to moderate | Model Size: Medium; Training Data: Multimodal data (text + images) | Business: Content moderation, personalized marketing; Education: Interactive learning; Research: Cross-modal research |
| Claude 3.5 Sonnet | Speed: Moderate speed, optimized for ethical tasks; Accuracy: High with ethical guidelines; Memory Usage: Moderate to high | Model Size: Medium to large; Training Data: Data curated for ethical AI principles and safe responses | Business: Ethical content creation, compliance; Education: Safe AI-based learning environments; Research: Bias-free text analysis |
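The memory-usage labels in the table are qualitative. As a rough back-of-the-envelope check, the memory needed just to hold a model's weights is approximately the parameter count multiplied by the bytes used per parameter. The sketch below applies that arithmetic to the parameter counts the table does state (6B, 7B, 40B); the function name and the precision table are assumptions made for illustration, and the figures cover weights only, so activations and the inference cache add to them in practice.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Parameter counts taken from the comparison table above.
for label, params in [("6B", 6e9), ("7B", 7e9), ("40B", 40e9)]:
    fp16 = weight_memory_gb(params, "fp16")
    int4 = weight_memory_gb(params, "int4")
    print(f"{label}: ~{fp16:.1f} GB at fp16, ~{int4:.1f} GB at int4")
```

By this estimate a 7B model needs about 14 GB for fp16 weights while a 40B model needs about 80 GB, which is why the larger model is labelled "High" in the table and why quantization matters for deployment on smaller hardware.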

Criteria for Selecting the Top Open-Source LLMs

1. Performance Benchmarks

Evaluate key performance metrics like accuracy, efficiency, and speed across various tasks such as text generation, translation, summarization, and question answering.

High-performing models should excel in producing coherent, contextually relevant outputs and handle large datasets with minimal latency.
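One way to put this criterion into practice is a small harness that runs the same prompts through each candidate and records accuracy and latency. The following is a minimal sketch under stated assumptions: `generate` is any function that maps a prompt to a text response, `stub_model` is a hypothetical stand-in for a real model call, and exact-match scoring is a deliberately simple metric that would be too strict for open-ended generation.

```python
import time
from statistics import mean
from typing import Callable


def benchmark(generate: Callable[[str], str],
              cases: list[tuple[str, str]]) -> dict[str, float]:
    """Run each (prompt, expected) case and report exact-match accuracy and mean latency."""
    latencies = []
    correct = 0
    for prompt, expected in cases:
        start = time.perf_counter()
        output = generate(prompt)
        latencies.append(time.perf_counter() - start)
        # Case-insensitive exact match; swap in a task-appropriate metric as needed.
        if output.strip().lower() == expected.strip().lower():
            correct += 1
    return {"accuracy": correct / len(cases), "mean_latency_s": mean(latencies)}


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; replace with your inference client."""
    answers = {"Capital of France?": "Paris", "2 + 2 =": "4"}
    return answers.get(prompt, "unknown")


cases = [
    ("Capital of France?", "paris"),
    ("2 + 2 =", "4"),
    ("Largest planet?", "Jupiter"),
]
print(benchmark(stub_model, cases))
```

Running the same `cases` against several models gives directly comparable numbers, which is more informative than relying on qualitative labels such as "fast" or "high accuracy".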

2. Ease of Fine-tuning and Deployment

The model should allow easy fine-tuning for specific domains or tasks without requiring significant computational resources.

Pre-trained models should be easy to adapt to unique datasets or use cases, and deployment should be straightforward, whether on cloud platforms, local servers, or edge devices.

3. Licensing and Usage Restrictions

It’s crucial to check the model’s license (e.g., Apache, MIT, GPL) to ensure compatibility with your intended use, whether for research, commercial purposes, or integration into proprietary products.

Some open-source LLMs may come with usage restrictions, such as prohibiting certain types of content generation or redistribution.

4. Real-World Use and Adoption

Think about how extensively the model is applied in the real world.

Models with extensive real-world use cases (e.g., customer support chatbots, content generation, healthcare) tend to have strong community backing and a track record of real-world performance.

Large-scale adoption and success stories suggest that the model has been tested and tuned for practical, large-scale deployment.
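The four criteria above can be combined into a simple weighted score when shortlisting models. The sketch below is only an illustration: the weights, the 0 to 10 scores, and the names `model_a` and `model_b` are hypothetical placeholders, not ratings of any model in this article, and you would substitute your own assessments.

```python
# Hypothetical weights for the four selection criteria; they sum to 1.0.
WEIGHTS = {"performance": 0.4, "fine_tuning": 0.2, "licensing": 0.2, "adoption": 0.2}


def weighted_score(scores: dict[str, float],
                   weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion scores (0-10) into a single weighted total."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


# Placeholder scores for two unnamed candidates, for illustration only.
candidates = {
    "model_a": {"performance": 9, "fine_tuning": 6, "licensing": 5, "adoption": 8},
    "model_b": {"performance": 7, "fine_tuning": 9, "licensing": 9, "adoption": 6},
}

ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(candidates[name]):.1f}")
```

With these placeholder numbers, `model_b` edges out `model_a` despite lower raw performance, because it scores better on fine-tuning and licensing. Adjusting the weights to match your priorities changes the ranking accordingly.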


Conclusion

Open-source LLMs offer a wealth of opportunities for businesses, researchers, and developers alike. Instead of relying on closed-door models, today’s AI enthusiasts can collaborate, customize, and innovate using community-driven technologies like Llama 3, DeepSeek-R1, Mistral 7B v2, and beyond.

If you’re ready to harness these AI breakthroughs for your own projects, whether that means building advanced chatbots, automating data analytics, or designing intelligent virtual assistants, our AI courses have you covered. Learn the fundamentals, integrate these models into real-world applications, and become a leader in the next wave of AI innovation.
