Simple Guide to Training Your Team to Use ChatGPT Effectively

Many workplace teams now use ChatGPT because it helps them finish writing projects quickly, think through complex issues, and handle repetitive tasks with ease. AI is now used regularly to write emails, take meeting notes, and generate new ideas.

Yet, as with any powerful tool, how we use it matters. Without the necessary safeguards, teams can expose private data, rely on AI output without checking it, or use AI in ways that violate company policy or ethics. As a result, every organization must ensure its teams are using ChatGPT responsibly.

In this article, we’ll explain why responsible use is so essential, which principles you should follow, what a training program should cover, how to establish guidelines, and which resources help your staff use ChatGPT safely.

Why Does the Right Use of ChatGPT Matter?

Knowing the limitations of ChatGPT is essential before you begin using it.

Protecting personal data is a major concern. If you enter sensitive information into ChatGPT, it may leave the company by accident.

Then there’s hallucination, when the AI presents information as fact that turns out to be false. If you rush, you may end up relying on wrong data.

Another problem is bias. ChatGPT was trained on a large amount of internet data, so it may reflect or amplify the biases in that data. Over-reliance is a risk too: after using the tool for a while, people may depend on it so much that they skip critical thinking and judgment.

These concerns also raise regulatory and ethical questions. Mistakes involving privacy, copyright, or related compliance requirements can create both legal and reputational problems for the company.

Irresponsible use of AI can break trust, create wider problems, and lead to expensive mistakes. Teams therefore need to be informed and guided on how to use ChatGPT safely and appropriately.

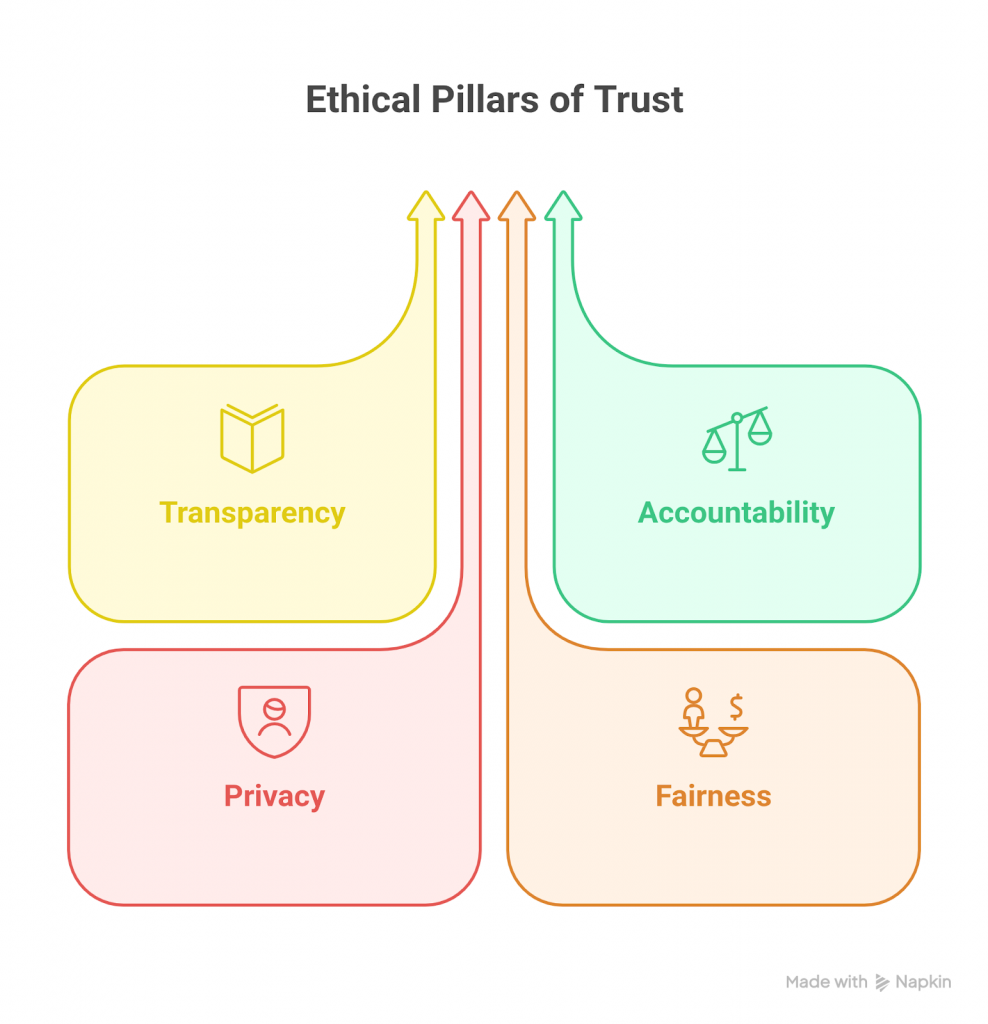

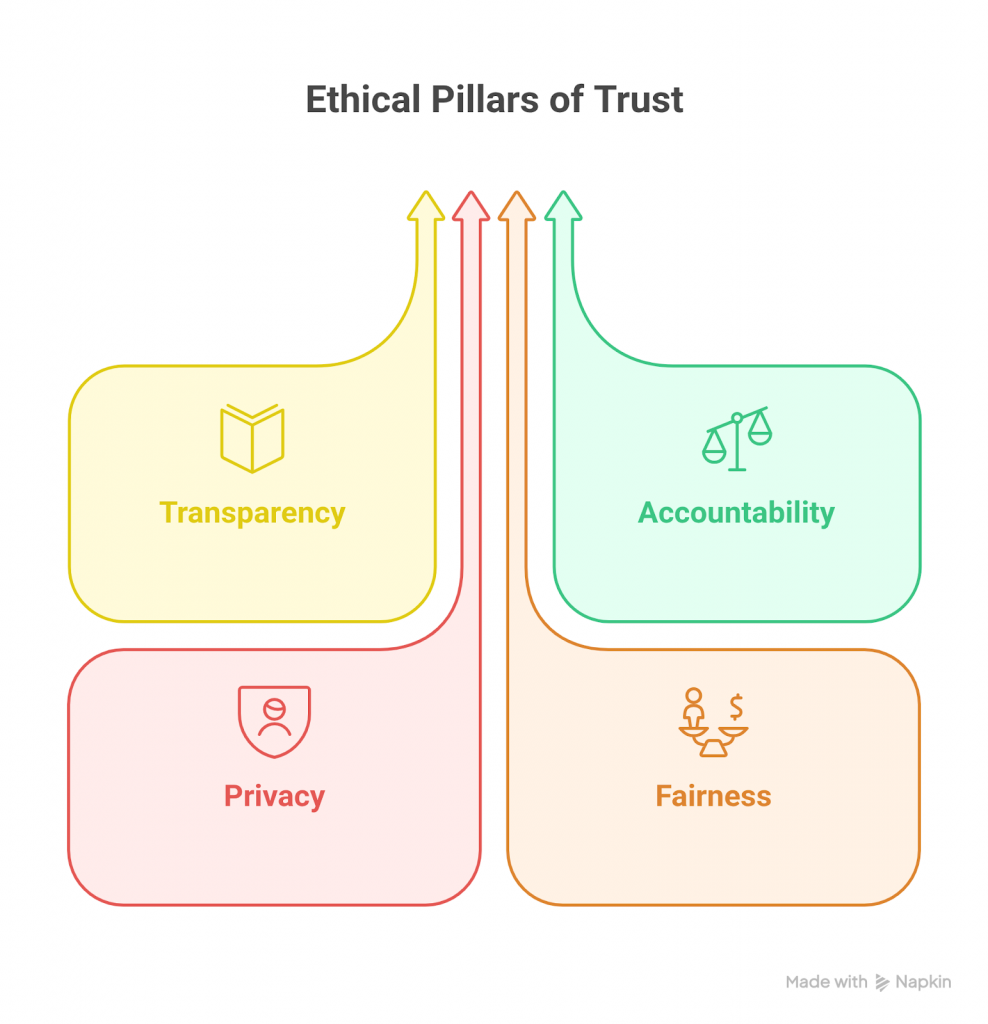

Key Principles of Responsible AI Usage

Using ChatGPT well means being responsible and mindful of what it can and cannot do. It begins with a few core principles.

First is transparency. If you’re using AI to help create content, whether it’s a customer email, a report, or even internal messaging, it’s essential to be upfront about it. People deserve to know when they’re interacting with something generated or assisted by a machine.

Then comes accountability. ChatGPT can be helpful, but it shouldn’t replace human judgment. Anything the tool produces should be reviewed, fact-checked, and ultimately approved by someone who understands the content’s context and consequences.

Privacy and data security are also non-negotiable. Teams should avoid putting sensitive information into prompts, like customer data, internal plans, or confidential files. Even if it seems harmless, once that data is out, you can’t always control where it goes.

Finally, keep fairness and bias in mind. The model can sometimes reflect hidden biases from the data it was trained on. That means being thoughtful about tone, language, and assumptions, and being willing to push back or rework results when something doesn’t feel right.

These principles set the foundation for safe, respectful, and innovative use.

Training Essentials for Teams

Understanding the Tool

Before using ChatGPT, people should know its strengths and weaknesses. ChatGPT can draft text, summarize documents, generate ideas, and support the thinking process.

However, it doesn’t understand things the way people do, and it is sometimes wrong. It may not know about current events, and it is not always aware when it has made a mistake.

Training should emphasize that ChatGPT is a writing aid, not a source of definitive answers or a decision-maker. It should supplement human judgment, not replace it.

When helping teams understand what ChatGPT can and can’t do, it’s useful to offer structured learning. For example, this free course on ChatGPT for Business Communication gives professionals a clear foundation on using the tool effectively in everyday workplace scenarios.

Promoting Critical Thinking

Teams shouldn’t accept AI output at face value. A confident statement is not necessarily a true one. Encourage teams to take their time and read the output carefully.

Does the content make sense? Are the facts correct? Is the tone appropriate? Verification matters, especially when using AI-generated content in customer communications, public materials, or anything that carries risk.

Treat ChatGPT’s responses as drafts or starting points, not final answers. Teams should feel comfortable challenging the results, editing them, or tossing them out if they don’t meet the mark.

Safe Input Practices

Good output starts with safe input. Teams should never enter private, personal, or sensitive information into ChatGPT. That includes client names, employee data, financial details, passwords, or internal documents.

A good rule of thumb: if you wouldn’t email it to someone outside your organization, don’t paste it into ChatGPT. Use anonymized or general data when experimenting or prompting.
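
As a rough illustration of anonymizing input before it reaches ChatGPT, the sketch below swaps email addresses and phone-like numbers for placeholders. The `PATTERNS` table and the `redact` helper are hypothetical names invented for this example, and the two patterns are far from complete; a real filter would need rules tailored to your organization’s data.

```python
import re

# Hypothetical patterns for illustration only; a real deployment would need a
# broader, organization-specific list (names, account numbers, and so on).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def redact(text: str) -> str:
    """Replace each match of a known pattern with a placeholder like [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

prompt = "Draft a reply to jane.doe@example.com, phone +1 415 555 0100."
print(redact(prompt))
```

A filter like this catches only obvious patterns, so it complements the rule of thumb above rather than replacing it.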

It’s also smart to remind teams that while ChatGPT can simulate human conversation, it’s still a machine and shouldn’t be treated like a secure, private workspace.

Use Case Guidelines

Clear boundaries go a long way in building trust. Teams should know what ChatGPT can be used for and where the line is. Approved use cases include writing outlines, drafting basic emails, or summarizing non-sensitive information.

Prohibited uses could include generating legal advice, replacing regulated communications, or handling confidential client data. A simple list of do’s and don’ts can ensure everyone understands how to use the tool safely.

Ensure that the guidelines are made available to all staff and that you update them whenever your company’s needs change.
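
One way to make such a list easy to consult is to keep it as plain data. The sketch below is only an illustration: the `APPROVED` and `PROHIBITED` sets reuse the examples from this section, and `check_use_case` is a hypothetical helper, not part of any existing tool. Anything not on either list is routed to a human for a decision.

```python
# Hypothetical policy lists, taken from the examples in this section.
APPROVED = {
    "writing outlines",
    "drafting basic emails",
    "summarizing non-sensitive information",
}
PROHIBITED = {
    "generating legal advice",
    "replacing regulated communications",
    "handling confidential client data",
}

def check_use_case(use_case: str) -> str:
    """Classify a use case as approved, prohibited, or needing human review."""
    key = use_case.strip().lower()
    if key in PROHIBITED:
        return "prohibited"
    if key in APPROVED:
        return "approved"
    # Unlisted use cases are not assumed safe; someone should decide.
    return "needs review"

print(check_use_case("Drafting basic emails"))
print(check_use_case("Generating legal advice"))
print(check_use_case("Translating a press release"))
```

Keeping the lists in one place also makes them simple to update when the company’s needs change.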

Developing Internal Guidelines and Policies

Organizations need more than good intentions to use ChatGPT responsibly at scale. They need clear, practical guidelines everyone can follow.

- Start by developing contextual rules that reflect your industry, data sensitivity, and internal workflows.

For example, a marketing team might use ChatGPT to draft blog posts, while a legal team should avoid using it for contract language. The rules should match the risks and realities of each department.

- Consistency is key. Without it, teams may develop their own informal practices, some safe, others not.

A shared set of expectations helps keep everyone on the same page and reduces the risk of misuse. This can include naming approved tools, defining acceptable use cases, and flagging high-risk scenarios.

- It also helps to create simple, repeatable templates and examples. Show employees what a safe prompt looks like. Outline when content needs to be reviewed by a manager or the compliance team.

For instance: “Use AI for brainstorming, but all customer-facing copy must be reviewed before publishing.”

- When policies are clear and easy to apply, they’re more likely to be followed. These internal guardrails turn responsible AI use from a concept into a consistent, day-to-day practice.
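
To make the idea of templates and review rules concrete, here is a minimal sketch. `SAFE_PROMPT` and `needs_review` are hypothetical names made up for this example, and the rule encoded is the sample policy quoted above: AI-assisted copy that is customer-facing must be reviewed before publishing.

```python
from string import Template

# Hypothetical prompt template: placeholders take general topics only, and
# the wording reminds the model not to produce personal details.
SAFE_PROMPT = Template(
    "Write a $tone draft about $topic for internal brainstorming. "
    "Do not include names, account numbers, or other personal details."
)

def needs_review(audience: str, ai_assisted: bool) -> bool:
    """Return True when AI-assisted copy is customer-facing and must be reviewed."""
    return ai_assisted and audience == "customer-facing"

print(SAFE_PROMPT.substitute(tone="friendly", topic="our new onboarding guide"))
print(needs_review("customer-facing", ai_assisted=True))
print(needs_review("internal", ai_assisted=True))
```

Your own policy may call for review in more situations; the point is that a rule written this plainly is easy to apply the same way every time.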

For those shaping internal AI use policies or training frameworks, deeper learning helps. Programs like the AI & Machine Learning Program by Great Lakes offer essential insights into how these tools function, helping leaders set realistic, secure standards for use.

Continuous Learning and Improvement

Technology doesn’t stand still, nor should your team’s approach to using tools like ChatGPT. As new features roll out or the company’s needs shift, it’s essential to keep learning and adjusting.

Regular learning opportunities help employees keep pace with AI changes and improve their skills. Courses like ChatGPT for Working Professionals are great for this—they’re designed to fit around busy schedules while offering practical, hands-on applications.

Make space for regular check-ins. Ask teams what’s working, where they’re running into trouble, and what could be improved. These insights can help you fine-tune training, update guidelines, or catch blind spots early.

Occasional spot checks on how the tool is used, nothing heavy-handed, can also help surface issues before they become real problems.

Equally valuable is encouraging teams to swap tips for using ChatGPT. A simple Slack thread or lunch-and-learn can go a long way. When people share how they’re using ChatGPT well, others benefit, and the overall quality of work improves.

This isn’t about mastering the tool once and forgetting it. It’s about keeping the conversation open, staying curious, and helping everyone improve over time.

Tools and Resources

Giving teams the right tools makes responsible use much easier.

- Start with training modules that walk through do’s and don’ts, paired with easy-to-read internal documentation or wikis for quick reference.

If your organization uses monitoring tools, these can help track usage patterns and flag potential risks.

- OpenAI and other providers also offer policy templates, prompt guidelines, and safety resources that can be adapted to fit your needs.

Point teams to these materials as part of onboarding or regular training. When support is easy to access, people are far more likely to use the tool thoughtfully and within safe boundaries.

To support learning, consider pointing teams to well-designed courses or internal upskilling options. Programs like the Generative AI course can deepen understanding, especially for roles that are shaping internal policies or workflows around AI.

For those looking for ongoing, flexible access to professional upskilling, we’ve recently launched Academy Pro, a subscription plan that unlocks all our premium courses for just ₹799/month.

With this, learners no longer need to purchase individual courses, making it easier and more affordable for teams to continuously build their AI capabilities and stay updated with the latest responsible usage practices.

Conclusion

ChatGPT and similar tools are becoming part of the everyday toolkit at work. But like any tool, how it’s used makes all the difference. With a bit of structure and clear training, teams can use it well without risking sensitive data, reputation, or trust.

Leaders have an important role in making that happen. Investing in training and setting down some simple, practical guidelines helps everyone stay on the same page.

If you haven’t already, now’s a great time to review or create internal policies. A few thoughtful steps today can save a lot of trouble down the road.
