Rednote releases its first open-source LLM with a Mixture-of-Experts architecture

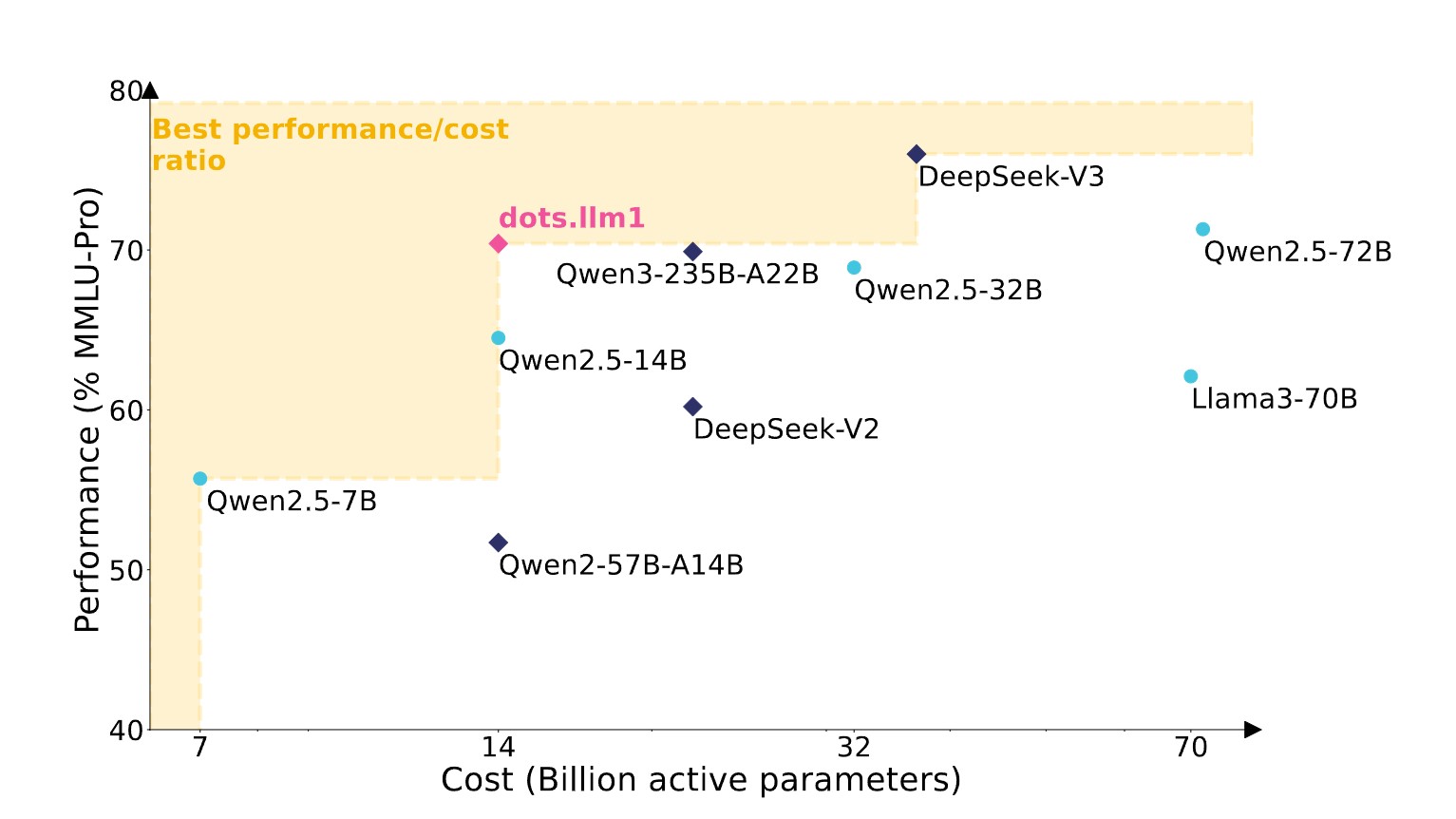

Social media company Rednote has released its first open-source large language model. The Mixture-of-Experts (MoE) system, called dots.llm1, is designed to match the performance of competing models at a fraction of the cost.

According to Rednote’s technical report, dots.llm1 activates 14 billion of its 142 billion total parameters for each token. The MoE architecture splits the model into 128 specialized expert modules, of which a router selects the six highest-scoring for each token; two additional shared experts are always active. This selective approach is meant to save compute resources without sacrificing quality.
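A minimal sketch of this kind of top-k routing is shown here: 128 routed experts, the six highest-scoring ones chosen per token, and two shared experts that always run. The experts are toy scalar functions, and the gating (softmax over router scores, with the selected weights renormalized to sum to 1) is an assumption; the article does not describe dots.llm1's gating function, and the names `route` and `moe_layer` are hypothetical.

```python
import math
import random

NUM_ROUTED = 128  # routed experts, per the article
TOP_K = 6         # routed experts activated per token, per the article
# The article also describes two always-on shared experts (passed in below).


def softmax(xs):
    """Numerically stable softmax over a list of router scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits, k=TOP_K):
    """Return (expert_index, gate_weight) pairs for the k highest-scoring experts.

    Renormalizing the selected gates to sum to 1 is an assumed convention.
    """
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    selected_mass = sum(probs[i] for i in top)
    return [(i, probs[i] / selected_mass) for i in top]


def moe_layer(x, routed_experts, shared_experts, router_logits):
    """Output for one token: all shared experts plus the gated top-k routed experts."""
    out = sum(expert(x) for expert in shared_experts)
    for idx, weight in route(router_logits):
        out += weight * routed_experts[idx](x)
    return out


# Usage with toy experts (hypothetical): routed expert i scales its input by i.
random.seed(0)
toy_routed = [lambda x, a=i: a * x for i in range(NUM_ROUTED)]
toy_shared = [lambda x: x, lambda x: 2 * x]
toy_logits = [random.gauss(0.0, 1.0) for _ in range(NUM_ROUTED)]
print(route(toy_logits))                                  # six (index, weight) pairs
print(moe_layer(1.0, toy_routed, toy_shared, toy_logits))
```

Only 8 of the 130 expert modules run for any given token, which is where the compute savings come from; the 14-billion active-parameter figure also includes components outside the experts, such as attention, so it is not simply 8/130 of the total.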

Rednote claims significant efficiency gains. Training dots.llm1 on one trillion tokens required just 130,000 GPU hours, compared to 340,000 for Qwen2.5-72B. The full pre-training run took 1.46 million GPU hours for dots.llm1, while Qwen2.5-72B needed 6.12 million, roughly four times as many. Despite the smaller compute budget, Rednote says the two models deliver similar results.
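The headline ratios can be checked from the quoted GPU-hour figures alone. Dividing each model's full pre-training hours by its per-trillion-token cost also suggests why the total gap is wider than the per-token gap, assuming both sets of figures cover the same pre-training stage: the implied corpus is about 11.2 trillion tokens for dots.llm1, matching the training set Rednote reports, versus about 18 trillion for Qwen2.5-72B.

```python
# GPU-hour figures as quoted in the article (from Rednote's technical report).
GPU_HOURS_PER_TRILLION = {"dots.llm1": 130_000, "Qwen2.5-72B": 340_000}
FULL_PRETRAIN_GPU_HOURS = {"dots.llm1": 1_460_000, "Qwen2.5-72B": 6_120_000}


def ratio(table):
    """How many times more GPU hours Qwen2.5-72B used than dots.llm1."""
    return table["Qwen2.5-72B"] / table["dots.llm1"]


def implied_tokens_trillions(model):
    """Full pre-training GPU hours divided by the per-trillion-token cost."""
    return FULL_PRETRAIN_GPU_HOURS[model] / GPU_HOURS_PER_TRILLION[model]


print(f"Per-trillion-token ratio: {ratio(GPU_HOURS_PER_TRILLION):.2f}x")   # 2.62x
print(f"Full pre-training ratio:  {ratio(FULL_PRETRAIN_GPU_HOURS):.2f}x")  # 4.19x
print(f"Implied dots.llm1 tokens:   {implied_tokens_trillions('dots.llm1'):.1f}T")    # 11.2T
print(f"Implied Qwen2.5-72B tokens: {implied_tokens_trillions('Qwen2.5-72B'):.1f}T")  # 18.0T
```

Read this way, the roughly fourfold total difference combines a per-token cost gap of about 2.6x with a larger training corpus for Qwen2.5-72B.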

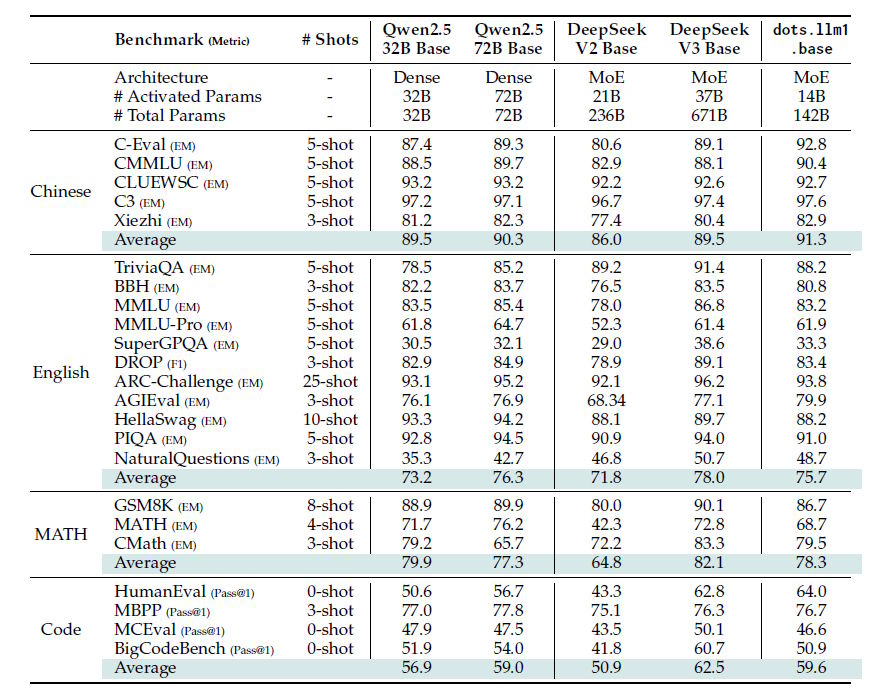

Benchmarks show dots.llm1 performs especially well on Chinese language tasks. In tests like C-Eval (which measures comprehensive Chinese knowledge) and CMMLU (a Chinese variant of MMLU), the model beats both Qwen2.5-72B and Deepseek-V3.


On English-language benchmarks, dots.llm1 trails the top competitors slightly: on MMLU and the more challenging MMLU-Pro, which test general knowledge and reasoning, it scores a little below Qwen2.5-72B.

For math, dots.llm1 posts solid results but typically trails the largest models. Its code generation, however, is a standout: on HumanEval, a standard programming benchmark, dots.llm1 beats Qwen2.5-72B, and holds its own or comes close on other coding tasks.

Training data: real, not synthetic

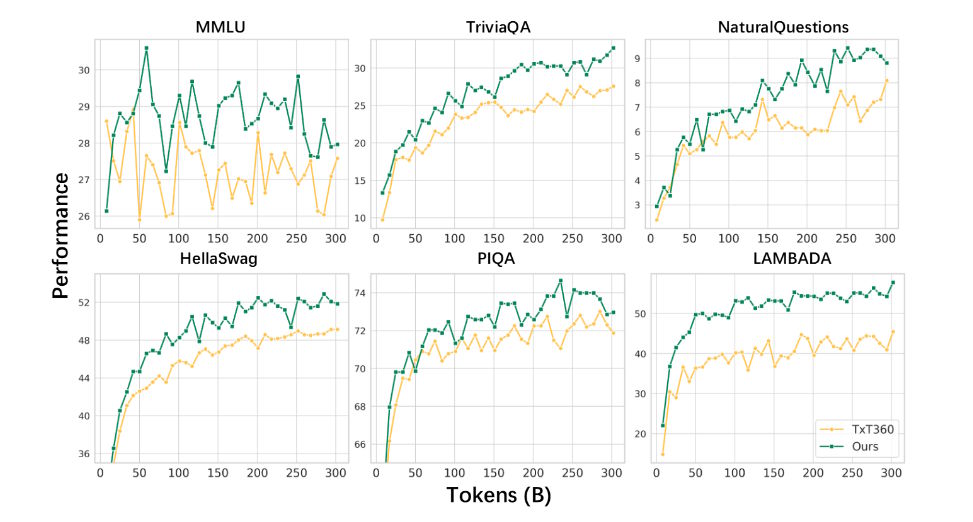

Rednote trained the model on 11.2 trillion high-quality tokens, using only real internet text and no synthetic data. The data pipeline follows three steps: document preparation, rule-based filtering, and model-based processing. Two innovations stand out: a system that strips out distracting website elements like ads and navigation bars, and automated content categorization.
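The three-stage flow can be sketched as a chain of filters. Everything concrete here is hypothetical: the regex is only a stand-in for Rednote's clutter-removal system, whose design the article does not describe; the length and letter-ratio thresholds are invented for illustration; and the model-based stage is represented by a pluggable `quality` scoring function rather than any real classifier.

```python
import re
from typing import Callable, Iterable, Iterator

# Hypothetical pattern for navigation/ad lines; a stand-in, not Rednote's system.
CLUTTER = re.compile(r"^(home|login|sign up|advertisement|next page|share)\b", re.IGNORECASE)


def prepare(raw: str) -> str:
    """Step 1 (document preparation): collapse whitespace and drop empty lines."""
    lines = (" ".join(line.split()) for line in raw.splitlines())
    return "\n".join(line for line in lines if line)


def strip_clutter(doc: str) -> str:
    """Remove short lines that look like navigation bars or ad labels."""
    kept = [
        line for line in doc.splitlines()
        if not (len(line.split()) <= 4 and CLUTTER.match(line))
    ]
    return "\n".join(kept)


def passes_rules(doc: str, min_words: int = 20, min_alpha_ratio: float = 0.6) -> bool:
    """Step 2 (rule-based filtering): hypothetical length and letter-ratio checks."""
    if len(doc.split()) < min_words:
        return False
    alpha = sum(ch.isalpha() for ch in doc)
    return alpha / max(len(doc), 1) > min_alpha_ratio


def pipeline(
    docs: Iterable[str], quality: Callable[[str], float], threshold: float = 0.5
) -> Iterator[str]:
    """Step 3 (model-based processing): keep documents the scorer rates highly enough."""
    for raw in docs:
        doc = strip_clutter(prepare(raw))
        if passes_rules(doc) and quality(doc) >= threshold:
            yield doc
```

Passing the scorer in as a function keeps the rule-based stages cheap and deterministic, so only documents that survive them reach the more expensive model-based step.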

The company built a 200-category classifier to optimize the mix of training data. This allowed them to increase the share of factual and knowledge-based content (like encyclopedia entries and science articles) while reducing fiction and highly structured web pages such as product listings.
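Once every document carries a category label, adjusting the mix can be as simple as applying per-category sampling multipliers. This is a minimal sketch: the category names and multiplier values are hypothetical, since the article says only that knowledge-heavy content was up-weighted and fiction and structured pages such as product listings were down-weighted.

```python
import random
from collections import Counter

# Hypothetical multipliers; categories not listed keep a weight of 1.0.
CATEGORY_WEIGHTS = {"encyclopedia": 2.0, "science": 2.0, "fiction": 0.5, "product_listing": 0.2}


def reweighted_mix(labels, weights=CATEGORY_WEIGHTS, default=1.0):
    """Each category's share of the training mix after applying multipliers."""
    counts = Counter(labels)
    scaled = {cat: n * weights.get(cat, default) for cat, n in counts.items()}
    total = sum(scaled.values())
    return {cat: value / total for cat, value in scaled.items()}


def sample_indices(labels, k, weights=CATEGORY_WEIGHTS, default=1.0, seed=0):
    """Draw k document indices with probability proportional to their category weight."""
    rng = random.Random(seed)
    doc_weights = [weights.get(cat, default) for cat in labels]
    return rng.choices(range(len(labels)), weights=doc_weights, k=k)


labels = ["encyclopedia"] * 10 + ["fiction"] * 40 + ["product_listing"] * 50
print(reweighted_mix(labels))
print(sample_indices(labels, k=5))
```

With these made-up multipliers, a corpus split 10/40/50 across encyclopedia, fiction, and product listings becomes a 40/40/20 training mix.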

Open source and global ambitions

Rednote is releasing intermediate checkpoints after every trillion tokens of training, giving the research community insight into large model training dynamics. The models are available on Hugging Face under the Apache 2.0 license, with source code on GitHub.


With 300 million monthly users, Rednote is jumping into a crowded Chinese AI market led by companies like Alibaba, Baidu, Tencent, Bytedance, and the upstart Deepseek. The new model comes from Rednote’s Humane Intelligence Lab, which spun out of the company’s AI team and is now hiring more researchers with backgrounds in the humanities.

Rednote is already piloting an AI research assistant called Diandian on its platform, powered by its own model.

The social media app briefly made international headlines earlier this year as a possible refuge for US users during the threatened TikTok ban. When the ban was put on hold, interest outside China faded.

Still, Rednote opened its first office outside mainland China in Hong Kong on June 7 and has plans for further international growth. According to Bloomberg, its valuation hit $26 billion this year, surpassing its pandemic-era peak, with an IPO expected later in 2025.
