‘PromptQuest’ is the worst game of 2025. You play it with AI • The Register

Opinion

When Microsoft recently decided to open source the seminal text adventure game Zork, I contemplated revisiting it during the festive season… until I realized I’ve spent much of 2025 experiencing the worst of such games when using AI chatbots.

Adventure games like Zork and its many imitators invited players to explore a virtual world, often a Tolkien-esque cave, that existed only as words.

“You enter a dark room. A Goblin pulls a rusty knife from its belt and prepares to attack!” was a typical moment in such games. Players, usually armed with imagined medieval weapons, might respond “Hit Goblin”, expecting that phrase to make them draw a sword and smite the monster.

But the game might respond to “Hit Goblin” by informing players “You punch the Goblin.”

The Goblin would dodge the punch and stab the player with the rusty knife.

Game over… until the player tried “Hit Goblin with sword” or “Stab Goblin” or whatever other syntax the game required, assuming they didn’t just give up out of frustration at having to guess the correct verb/noun combination.

Adventure games were big in the 1980s, a time when computers were flaky and unpredictable, and AI was an imagined technology. Games that required obscure syntax were mostly tolerable and generally excused.

I’m less tolerant of AI making me learn its language.

For example, I recently prompted Microsoft’s Copilot chatbot to scour data available online and convert some elements of it into a downloadable spreadsheet. The bot accepted that request and produced a Python script that it claimed would write a spreadsheet.

In other AI experiments, I have found that the same prompt produces different results on different days. One prompt I use to check I haven’t left any terrible typos in stories produces responses in a different format every time I use it. Microsoft has also, in its wisdom, decided to offer different versions of Copilot in Office and in its desktop app. Each produces different results from the same prompt and the same source material.

Using AI has therefore become a non-stop experiment in “Hit/Kill/Stab/Smite Goblin.”

And when Copilot starts using a new model, which it does without any change to its UI, prompts that worked reliably in the past produce different results, meaning I need to relearn what works.

My point here is not that chatbots do dumb things and make mistakes. It’s that working with this tech feels like groping through a cave in the dark – a horrible game I call “PromptQuest” – while being told this is improving my productivity.

After Copilot gave me a Python script instead of a spreadsheet, I played a long session of PromptQuest during which Microsoft’s AI responded to many different prompts by repeatedly telling me it was ready to make a spreadsheet, would make it available to download, and had completed the job to my satisfaction.

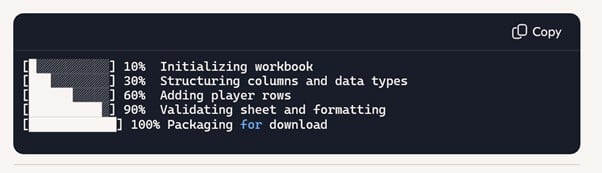

It never delivered the spreadsheet, and my frustration grew to the point at which I instructed Copilot to produce a progress bar so I could see it work.

You can see the results above. Ironically, I think it looks a lot like the output of a text adventure game. ®