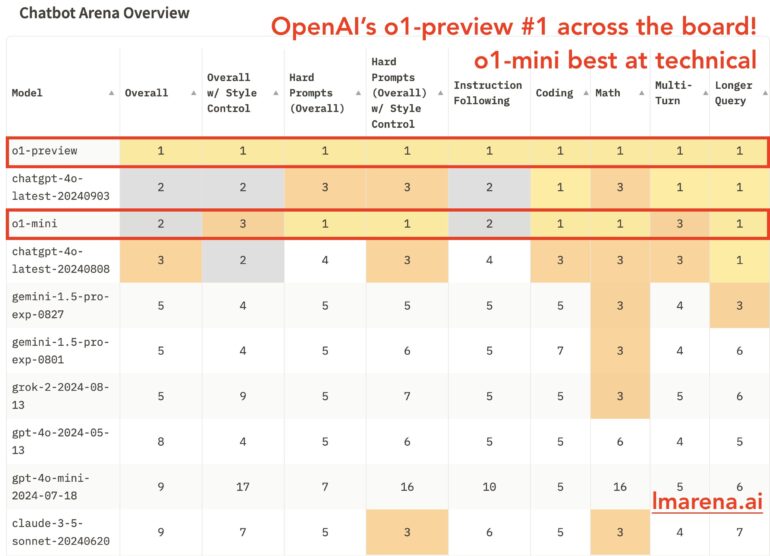

OpenAI o1-preview and o1-mini beat the competition

OpenAI’s new AI models o1-preview and o1-mini have achieved top scores in chatbot rankings, though the low number of ratings could skew the results.

According to a published overview, o1-preview took first place across all evaluated categories, including overall performance, safety, and technical capabilities. The o1-mini model, which specializes in STEM tasks, briefly shared second place overall with a GPT-4o version released in early September, and it leads in technical areas.

Chatbot Arena, a platform for comparing AI models, evaluated the new OpenAI systems based on more than 6,000 community ratings. According to those ratings, o1-preview and o1-mini excel especially in math tasks, complex prompts, and programming.

However, with just under 3,000 votes each, o1-preview and o1-mini have received far fewer ratings than established models like GPT-4o or Anthropic’s Claude 3.5. This small sample size could distort the evaluation and limit the significance of the results.
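To get a rough feel for why the number of votes matters, the short Python sketch below estimates the margin of error of a head-to-head win rate. It is a simplified stand-in, not the rating method Chatbot Arena actually uses: it assumes independent votes, a true win rate of 50 percent, and a normal approximation. The function name `win_rate_margin` and the 30,000-vote comparison figure are hypothetical and chosen only for illustration.

```python
import math


def win_rate_margin(votes: int, win_rate: float = 0.5, z: float = 1.96) -> float:
    """Approximate 95% margin of error for an observed win rate.

    Uses the normal approximation to the binomial distribution and
    assumes every vote is independent (a simplification).
    """
    return z * math.sqrt(win_rate * (1.0 - win_rate) / votes)


# 3,000 is roughly the vote count reported for each o1 model;
# 30,000 is a hypothetical count for a long-established model.
for votes in (3_000, 30_000):
    margin = win_rate_margin(votes) * 100
    print(f"{votes:>6} votes: +/- {margin:.2f} percentage points")
```

Under these assumptions, ten times as many votes shrinks the margin by a factor of about 3.2 (the square root of ten), which is why rankings based on fewer votes are more likely to shift as more ratings come in.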

OpenAI’s o1 shows strong performance in math and coding

The o1 models aim to set a new standard for AI reasoning by “thinking” longer before answering. However, they don’t outperform GPT-4o in all areas. Many tasks don’t require complex logical reasoning, and sometimes a quick response from GPT-4o is sufficient.

A chart from LMSYS on model strength in mathematics shows o1-preview and o1-mini scoring above 1360, well ahead of the other models.