Google’s Gemini AI inches closer to becoming a virtual agent with multi-app integration

Google is rolling out several updates to its Gemini AI assistant for Android, focusing on how it handles multimedia, works with other apps, and becomes more accessible.

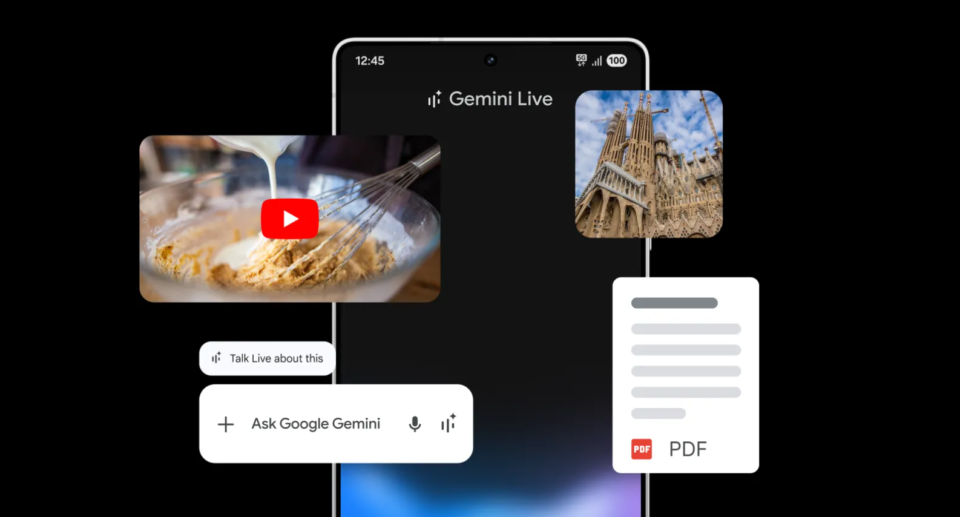

The biggest change comes to Gemini Live, which now lets users include images, files, and YouTube videos in their conversations with the chatbot. Users can also ask Gemini to analyze photos and provide feedback, though this feature is currently limited to Samsung’s Galaxy S24 and S25 phones and Google’s Pixel 9 devices. Google says other Android phones will get these features in the coming weeks.


In the months ahead, Google plans to introduce Project Astra, bringing screen sharing and live video streaming capabilities to Gemini, similar to what OpenAI already offers with ChatGPT’s live video support. These features will debut on the Gemini Android app and Samsung Galaxy S25.


Gemini gets more versatile

Gemini now works with a wider range of apps through what Google calls “extensions.” While it already connected to services like YouTube, Google Maps, Gmail, and Spotify, the assistant can now control Samsung apps on the Galaxy S25, including Calendar, Notes, Reminder, and Clock.

One of the most useful new features lets users combine multiple extensions in a single prompt. For example, you can ask Gemini to find recipes and save them directly to Samsung Notes or Google Keep.

In one demonstration, Google showed how Gemini could search for events and send them to someone through a message – a glimpse of how it’s becoming more like a personal AI assistant. This works whether you’re using Gemini on the web, Android, or iOS.


Galaxy S25 users will soon be able to launch Gemini with a long press of the side button, taking over from Samsung’s Bixby assistant. For those using Gemini Advanced, the mobile app will include the new Deep Research feature, which is meant to deliver more thorough research results, though it remains to be seen how well it does.
