Google’s Gemini 2.5 Pro beats OpenAI’s o3 model in processing complex, lengthy texts

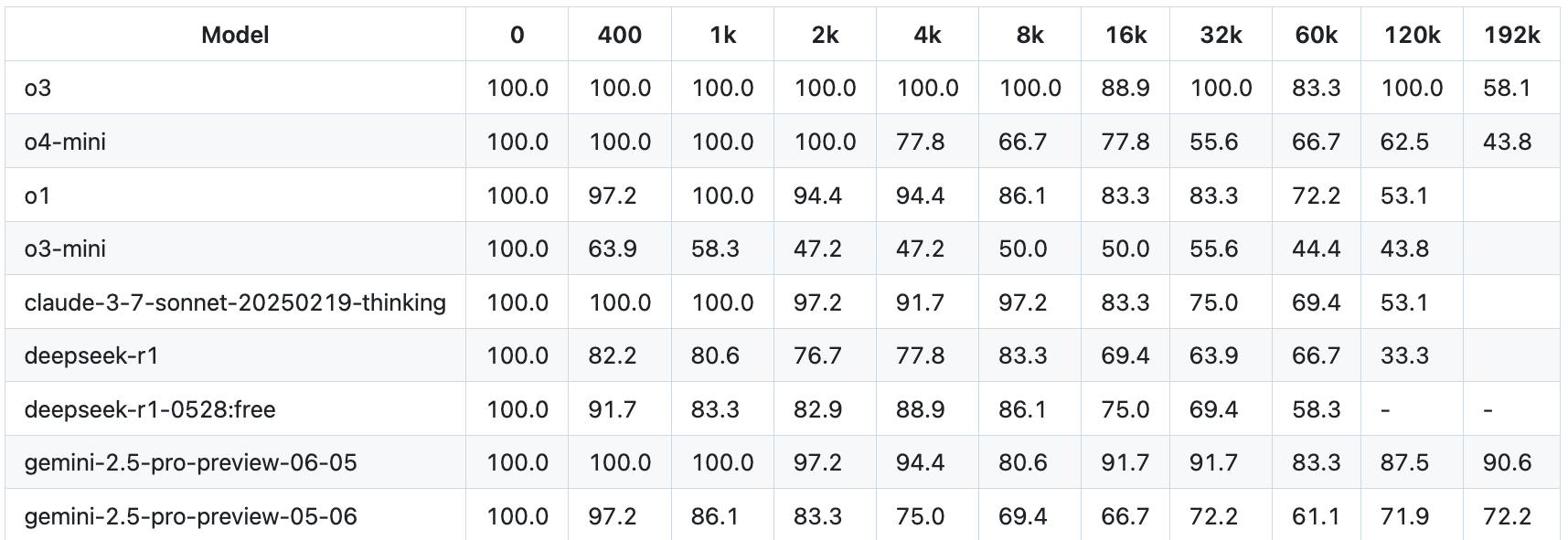

Google’s Gemini 2.5 Pro currently leads the Fiction.Live benchmark for processing complex, lengthy texts.

The test measures how well language models can understand and accurately reproduce intricate stories and contexts, tasks that go far beyond simple search functions like those evaluated in the popular “Needle in a Haystack” test.

According to Fiction.Live, OpenAI’s o3 model delivers similar performance to Gemini 2.5 Pro up to a context window of 128,000 tokens (about 96,000 words). But at 192,000 tokens (roughly 144,000 words), o3’s performance drops off sharply. Gemini 2.5 Pro’s June preview (preview-06-05) remains stable at that length.
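The word counts above follow from a rule of thumb of roughly 0.75 English words per token, which is the ratio implied by the figures in this article. The short sketch below reproduces that arithmetic. The constant and the function name are illustrative assumptions; real token counts depend on the tokenizer and the text.

```python
# Rule of thumb implied by the article's figures (128,000 tokens ~ 96,000 words).
# Assumption: actual ratios vary by tokenizer, language, and content.
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: int) -> int:
    """Estimate the number of English words that fit in a given token budget."""
    return round(tokens * WORDS_PER_TOKEN)


# Context sizes mentioned in the article.
for tokens in (128_000, 192_000, 200_000, 1_000_000):
    print(f"{tokens:>9,} tokens ~ {tokens_to_words(tokens):>7,} words")
```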

Still, the tested context sizes are far below the one million tokens that Google advertises as Gemini 2.5 Pro’s maximum. As the window grows, Gemini’s accuracy is also likely to decrease. For comparison, OpenAI’s o3 model currently tops out at a 200,000-token context window.

Meta, for example, promotes a context window of up to ten million tokens for Llama 4 Maverick. In practice, the model struggles with complex long-context tasks, ignoring too much information to be useful.

Quality over quantity: DeepMind researcher warns against context bloat

Larger context windows, even when models make better use of them, don’t automatically deliver better results. As Nikolay Savinov of Google DeepMind recently pointed out, language models run into a basic “shit in, shit out” problem when handling large numbers of tokens.

According to Savinov, giving more attention to one token inevitably means less attention for others, creating a distribution issue that can hurt overall performance.
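One way to picture this distribution issue is a toy softmax calculation. Attention weights in a standard transformer are normalized to sum to one, so every extra token in the context competes for the same fixed budget. The sketch below is a simplified illustration of that normalization, not Savinov's analysis and not a description of how Gemini works internally; the scores and function names are made up for the example.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def weight_on_relevant(n_distractors, relevant_score=2.0, distractor_score=0.0):
    """Attention weight that lands on one relevant token among n distractors.

    The scores are hypothetical: the relevant token scores higher than
    every distractor, yet its share still shrinks as distractors pile up.
    """
    scores = [relevant_score] + [distractor_score] * n_distractors
    return softmax(scores)[0]


for n in (10, 1_000, 100_000):
    print(f"{n:>7,} irrelevant tokens -> weight on relevant token: "
          f"{weight_on_relevant(n):.6f}")
```

Even though the relevant token always scores higher than any single distractor, its share of the total attention falls steadily as irrelevant tokens are added.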

Savinov recommends avoiding irrelevant information in the context whenever possible. While researchers are working on new models to address this, he suggests that for now, the best approach is to be selective.

Recent studies also show that AI models still have trouble reasoning over long contexts. In practice, this means that even when a language model can handle large documents like lengthy PDFs, users should still remove unnecessary pages beforehand, such as introductory sections that aren’t relevant to the specific task.
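As a rough sketch of what being selective could look like in practice, the snippet below keeps only the pages of an already-extracted document that mention task-relevant keywords before anything is sent to a model. The function name, the keyword matching, and the sample pages are all hypothetical; a real workflow might rely on manual selection or a proper retrieval step instead.

```python
def select_relevant_pages(pages, keywords, min_hits=1):
    """Return (page_number, text) pairs for pages that mention the keywords.

    pages: list of page texts, already extracted from the document.
    keywords: terms that matter for the task at hand.
    min_hits: minimum total keyword occurrences needed to keep a page.
    """
    lowered = [keyword.lower() for keyword in keywords]
    kept = []
    for number, text in enumerate(pages, start=1):
        body = text.lower()
        hits = sum(body.count(keyword) for keyword in lowered)
        if hits >= min_hits:
            kept.append((number, text))
    return kept


# Made-up example document with three pages.
pages = [
    "Foreword and acknowledgements",
    "Revenue grew 12% in Q3",
    "Index",
]
for number, text in select_relevant_pages(pages, ["revenue"]):
    print(f"page {number}: {text}")
```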
