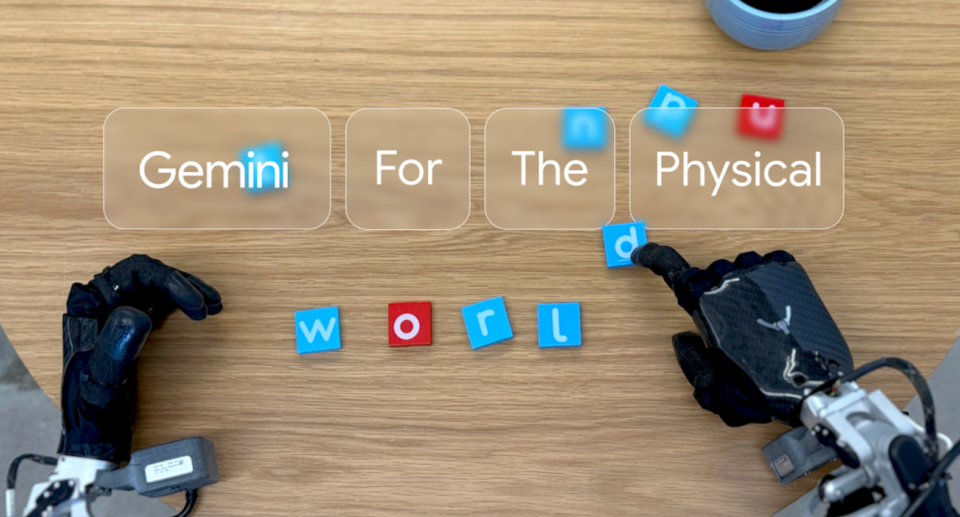

Google Deepmind unveils new AI models for robotic control

Google Deepmind has developed two new AI models that enhance how robots interact with the physical world. Both systems build on the capabilities of Gemini 2.0.

The first model, Gemini Robotics, is a Vision-Language-Action (VLA) model designed to control robots directly. It understands and responds to natural-language commands in multiple languages.

The system bridges the gap between digital AI capabilities and physical-world interactions. In testing, Gemini Robotics handled situations, objects, and environments that were not part of its training data.

The system continuously monitors its environment and adjusts immediately when something goes wrong, for example when an object slips from its grasp or someone rearranges items in its workspace. In head-to-head testing against leading models, Google Deepmind reports that Gemini Robotics performed more than twice as well as those models on generalization tasks. The system also handles tasks that demand fine motor control, such as folding origami and packing snacks into Ziploc bags.

While the system learned most of its skills on the bi-arm ALOHA 2 robot platform, it can control various robot types, including the Franka arm systems commonly used in academic research labs.

Advancing spatial reasoning capabilities

The second model, Gemini Robotics-ER, enhances these capabilities with advanced spatial understanding. It combines spatial awareness with programming skills to create new functions in real time. For example, when encountering a coffee mug, the system can calculate precisely how to grip the handle with two fingers and determine the safest approach path. Google Deepmind reports that Robotics-ER succeeds at robot control tasks two to three times more often than standard Gemini 2.0.
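To make the coffee mug example more concrete, here is a minimal geometric sketch of what "working out a two-finger grip and an approach path" can mean. It is an illustration only, not Google Deepmind's method: the function `plan_two_finger_grasp`, the `Grasp` type, and all coordinates are hypothetical, and a real system would infer the contact points from camera input rather than receive them as numbers.

```python
import math
from dataclasses import dataclass


@dataclass
class Grasp:
    center: tuple[float, float, float]    # midpoint between the two fingers (m)
    opening_m: float                      # finger separation needed (m)
    approach: tuple[float, float, float]  # unit vector the gripper moves along


def plan_two_finger_grasp(contact_a, contact_b, mug_center):
    """Hypothetical helper: derive a pinch grasp from two handle contact points.

    The gripper approaches from outside the mug, moving toward the handle
    along the line that joins the grasp center and the mug center.
    """
    center = tuple((a + b) / 2 for a, b in zip(contact_a, contact_b))
    opening = math.dist(contact_a, contact_b)

    # Vector from the mug body out to the handle.
    outward = tuple(c - m for c, m in zip(center, mug_center))
    norm = math.sqrt(sum(v * v for v in outward))
    if norm == 0:
        raise ValueError("grasp center coincides with mug center")

    # Move in the opposite direction: from free space in toward the handle.
    approach = tuple(-v / norm for v in outward)
    return Grasp(center, opening, approach)


# Made-up example: handle sits 6 cm from the mug axis and is 2 cm thick.
grasp = plan_two_finger_grasp(
    contact_a=(0.06, -0.01, 0.05),
    contact_b=(0.06, 0.01, 0.05),
    mug_center=(0.0, 0.0, 0.05),
)
print(grasp)
```

The sketch leaves out everything that makes the real problem hard, such as perception, collision checking, and force control. It only shows the kind of quantities (grasp point, finger opening, approach direction) that a spatial reasoning step would need to produce.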

To govern robot behavior, Google Deepmind has developed a framework using data-driven “constitutions” – sets of rules written in plain language. The company also released the ASIMOV dataset to help researchers evaluate the safety of robotic actions in real-world situations.

The development involves several key partnerships: Apptronik contributes expertise in humanoid robots, while Boston Dynamics and Agility Robotics serve as testing partners for Gemini Robotics-ER.