GenEx tries to teach AI to imagine what’s around the corner

Researchers at Johns Hopkins University have developed an AI system that can generate a fully explorable 3D environment from a single photo.

The system, called GenEx, could help robots and AI agents better understand and navigate complex situations by letting them “imagine” what lies beyond their immediate view. Think of it as giving machines a form of imagination – the ability to picture what might be around the next corner.

Learning from video games

Instead of using real-world photos, the team trained GenEx using virtual environments from game engines like Unreal Engine 5 and Unity. This let them efficiently collect rich, diverse training data.

The training process uses cubemaps – a way of projecting 360-degree views onto six squares that form a cube. The team gathered predefined exploration paths through these virtual worlds, systematically scanning different movement directions to build a comprehensive dataset. This helped GenEx learn to create seamless transitions between different viewpoints.
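To make the cubemap idea concrete, here is a minimal sketch of how a viewing direction maps onto one of the six cube faces. It assumes the widely used OpenGL-style face convention; the article does not say which layout GenEx uses, and the function name `direction_to_cubemap` is hypothetical.

```python
def direction_to_cubemap(x, y, z):
    """Map a 3D view direction to (face, u, v), with u and v in [0, 1].

    Assumption: OpenGL-style face orientation. GenEx's actual cubemap
    layout is not described in the article.
    """
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax == 0 and ay == 0 and az == 0:
        raise ValueError("direction must be non-zero")

    # The axis with the largest magnitude decides which face the ray hits.
    if ax >= ay and ax >= az:
        face = "+x" if x > 0 else "-x"
        sc, tc, ma = (-z if x > 0 else z), -y, ax
    elif ay >= az:
        face = "+y" if y > 0 else "-y"
        sc, tc, ma = x, (z if y > 0 else -z), ay
    else:
        face = "+z" if z > 0 else "-z"
        sc, tc, ma = (x if z > 0 else -x), -y, az

    # Project onto the face plane and rescale from [-1, 1] to [0, 1].
    u = 0.5 * (sc / ma + 1.0)
    v = 0.5 * (tc / ma + 1.0)
    return face, u, v


if __name__ == "__main__":
    # Looking straight along +x lands in the centre of the +x face.
    print(direction_to_cubemap(1.0, 0.0, 0.0))
```

Because every direction falls on exactly one face, six square images are enough to cover the full 360-degree view around a viewpoint.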


According to the researchers, the results are impressive. Even when “exploring” up to 20 meters in the generated environments, the images remain stable and coherent. Standard quality metrics show low error rates, suggesting the system produces highly realistic visualizations.

GenEx can generate bird’s-eye views by moving along the vertical axis, giving AI agents a broader view of their surroundings – kind of like having a drone’s perspective without needing the drone.

The system is also surprisingly good at creating multi-view videos of objects. While other open-source models struggle with this task, GenEx maintains consistent backgrounds and realistic lighting throughout the sequence, the researchers say.

Perhaps most impressively, GenEx can help with something called active 3D mapping. As an AI agent explores the generated environment, it builds a three-dimensional map of everything it “sees,” similar to how autonomous vehicles build maps of their surroundings – except this all happens in GenEx’s imagined space rather than the real world.

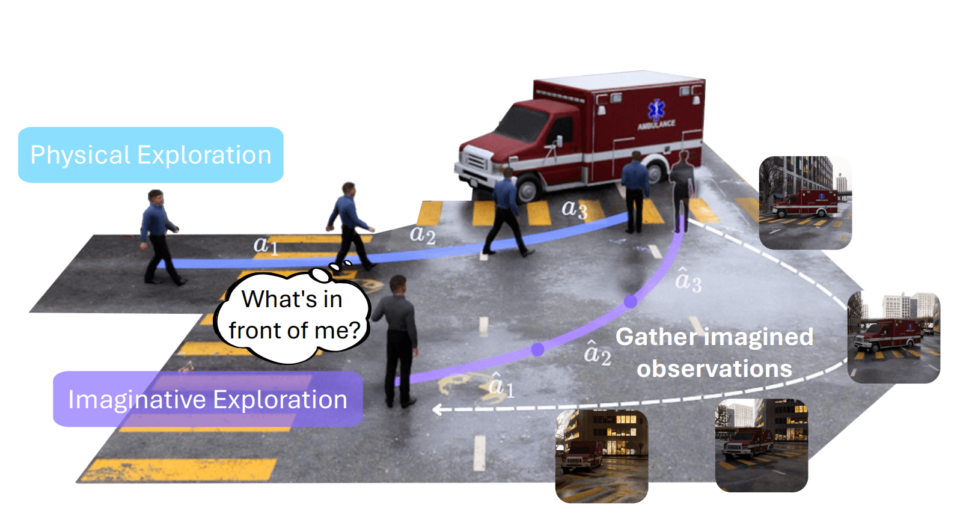

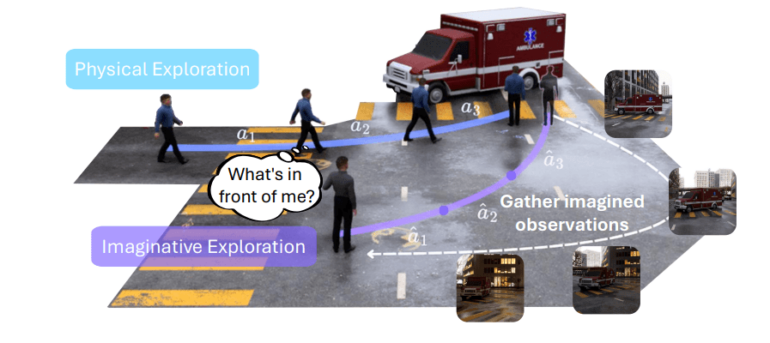

“Imaginative Exploration” helps AI make better decisions

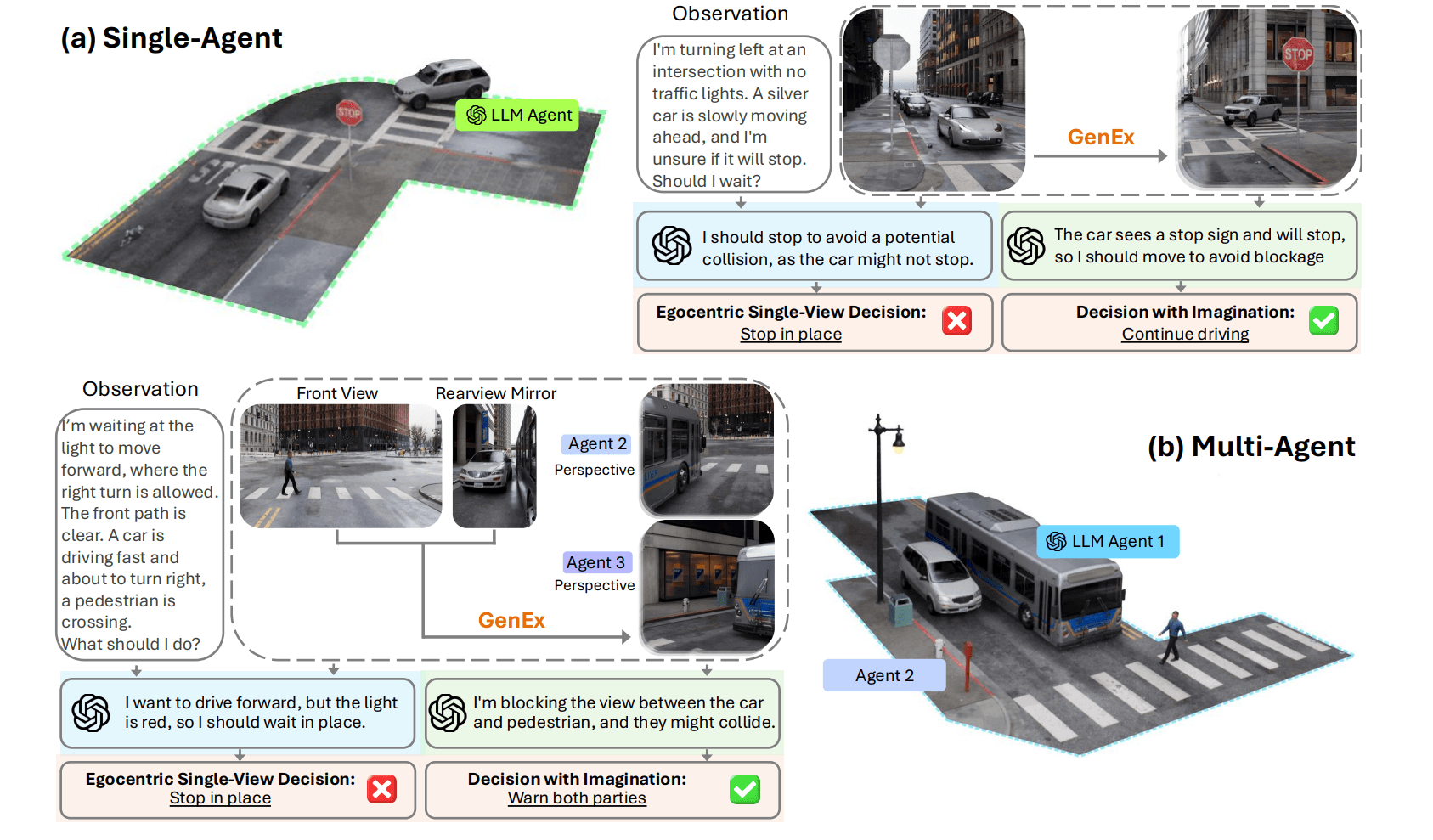

Another application is AI decision-making. The researchers demonstrated this with two traffic scenarios:


In the first case, an AI agent approaching an unmarked intersection sees a silver car approaching from ahead. With just one image, the agent would stop to be safe. But by using GenEx to explore different viewpoints, it can spot a stop sign facing the other car and decide to keep driving to prevent traffic backup.

In another scenario, an agent waiting at a red light needs to decide whether to make a right turn, complicated by an approaching car and a crossing pedestrian. Using GenEx to explore multiple viewpoints, the agent realizes it’s blocking the line of sight between the car and pedestrian. Instead of just waiting, it decides to warn both parties of the potential danger.

The researchers compare this to human imagination – we don’t need to physically walk around a fire truck to know it’s probably blocking the entire road, or circle a stop sign to know what’s on its back. GenEx gives AI agents similar imaginative capabilities.

When equipped with GenEx, a GPT-4o agent made correct decisions 85% of the time, compared to just 46% for an agent working from a single image. In multi-agent scenarios, the difference was even more dramatic: 95% accuracy with GenEx versus 22% without.
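As a quick check on how large those gaps are, the short sketch below computes the difference in percentage points and the ratio for each setting. It uses only the four figures quoted above; the dictionary layout and the `gain` helper are illustrative.

```python
# Accuracy figures quoted in the article: (single image only, with GenEx), in percent.
results = {
    "single agent": (46, 85),
    "multi-agent": (22, 95),
}


def gain(baseline, with_genex):
    """Return (percentage-point gain, ratio of accuracies)."""
    return with_genex - baseline, with_genex / baseline


gains = {name: gain(*scores) for name, scores in results.items()}

for name, (points, ratio) in gains.items():
    print(f"{name}: +{points} percentage points, {ratio:.2f}x the baseline")
```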

Still, the team acknowledges some limitations. Bridging the gap between imagined and real environments remains challenging. Future work will need to focus on adapting the system to real-world sensor data and dynamic conditions.