Gemini’s AI-powered “Deep Research” feature struggles with accuracy in early testing

Google has added a new “Deep Research” feature to Gemini Advanced that aims to improve its AI assistant's ability to research topics on the internet. Early testing shows both the tool's potential and its significant limitations.

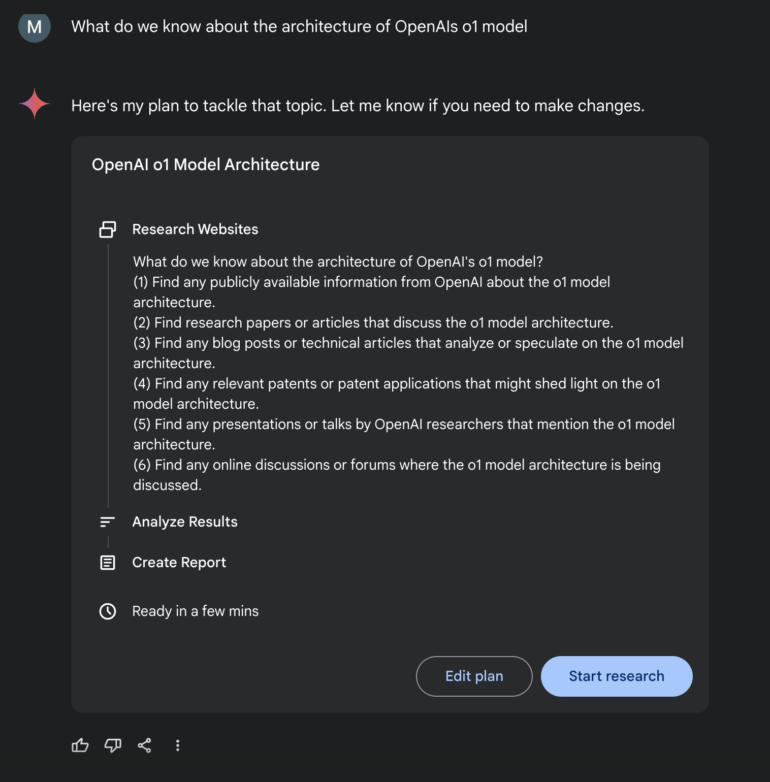

The new “Deep Research” feature, currently available for Gemini 1.5 Pro, first creates a research strategy that users can manually adjust. The system then searches the internet for relevant sources, analyzes them, and generates a report summarizing key topics with source citations. This positions Google’s assistant as an AI-powered research tool similar to Perplexity.

Testing reveals accuracy issues

In my test, I asked the system to collect information about OpenAI’s o1 model architecture. Gemini developed a six-step research plan that included searching for research papers, articles, patents, and OpenAI presentations.

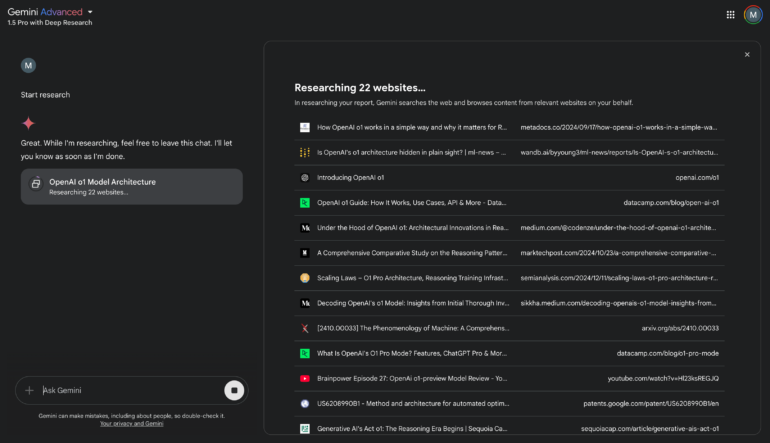

Depending on the query, the system searched between 22 and 70 websites and produced a comprehensive report. The source selection and the overall organization of known facts about the o1 model worked well, but significant problems emerged in the details.

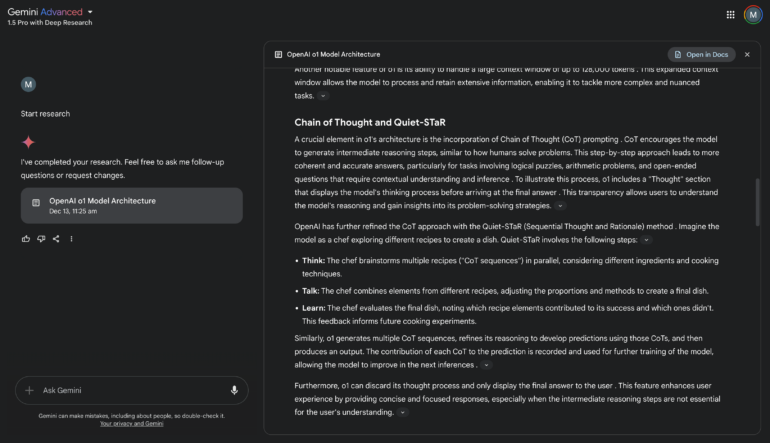

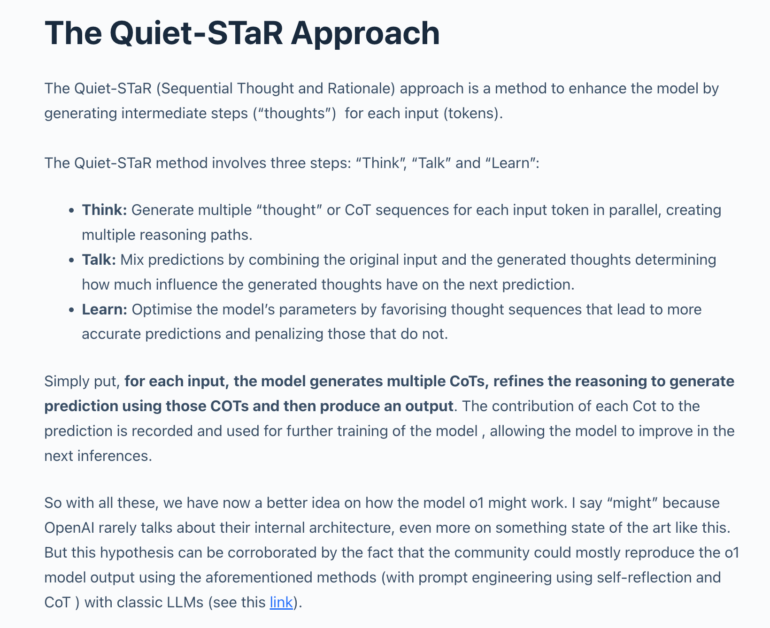

For example, the system incorrectly claimed that OpenAI o1 uses the Quiet-STaR method.

A check of the cited source revealed that Quiet-STaR was discussed only as a possible approach to better chain-of-thought training. The author explicitly emphasized that this was merely speculation about how OpenAI trained o1.

Useful for gathering sources, problematic with details

Best practices for using these systems effectively have yet to emerge. Language models can provide good support in certain areas despite occasional inaccuracies, but this test suggests that AI research assistants are best used to gather relevant sources and provide an initial, if flawed, overview. Users must keep in mind that the generated reports are very likely to contain misinformation, and the effort needed to fact-check them may outweigh the benefits.

Google acknowledges this with a notice under the chat window: “Gemini can make mistakes, including about people, so double-check it.”

Deep Research is available to Gemini Advanced subscribers using Gemini 1.5 Pro.