Factorio joins growing list of video games doubling as AI benchmarking tools

Factorio, a complex computer game focused on construction and resource management, has become researchers’ latest tool for evaluating AI capabilities. The game tests language models’ ability to plan and build intricate systems while managing multiple resources and production chains.

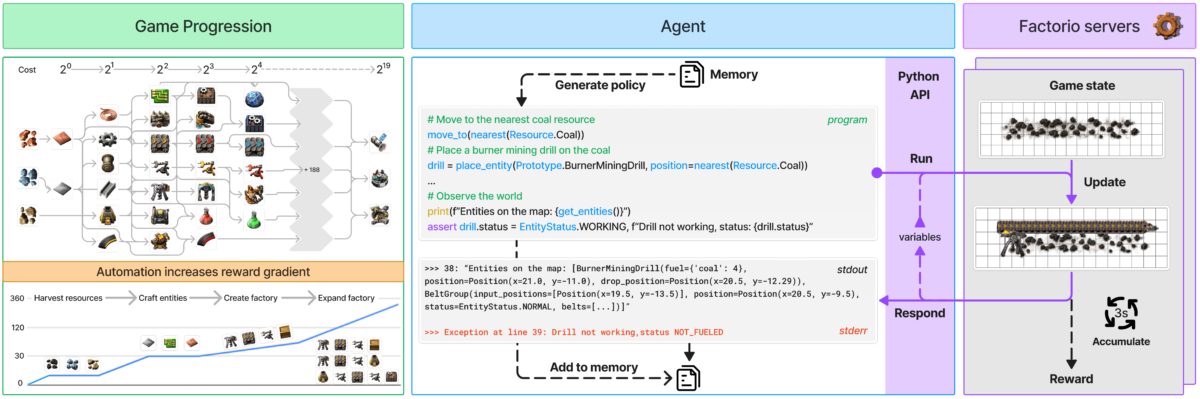

The Factorio Learning Environment (FLE) provides two distinct testing modes. “Lab-Play” features 24 structured challenges with specific goals and limited resources, ranging from simple two-machine builds to complex factories with nearly 100 machines. In “Open Play” mode, AI agents explore procedurally generated maps with one objective: build the largest possible factory.

The system operates through a Python API: agents write code to take actions and to check the state of the game. This setup tests language models' ability to synthesize programs and handle complex systems. The API exposes functions for placing and connecting components, managing resources, and monitoring production progress.
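To make the code-as-action idea concrete, here is a minimal sketch of such a loop. It does not use the real FLE interface: `ToyFactory`, its `place`, `connect`, and `status` methods, and `run_agent_program` are hypothetical names invented for illustration. The point is only that the model's output is a program, and that printed output and error messages come back as feedback.

```python
import contextlib
import io
import traceback


class ToyFactory:
    """Hypothetical stand-in for a game API; NOT the real FLE interface."""

    def __init__(self, inventory):
        self.inventory = dict(inventory)  # item name -> count available
        self.entities = {}                # entity id -> position
        self.links = []                   # (source id, target id) pairs

    def place(self, name, position):
        """Place one item from the inventory on the map and return its id."""
        if self.inventory.get(name, 0) < 1:
            raise ValueError(f"no {name} in inventory")
        self.inventory[name] -= 1
        entity_id = f"{name}-{len(self.entities)}"
        self.entities[entity_id] = position
        return entity_id

    def connect(self, source, target):
        """Link two placed entities, e.g. a drill feeding a furnace."""
        for entity_id in (source, target):
            if entity_id not in self.entities:
                raise KeyError(f"unknown entity {entity_id}")
        self.links.append((source, target))

    def status(self):
        """Summarize the current state of the factory."""
        return {"entities": len(self.entities), "links": len(self.links)}


def run_agent_program(factory, program):
    """Execute model-written code; return (stdout, error) as feedback."""
    stdout = io.StringIO()
    error = None
    try:
        with contextlib.redirect_stdout(stdout):
            exec(program, {"factory": factory})
    except Exception:
        error = traceback.format_exc(limit=1)
    return stdout.getvalue(), error


# A program of the kind a language model might emit (assumed, for illustration).
program = """
drill = factory.place("drill", (0, 0))
furnace = factory.place("furnace", (3, 0))
factory.connect(drill, furnace)
print(factory.status())
"""

factory = ToyFactory({"drill": 1, "furnace": 1})
output, error = run_agent_program(factory, program)
```

In this sketch a failed action, such as placing a second drill with none left in the inventory, does not crash the loop. The error text is returned to the caller, which is where a model would have to read it and try to correct its next program.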

The researchers score agents on two metrics. The "Production Score" sums the value of everything a factory produces, and it grows exponentially as production chains become more complex. "Milestones" track important achievements such as creating a new item or researching a technology. The game's economic simulation considers factors like resource scarcity, market prices, and production efficiency.
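The two metrics can be illustrated with a small sketch. The item names, chain depths, base value, and growth factor below are assumptions made up for the example, not the values FLE uses. The sketch only shows the general shape: an item's value grows exponentially with the length of its production chain, the score sums value over everything produced, and milestones record the first time each item type appears.

```python
# Hypothetical chain depths: 0 = raw resource, higher = longer production chain.
CHAIN_DEPTH = {"iron-ore": 0, "iron-plate": 1, "gear": 2, "science-pack": 3}

BASE_VALUE = 1.0  # assumed value of a raw resource
GROWTH = 4.0      # assumed value multiplier per production step


def item_value(item):
    """Value grows exponentially with the depth of the production chain."""
    return BASE_VALUE * GROWTH ** CHAIN_DEPTH[item]


def production_score(produced):
    """Sum the value of all items produced (item name -> count)."""
    return sum(count * item_value(item) for item, count in produced.items())


def milestones(produced_over_time):
    """List item types in the order they were first produced."""
    seen = []
    for step in produced_over_time:
        for item, count in step.items():
            if count > 0 and item not in seen:
                seen.append(item)
    return seen


score = production_score({"iron-ore": 100, "iron-plate": 50, "gear": 10})
# 100 * 1 + 50 * 4 + 10 * 16 = 460.0

reached = milestones([{"iron-ore": 5}, {"iron-ore": 3, "iron-plate": 2}])
# ["iron-ore", "iron-plate"]
```

Under these assumed numbers, ten gears contribute almost as much to the score as fifty iron plates, which is why an agent that moves up the production chain early can pull ahead of one that keeps producing basic goods.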

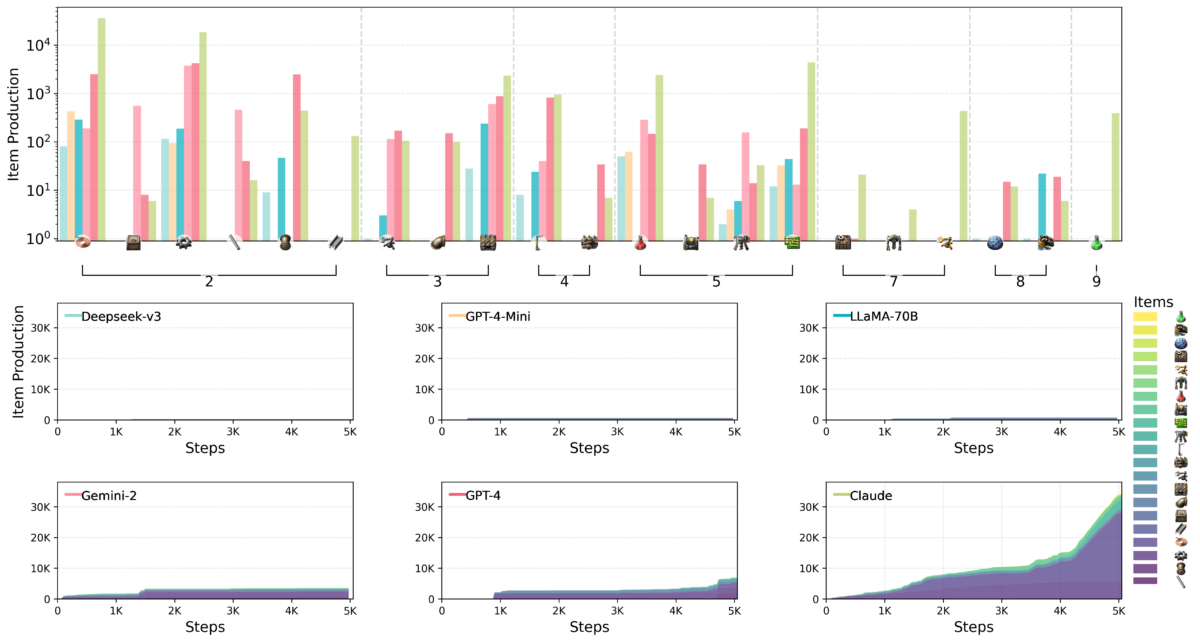

Claude 3.5 Sonnet leads the pack

The research team, which includes an Anthropic scientist, evaluated six leading language models in FLE: Claude 3.5 Sonnet, GPT-4o, GPT-4o mini, DeepSeek-V3, Gemini 2.0 Flash, and Llama-3.3-70B-Instruct. Large reasoning models (LRMs) were not included in this round of testing, although previous benchmarks suggest that models like o1 plan better, while still having limitations of their own.

Testing revealed significant challenges for the evaluated language models, particularly with spatial reasoning, long-term planning, and error correction. When building factories, the AI agents had difficulty efficiently arranging and connecting machines, resulting in suboptimal layouts and production bottlenecks.

Strategic thinking also proved challenging. The models consistently prioritized short-term goals over long-term planning. And while they could handle basic troubleshooting, they often faltered when faced with more complex problems and became trapped in inefficient debugging cycles.

Claude 3.5 Sonnet tries to build the largest possible Factorio factory. | Video: Hopkins et al.

Among the tested models, Claude 3.5 Sonnet delivered the strongest performance, though it still couldn't master every challenge. In Lab-Play, Claude completed 15 of the 24 tasks, while competing models solved no more than 10. In Open Play, Claude reached a production score of 2,456 points, followed by GPT-4o at 1,789 points.

Claude demonstrated sophisticated Factorio gameplay through its strategic approach to manufacturing and research. While other models remained focused on basic products, Claude quickly advanced to complex production processes. A notable example was its transition to electric drill technology, which led to a substantial increase in iron plate production rates.

The researchers suggest FLE’s open, scalable nature makes it valuable for testing future, potentially more capable language models. They note that reasoning models haven’t yet been evaluated, and propose expanding the environment to include multi-agent scenarios and human performance benchmarks for better context.

This work adds to a growing collection of game-based AI benchmarks, including the BALROG collection and the upcoming MCBench, which will test models using Minecraft buildings. Previous gaming AI milestones include OpenAI Five, the OpenAI system that defeated professional human teams in Dota 2.
