Alibaba’s QwQ-32B is an efficient reasoning model that rivals much larger AI systems

Alibaba’s latest AI model demonstrates how reinforcement learning can create efficient systems that match the capabilities of much larger models.

Despite having only 32 billion parameters, Alibaba’s new QwQ-32B model delivers remarkable performance. The company first introduced a preliminary version, QwQ-32B-Preview, in November 2024.

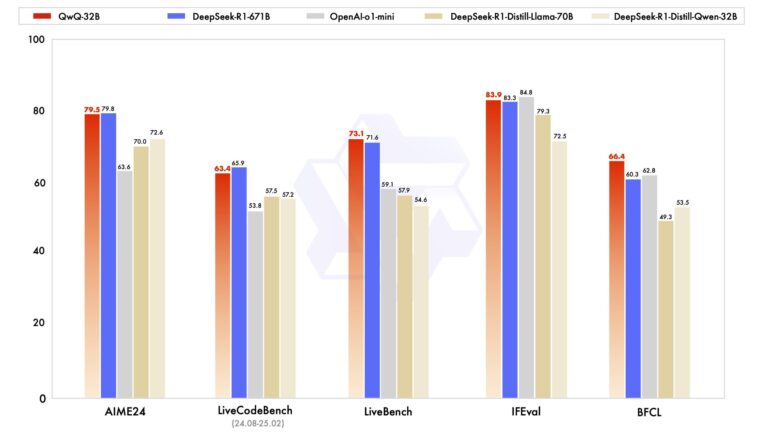

When tested on math, programming and general problem-solving skills, QwQ-32B achieved results comparable to DeepSeek’s much larger DeepSeek-R1, which uses 671 billion parameters.

DeepSeek-R1 uses a mixture-of-experts architecture that activates only 37 billion parameters per token, but all 671 billion parameters must still be held in memory, so it demands significant graphics memory to operate. QwQ-32B's much smaller footprint makes it an attractive option for users who have less powerful hardware but still want high performance.
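A rough back-of-the-envelope calculation makes the memory gap concrete. The sketch below estimates only the space needed to store the weights, assuming a given number of bits per parameter; it ignores the KV cache, activations, and runtime overhead, so real requirements will be higher. The function name and the chosen precisions are illustrative, not taken from Alibaba or DeepSeek.

```python
# Weights-only memory estimate (decimal gigabytes).
# Assumption: every parameter is stored at the same precision.

def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Return the storage needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


# Parameter counts as reported in the article.
MODELS = {
    "QwQ-32B": 32_000_000_000,
    # All experts must be resident in memory, even though only
    # 37 billion parameters are active for any given token.
    "DeepSeek-R1": 671_000_000_000,
}

for name, params in MODELS.items():
    for bits in (16, 4):
        size = weight_memory_gb(params, bits)
        print(f"{name} at {bits}-bit: {size:.1f} GB")
```

Under these assumptions, the 16-bit weights come to about 64 GB for QwQ-32B versus about 1,342 GB for DeepSeek-R1, and 4-bit quantization brings them to roughly 16 GB and 335.5 GB respectively.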


Reinforcement learning boosts math and coding performance

Alibaba’s researchers attribute QwQ-32B’s capabilities to their effective application of reinforcement learning on top of a foundation model pre-trained with extensive world knowledge. The model learns through interaction with human or machine judges, continuously improving based on received rewards.

The researchers implemented the training process in two distinct phases. The first phase focused on scaling reinforcement learning for math and programming tasks using an accuracy checker and code execution server.
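The article does not describe how the accuracy checker or the code execution server are built, but the general idea of such outcome-based rewards can be sketched in a few lines. Everything below is a hypothetical illustration: the function names, the binary 0/1 reward, and the use of a local subprocess in place of a sandboxed server are assumptions, not Alibaba's implementation.

```python
import subprocess
import sys
from fractions import Fraction


def math_reward(model_answer: str, reference_answer: str) -> float:
    """Hypothetical accuracy checker: 1.0 if the final answers match, else 0.0."""
    try:
        # Compare numerically so that "0.5" and "1/2" count as the same answer.
        same = Fraction(model_answer.strip()) == Fraction(reference_answer.strip())
    except (ValueError, ZeroDivisionError):
        # Fall back to an exact string match for non-numeric answers.
        same = model_answer.strip() == reference_answer.strip()
    return 1.0 if same else 0.0


def code_reward(candidate_source: str, test_source: str, timeout_s: float = 5.0) -> float:
    """Hypothetical code checker: 1.0 if the candidate passes the tests, else 0.0.

    Runs candidate and tests in a separate Python process. A real system
    would use an isolated sandbox rather than the local interpreter.
    """
    program = candidate_source + "\n\n" + test_source
    try:
        result = subprocess.run(
            [sys.executable, "-c", program],
            capture_output=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return 0.0  # Treat runaway code as a failure.
    return 1.0 if result.returncode == 0 else 0.0


if __name__ == "__main__":
    print(math_reward("0.5", "1/2"))
    print(code_reward("def add(a, b):\n    return a + b", "assert add(2, 3) == 5"))
```

Rewards like these can be computed automatically, which is what lets a reinforcement learning loop score large numbers of math and programming attempts without a human grader.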

In the second phase, they added another reinforcement learning stage focused on general capabilities like following instructions, aligning with human preferences, and agent performance. Through these agent-related capabilities, QwQ-32B can think critically, use tools, and adjust its conclusions based on feedback from its environment.

Alibaba has released QwQ-32B under the Apache 2.0 license as an open-weight model on Hugging Face and ModelScope. Users can access the model through Hugging Face Transformers, the Alibaba Cloud DashScope API, or test it directly through Qwen Chat.
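For readers who want to try the Transformers route, a minimal sketch might look like the following. It assumes the weights are published under the repository id `Qwen/QwQ-32B`, that the `transformers`, `torch`, and `accelerate` packages are installed, and that enough GPU memory is available; the helper names and the token limit are illustrative choices, and the model card remains the authoritative source for recommended settings.

```python
MODEL_ID = "Qwen/QwQ-32B"  # assumed Hugging Face repository id


def build_messages(question: str) -> list[dict[str, str]]:
    """Wrap a single user question in the chat message format."""
    return [{"role": "user", "content": question}]


def generate_reply(question: str, max_new_tokens: int = 512) -> str:
    """Load the model and generate one reply. Downloads the weights on first use."""
    # Imported lazily so this file can be loaded without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype="auto",   # use the precision stored in the checkpoint
        device_map="auto",    # spread layers across available devices (needs accelerate)
    )

    # Render the conversation with the model's own chat template.
    prompt = tokenizer.apply_chat_template(
        build_messages(question),
        tokenize=False,
        add_generation_prompt=True,
    )
    inputs = tokenizer([prompt], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


# Example call (requires the full model to be downloaded and loaded):
# print(generate_reply("How many prime numbers are there below 50?"))
```

Because reasoning models tend to write out long chains of thought before answering, the token limit in this sketch may need to be raised considerably for harder problems.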

Alibaba has positioned QwQ-32B within its broader AI strategy alongside the Qwen2.5 series, which includes specialized models for language, programming, and mathematics, as well as the large-context Qwen2.5-Turbo. In February, the company announced a 50 billion euro investment in AI development and cloud infrastructure. This initiative supports China’s efforts to develop domestic processors for training large language models, reducing dependence on US companies like Nvidia.
