AI-powered opinion manipulation emerges as the biggest threat of generative models, study finds

A new analysis outlines the tactics and motivations behind generative AI abuses from January 2023 to March 2024, providing a snapshot of the most significant threats.

Researchers from Google DeepMind, Jigsaw, and Google.org have published a qualitative study of 200 media reports on generative AI (GenAI) misuse over a 15-month period.

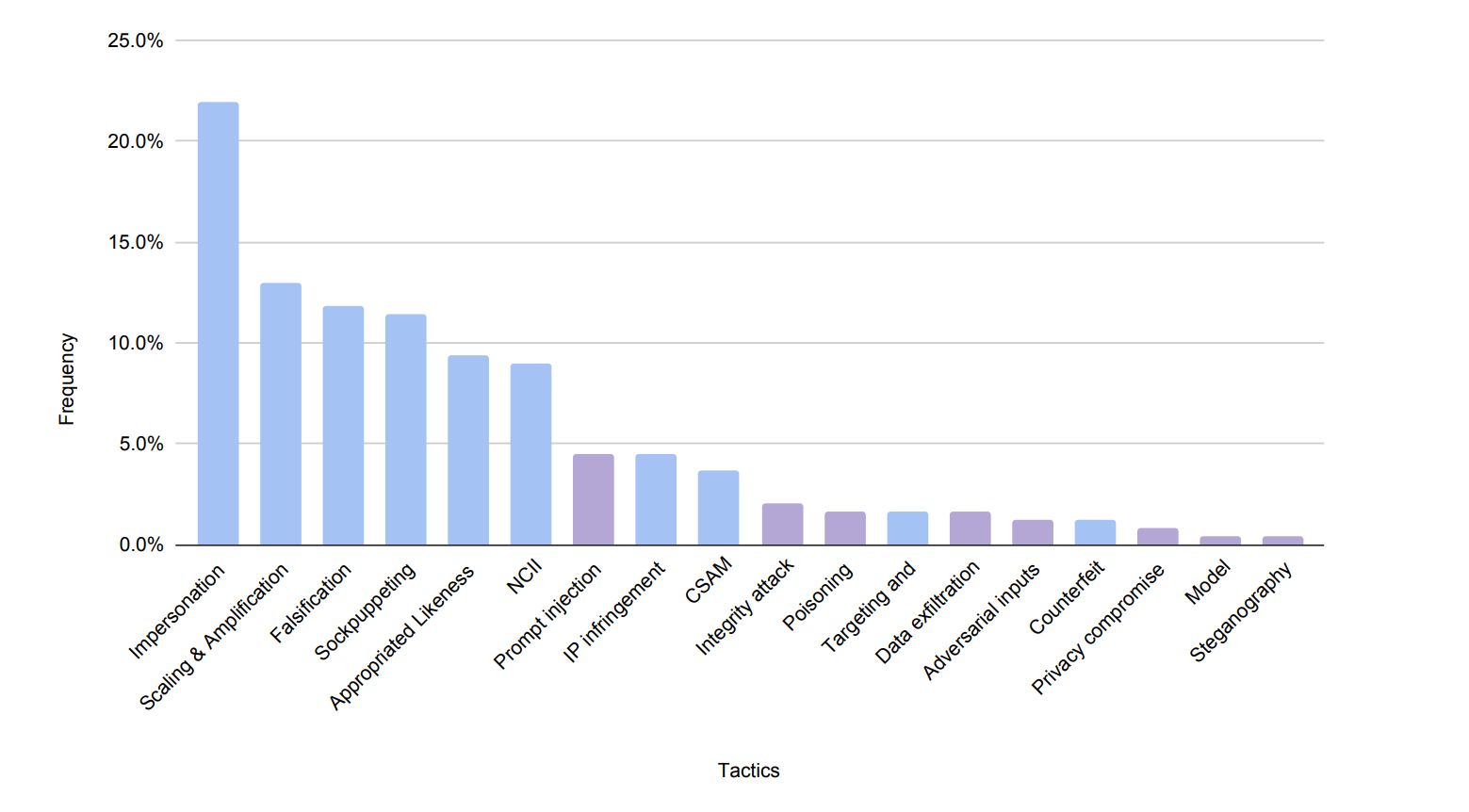

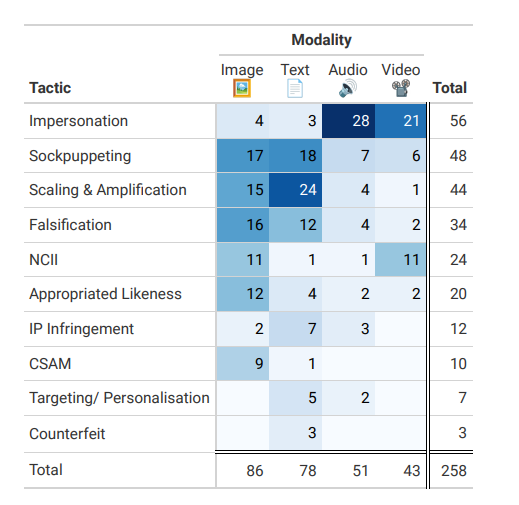

The study shows that most observed cases of GenAI misuse aim to exploit the systems’ capabilities rather than directly attack the models. The most common form of abuse involves manipulating representations of real people, such as identity theft, sock-puppet tactics, or generating non-consensual intimate images.

Other frequent tactics include spreading false information and using GenAI-powered bots and fake profiles to scale and amplify content.

The researchers found that most abuse cases are not technically sophisticated attacks on AI systems. Instead, they mainly exploit easily accessible GenAI features that require minimal technical expertise.

“The increased sophistication, availability and accessibility of GenAI tools seemingly introduces new and lower-level forms of misuse that are neither overtly malicious nor explicitly violate these tools’ terms of services, but still have concerning ethical ramifications,” the authors write.

The researchers cite new forms of political communication and advocacy that increasingly blur the lines between authenticity and deception as an example.

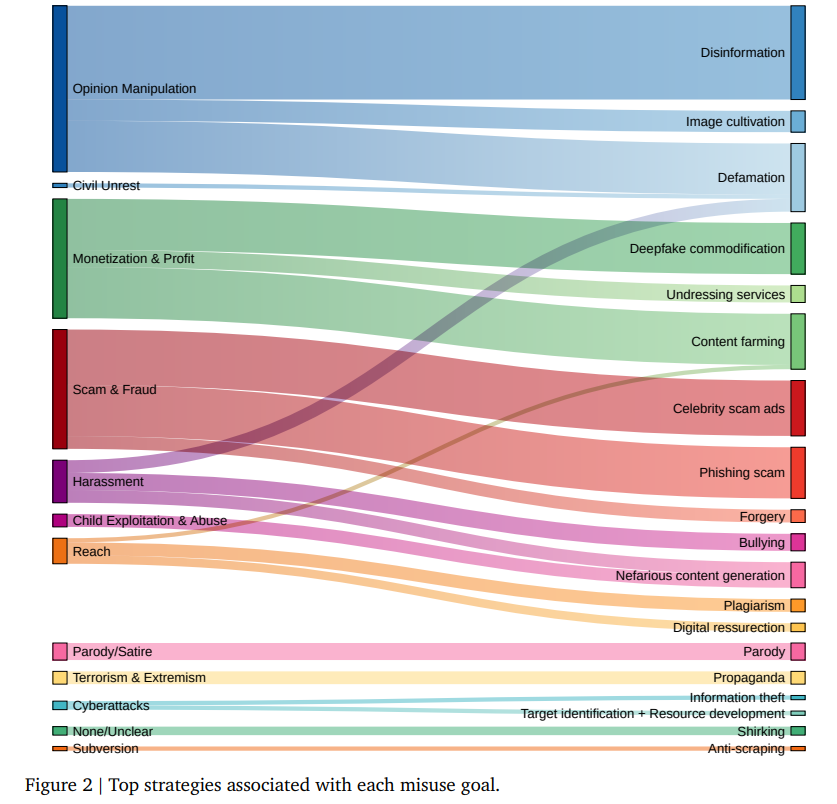

The study reveals that the most common motives behind GenAI misuse are influencing public opinion (27% of reported cases) and monetizing products and services (21%). Fraudulent activities, such as theft of information, money, or assets, rank third (18%).

Actors used various tactics to manipulate public opinion, including impersonating public figures, feigning grassroots support for or against a cause through synthetic digital personalities (“astroturfing”), and creating fake media.

Most cases in the dataset involved generating emotionally charged synthetic images on politically controversial topics such as war, social unrest, or economic decline. Others aimed to damage reputations through falsified compromising portrayals of politicians.

The misuse cases uncovered by Microsoft and OpenAI were also primarily political in nature, but allegedly had little impact. OpenAI recently tightened access restrictions for China, Russia, and other countries.

AI-generated content is also produced on a large scale to generate advertising revenue. Fraudulent activities such as fake celebrity endorsements for crypto schemes or personalized phishing campaigns are also common.

Attacks on AI systems still rare

According to the study, most attacks on GenAI systems themselves during the period covered (January 2023 to March 2024) were carried out as part of research to uncover vulnerabilities. About a third of these attacks used “prompt injections” to manipulate models.

Explicit attacks on GenAI systems outside of research were still rare during this period. The researchers documented only two actual cases: one aimed to prevent unauthorized scraping of copyrighted material, and the other to let users generate uncensored content.

The findings provide an understanding of the potential for harm from these technologies that regulators, trust and safety teams, and researchers can use to develop AI policies and countermeasures. With multimodal capabilities, new methods of abuse are likely to emerge, the researchers say.

To respond effectively to the rapidly changing threat landscape, the authors emphasize the need for a more comprehensive overview through data sharing between actors. They advocate for an industry-wide information exchange system similar to that used in the aviation industry.