AI companies make progress on AI transparency, but sensitive issues remain shrouded in secrecy

A study by Stanford University shows that AI developers have disclosed significantly more information about their models in the past six months. However, there is still significant room for improvement.

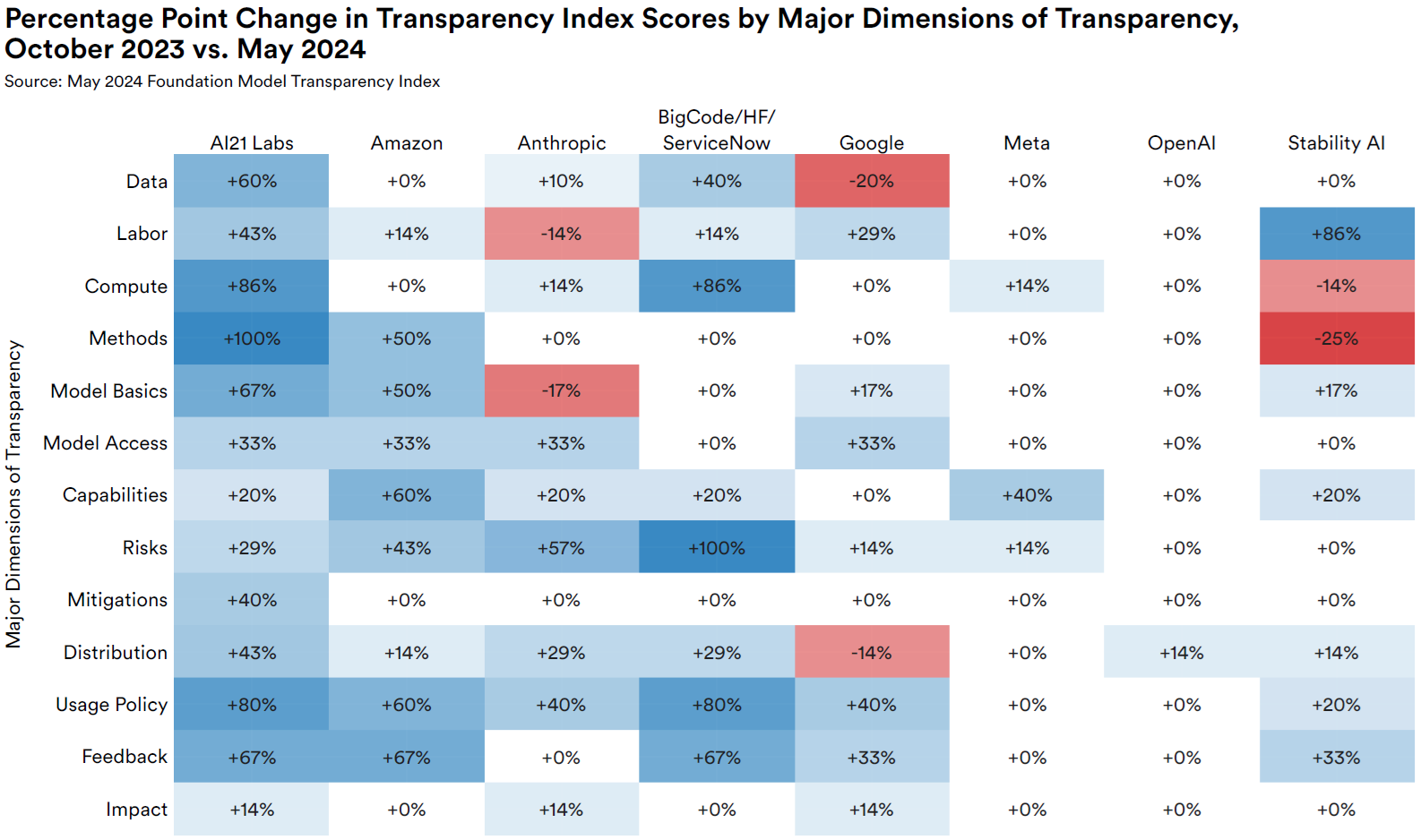

AI companies have become more transparent about their large language models over the past six months, according to a recent Stanford University study. The Foundation Model Transparency Index (FMTI) ranks companies based on 100 transparency indicators covering topics such as the data, computing power, capabilities, and risks of the models.

In October 2023, the average score of the evaluated companies was only 37 out of 100. The follow-up study published in May 2024 shows an increase to an average of 58 points.

Compared to October 2023, all eight companies evaluated in both studies have improved. Some improved dramatically, such as AI21 Labs, which went from 25 to 75 points. Others, like OpenAI, improved only slightly, from 48 to 49 points.

Overall, this is an improvement, but it is still far from genuine transparency. Developers are most forthcoming about the capabilities of their models and the documentation for downstream applications. In contrast, sensitive issues such as training data, model reliability, and model impact remain opaque.

The study shows a systemic lack of transparency in some areas, where almost all developers withhold information, such as the copyright status of training data and the use of models in different countries and industries.

For 96 of the 100 indicators, at least one company scored the point, and for 89 indicators, more than one company did. According to the researchers, great progress would be possible if every company matched the most transparent developer on each indicator.

For the current study, 14 developers submitted transparency reports: eight from the previous study, including OpenAI and Anthropic, and six new ones, such as IBM. On average, they provided information on 16 additional indicators that was not previously publicly available.

A detailed evaluation of the individual models assessed is available on an interactive website.
