AI agents in 2025 will be all about managing inflated expectations

The AI world is abuzz with predictions about agents in 2025. But some of the people laying the groundwork are more cautious about the timeline.

Logan Kilpatrick, who heads up Google AI Studio and manages the Gemini API, recently shared his thoughts on X about where AI is headed this year. He believes AI vision technology is already mature enough for widespread adoption, and 2025 will be the year it goes mainstream.

But when it comes to AI agents, Kilpatrick says they “still need a little more work” before they can handle billion-user scale deployment. He points out there’s typically a twelve-month gap between when an AI capability becomes technically possible and when it sees widespread adoption – putting meaningful agent deployment closer to 2026.

Microsoft’s AI CEO agrees it’s still early days

Mustafa Suleyman, Microsoft’s “CEO of AI,” shares this measured outlook. Speaking in June 2024, he explained that while AI models might handle specific, narrow tasks autonomously within two years, we’ll need two more generations of models before they work consistently well.

The challenge, he says, is getting models to match each user request with exactly the right function. Suleyman points out that today’s 80 percent accuracy isn’t good enough for reliable AI agents – users need 99 percent accuracy to trust them. Getting there would require about 100 times more computing power, something he thinks we won’t see until GPT-6.
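
One way to see why the gap between 80 and 99 percent matters so much is to look at multi-step tasks. The sketch below is an illustration, not Suleyman's own calculation: it assumes each step succeeds independently with the same accuracy, and the function name `task_success_rate` is made up for this example.

```python
def task_success_rate(step_accuracy: float, steps: int) -> float:
    """Chance that every step in a task succeeds.

    Assumes each step is independent and equally accurate,
    which is a simplification for illustration only.
    """
    return step_accuracy ** steps


if __name__ == "__main__":
    # Compare the two accuracy levels mentioned in the article.
    for accuracy in (0.80, 0.99):
        for steps in (1, 5, 10):
            rate = task_success_rate(accuracy, steps)
            print(f"accuracy={accuracy:.2f} steps={steps:2d} success={rate:.3f}")
```

Under this assumption, a ten-step task at 80 percent per-step accuracy finishes correctly only about 11 percent of the time, compared with roughly 90 percent at 99 percent per-step accuracy.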

Still, the major players aren’t sitting idly by. Google is pushing its agentic agenda forward with Gemini 2.0, and OpenAI reportedly plans to launch Operator in January, an AI agent that can handle tasks like browsing the Web.

But we’ve seen enough AI hype cycles to know there’s often a gap between what’s announced and what actually works. So it’s always worth asking whether these are meaningful advances or just stories to keep investors excited.

What makes something a real agent?

Anyone who regularly works with large language models and complex prompts knows why Kilpatrick and Suleyman are being relatively cautious. LLMs still struggle with reliability, especially when handling detailed, multi-step instructions.

But there’s a deeper issue: we can’t really evaluate predictions about agents until we agree on what an “agent” actually is.

Recommendation

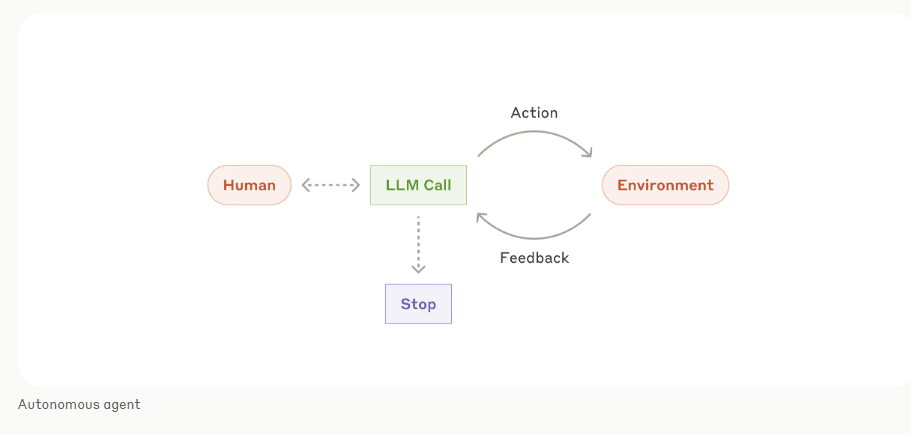

Anthropic offers a useful distinction between workflows and true agents. Workflows follow preset patterns, with language models and tools operating along fixed paths. True agents, on the other hand, control their processes and tools autonomously and dynamically. OpenAI defines agents similarly – as AI systems that can pursue complex goals with minimal direct oversight.
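
The distinction can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Anthropic's or OpenAI's implementation: the tools `search` and `summarize`, the `toy_policy` function standing in for a language model, and both runner functions are hypothetical names invented for this example.

```python
from typing import Callable, Dict, List

Tool = Callable[[str], str]


def search(text: str) -> str:
    """Hypothetical tool: marks the text as searched."""
    return text + " [searched]"


def summarize(text: str) -> str:
    """Hypothetical tool: marks the text as summarized."""
    return text + " [summarized]"


def run_workflow(request: str, steps: List[Tool]) -> str:
    """Workflow: the developer fixes the sequence of steps in advance."""
    result = request
    for step in steps:
        result = step(result)
    return result


def run_agent(
    request: str,
    tools: Dict[str, Tool],
    choose: Callable[[str, List[str]], str],
    max_steps: int = 5,
) -> str:
    """Agent: a policy picks the next tool at every step, or stops."""
    state = request
    for _ in range(max_steps):
        name = choose(state, list(tools))
        if name == "done":
            break
        state = tools[name](state)
    return state


def toy_policy(state: str, tool_names: List[str]) -> str:
    """Stand-in for a model's decision; a real agent would call an LLM here."""
    if "[searched]" not in state:
        return "search"
    if "[summarized]" not in state:
        return "summarize"
    return "done"


if __name__ == "__main__":
    print(run_workflow("query", [search, summarize]))
    print(run_agent("query", {"search": search, "summarize": summarize}, toy_policy))
```

Both calls produce the same result here. The difference is who decides the path: in the workflow the order is hard-coded, while in the agent loop the policy chooses each step and decides when to stop.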

Many companies claiming to offer “agents” today are really just connecting prompts to each other or to tools like databases and web search. While these systems can be useful, calling them agents is mostly marketing spin. More accurate terms would be “prompt chaining” or “assistants” – essentially pre-prompted chatbots with custom data access.

It’s telling that Anthropic, which recently released Computer Use (the first “next-gen” agent for computer tasks), actually advises companies to start simple with basic prompts and optimize from there. The company argues that complex multi-agent systems only make sense when simpler solutions hit their limits.

Maybe they’re onto something. While companies rush to announce autonomous AI agents, most organizations are still figuring out how to use basic generative AI effectively. Before pursuing more complex AI systems, perhaps we should focus on implementing the tools we already have in meaningful ways.