A new study by NYU researchers supports Apple’s doubts about AI reasoning, but sees no dead end

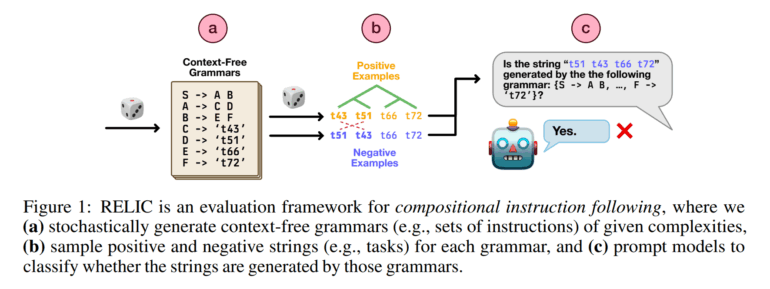

Researchers at New York University have introduced RELIC (Recognition of Languages In-Context), a new benchmark designed to test how well large language models can understand and follow complex, multi-step instructions. The team's findings echo those of a recent Apple paper, but the researchers say there is still room for improvement.

The RELIC test works by giving an AI a formal grammar – essentially a precise rule set that defines an artificial language – along with a string of symbols. The model must then decide whether the string is valid according to the grammar’s rules.

For example, a “sentence” (represented as S) is defined as “Part A” followed by “Part B” (S → A B). “Part A” itself consists of “Symbol C” and “Symbol D” (A → C D), and so on down to rules like “Symbol C becomes ‘t43’” (C → ‘t43’). The AI has to determine whether a string like “t43 t51 t66 t72” can be generated from these rules. Importantly, the model gets no examples of correct or incorrect strings and no prior training on the specific grammar: it has to apply the rules “zero-shot,” relying only on the description provided in context.
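To make the example concrete, here is a minimal sketch that spells out the derivation step by step. Only S → A B, A → C D and C → ‘t43’ come from the article; the rules for B, D, E and F are hypothetical, added so that the example string can be fully derived. This is an illustration, not RELIC's own code.

```python
# Toy grammar. Only S -> A B, A -> C D and C -> 't43' come from the article;
# the rules for B, D, E and F are hypothetical, chosen so the example string
# "t43 t51 t66 t72" can be derived.
GRAMMAR = {
    "S": [["A", "B"]],
    "A": [["C", "D"]],
    "B": [["E", "F"]],
    "C": [["t43"]],
    "D": [["t51"]],
    "E": [["t66"]],
    "F": [["t72"]],
}


def leftmost_derivation(start="S"):
    """Repeatedly expand the leftmost non-terminal until only terminals remain.

    Every non-terminal in this toy grammar has exactly one rule, so the
    derivation is unique and the loop always terminates.
    """
    form = [start]
    steps = [form]
    while any(sym in GRAMMAR for sym in form):
        i = next(i for i, sym in enumerate(form) if sym in GRAMMAR)
        form = form[:i] + GRAMMAR[form[i]][0] + form[i + 1:]
        steps.append(form)
    return steps


for step in leftmost_derivation():
    print(" ".join(step))
# S
# A B
# C D B
# t43 D B
# t43 t51 B
# t43 t51 E F
# t43 t51 t66 F
# t43 t51 t66 t72
```

The last line matches the test string, so under these assumed rules the answer would be “valid.” In RELIC the model gets only the rules and the string, and must reconstruct such a derivation on its own.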

To succeed, the model must recognize and correctly apply many rules in the right order, an order that is not fixed in advance, sometimes applying the same rule repeatedly and in nested combinations. According to the researchers, this is similar to checking whether a computer program is written correctly or a sentence is grammatically sound. There are two types of grammar rules: those that break down abstract placeholders (non-terminals like S, A, B) into other placeholders (e.g., S → A B), and those that replace a placeholder with a concrete symbol, called a terminal (e.g., C → ‘t43’). RELIC can automatically generate an unlimited number of test cases with varying difficulty, which prevents models from simply memorizing answers from known test data.
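The two rule shapes described above (placeholder → two placeholders, placeholder → one terminal) are what computer scientists call Chomsky normal form, and a textbook way to decide whether such a grammar generates a string is the CYK algorithm. The sketch below is a minimal CYK recognizer; it is not claimed to be how RELIC labels its examples, and the rules other than S → A B, A → C D and C → ‘t43’ are hypothetical.

```python
# Grammar in the two rule shapes described above (Chomsky normal form).
# Rules other than S -> A B, A -> C D and C -> 't43' are hypothetical.
BINARY_RULES = [("S", "A", "B"), ("A", "C", "D"), ("B", "E", "F")]  # X -> Y Z
LEXICAL_RULES = [("C", "t43"), ("D", "t51"), ("E", "t66"), ("F", "t72")]  # X -> 't'


def cyk_recognize(tokens, binary_rules, lexical_rules, start="S"):
    """Return True if `tokens` can be derived from `start` (CYK algorithm)."""
    n = len(tokens)
    if n == 0:
        return False  # a CNF grammar without S -> epsilon cannot derive ""
    # table[i][j] holds the non-terminals that derive tokens[i : i + j + 1]
    table = [[set() for _ in range(n)] for _ in range(n)]
    for i, tok in enumerate(tokens):
        table[i][0] = {lhs for lhs, term in lexical_rules if term == tok}
    for span in range(2, n + 1):           # length of the substring
        for i in range(n - span + 1):      # start position of the substring
            for split in range(1, span):   # length of the left part
                left = table[i][split - 1]
                right = table[i + split][span - split - 1]
                for lhs, y, z in binary_rules:
                    if y in left and z in right:
                        table[i][span - 1].add(lhs)
    return start in table[0][n - 1]


print(cyk_recognize("t43 t51 t66 t72".split(), BINARY_RULES, LEXICAL_RULES))  # True
print(cyk_recognize("t51 t43 t66 t72".split(), BINARY_RULES, LEXICAL_RULES))  # False
```

The second call uses the same symbols in a different order and is rejected, which is exactly the kind of order-sensitive, nested rule application the benchmark demands.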


RELIC reveals a familiar pattern

The team tested eight different AI models, including OpenAI’s GPT-4.1 and o3, Google’s Gemma models, and DeepSeek-R1. For the study, they created the RELIC-500 dataset, which includes 200 unique grammars, each with up to 500 production rules, and test strings up to 50 symbols long. Even the most complex grammars in RELIC-500, the researchers note, are much simpler than those found in real programming or human languages.

Models generally performed well on simple grammars and short strings. But as the grammatical complexity or string length increased, accuracy dropped sharply – even for models designed for logical reasoning, like OpenAI’s o3 or DeepSeek-R1. One key finding: while models often appear to “know” the right approach – such as fully parsing a string by tracing each rule application – they don’t consistently put this knowledge into practice.

For simple tasks, models typically applied rules correctly. But as complexity grew, they shifted to shortcut heuristics instead of building the correct “derivation tree.” For example, models would sometimes guess that a string was correct just because it was especially long, or look only for individual symbols that appeared somewhere in the grammar rules, regardless of order – an approach that doesn’t actually check if the string fits the grammar.
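The difference between the “symbols appear somewhere” shortcut and a real grammaticality check can be shown in a few lines. This is a minimal sketch on the same hypothetical toy grammar (only S → A B, A → C D and C → ‘t43’ come from the article); the heuristic function is an illustrative caricature of the shortcut described above, not code from the study.

```python
from functools import lru_cache

# Same toy grammar; rules beyond S -> A B, A -> C D, C -> 't43' are hypothetical.
BINARY = {"S": [("A", "B")], "A": [("C", "D")], "B": [("E", "F")]}
LEXICAL = {"C": {"t43"}, "D": {"t51"}, "E": {"t66"}, "F": {"t72"}}
TERMINALS = set().union(*LEXICAL.values())


def symbol_heuristic(tokens):
    """Shortcut: accept if every token appears somewhere in the rules (ignores order)."""
    return all(tok in TERMINALS for tok in tokens)


def derives(tokens):
    """Correct check: can S derive exactly this token sequence?"""
    tokens = tuple(tokens)

    @lru_cache(maxsize=None)
    def can(sym, i, j):  # does non-terminal `sym` derive tokens[i:j]?
        if j - i == 1 and tokens[i] in LEXICAL.get(sym, set()):
            return True
        return any(
            can(y, i, k) and can(z, k, j)
            for y, z in BINARY.get(sym, [])
            for k in range(i + 1, j)  # try every split point
        )

    return len(tokens) > 0 and can("S", 0, len(tokens))


good = "t43 t51 t66 t72".split()
shuffled = "t72 t66 t51 t43".split()
print(symbol_heuristic(shuffled), derives(shuffled))  # True False
print(symbol_heuristic(good), derives(good))          # True True
```

The shortcut says “valid” for both strings, while the derivation check rejects the reversed one, because no sequence of rule applications produces it.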

Study finds “underthinking” on hard tasks

To probe the models’ reasoning strategies, the researchers used another AI model, OpenAI’s o4-mini, as an “AI judge” to evaluate the solutions. Human reviewers checked a sample of these judgments and agreed with o4-mini about 70% of the time; the model was especially good at spotting shallow, shortcut solutions.

The analysis showed that on short, simple tasks, models tried to apply the rules step by step, building a logical “parse tree.” But when faced with longer or more complex examples, they defaulted to superficial heuristics.


A central problem identified by the study is the link between task complexity and the model’s “test-time compute”: the amount of computation a model spends while solving a problem, measured here by the number of intermediate reasoning steps. In theory, this workload should grow with input length. In practice, the researchers saw the opposite: for short strings (up to 6 symbols for GPT-4.1-mini, 12 for o3), models produced relatively many intermediate steps, but as the tasks grew longer and more complex, the number of steps dropped.

In other words, models cut their reasoning short before they have a real chance to analyze the string's structure. This “underthinking” on harder tasks closely matches what Apple researchers recently observed in large reasoning models (LRMs), whose reasoning activity actually decreased as problem difficulty increased.
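Why should the workload grow with input length? One way to see it, assuming a model were to follow a CYK-style parse (an illustrative assumption, not a claim about how the researchers measured compute), is to count the split points such a parser must examine. The count grows roughly with the cube of the string length, before even multiplying by the number of rules.

```python
def cyk_split_checks(n):
    """Count the (span, start, split) triples a CYK parser visits for n tokens."""
    return sum(
        1
        for span in range(2, n + 1)        # substring length
        for start in range(n - span + 1)   # substring start
        for split in range(1, span)        # where to divide it in two
    )


for n in (6, 12, 50):
    # Closed form: n(n-1)(n+1)/6, i.e. growth on the order of n**3
    print(n, cyk_split_checks(n), n * (n - 1) * (n + 1) // 6)
# 6 35 35
# 12 286 286
# 50 20825 20825
```

Going from 6 to 50 symbols multiplies the number of split points by roughly 600, which is why shrinking reasoning traces on longer strings suggest the models are skipping work rather than becoming more efficient.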

Language models need more compute or smarter approaches

Both studies show that while today’s reasoning models can handle simple problems, they fundamentally break down on complex tasks – and paradoxically, “think” less, not more, as difficulty rises. RELIC goes beyond the game-like scenarios in the Apple study by testing a skill that matters in real-world AI: learning and applying new languages or rule systems using only the information provided in context.

RELIC also directly tests a more challenging form of contextual understanding than benchmarks like “needle-in-a-haystack” tests, where the goal is just to retrieve a single fact from a long passage. In RELIC, relevant rules are scattered throughout the text and must be combined in complex ways.

The researchers’ theoretical analysis suggests that future language models will need either much more computational power – e.g. more reasoning tokens at inference time – or fundamentally more efficient solution strategies to handle these problems. The ability to understand and carry out complex instructions, they argue, is essential for truly intelligent AI.

“If the current models are not able to do that, we need stronger models. It doesn’t mean LLMs don’t reason, or LRMs don’t reason, or deep learning is broken. Just that the ability of these models to ‘reason’ is currently limited, and we should try to improve it,” co-author Tal Linzen said.