Mistral’s new code model features a 32K context window for unmatched long code support

French AI company Mistral has launched Codestral, a new coding model that delivers high coding performance with less computational overhead than existing models.

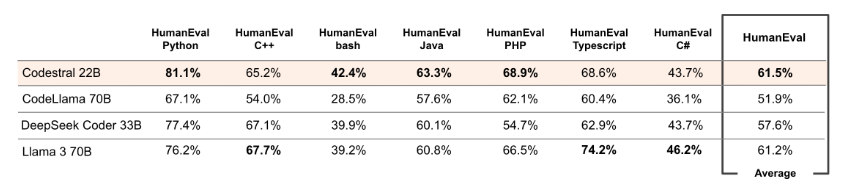

According to Mistral, Codestral handles more than 80 programming languages, including common ones such as Python, Java, C, C++, JavaScript, and Bash, as well as more specialized ones such as Swift and Fortran. Features include code completion, test writing, and filling in incomplete code with a fill-in-the-middle mechanism.
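
To illustrate what fill-in-the-middle means in practice, here is a minimal sketch in Python. It splits a source file at the cursor into the code before and after the gap, packages both halves as a request body, and splices a returned completion back in. The helper names, the `codestral-latest` model name, and the `prompt`/`suffix`/`max_tokens` field names are assumptions made for illustration; they do not come from this article, so check Mistral's API documentation before relying on them.

```python
def split_at_cursor(source: str, cursor: int) -> tuple[str, str]:
    """Return the text before and after the cursor position."""
    if not 0 <= cursor <= len(source):
        raise ValueError("cursor is outside the source text")
    return source[:cursor], source[cursor:]


def build_fim_payload(source: str, cursor: int,
                      model: str = "codestral-latest",
                      max_tokens: int = 64) -> dict:
    """Build a fill-in-the-middle request body (field names are assumed)."""
    prefix, suffix = split_at_cursor(source, cursor)
    return {
        "model": model,            # assumed model identifier
        "prompt": prefix,          # code before the gap
        "suffix": suffix,          # code after the gap
        "max_tokens": max_tokens,  # cap on the generated infill
    }


def splice_completion(source: str, cursor: int, completion: str) -> str:
    """Insert the model's infill between the prefix and the suffix."""
    prefix, suffix = split_at_cursor(source, cursor)
    return prefix + completion + suffix
```

The point of sending both halves is that the model can condition on the code that follows the gap as well as the code that precedes it, which plain left-to-right completion cannot do.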

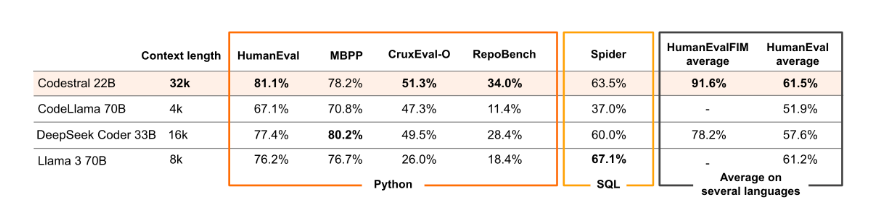

As a 22-billion-parameter model, Codestral sets a new standard for the performance/latency ratio of code generation compared to existing models, Mistral claims. With a context window of 32,000 tokens, larger than that of the models it was compared against, Codestral outperforms all of them on RepoBench, a benchmark for long-range, repository-level code completion, according to the company.

Mistral compared Codestral on several benchmarks for Python, SQL, and other languages against competing models with higher hardware requirements. By Mistral's account, Codestral consistently performed better, for example at completing code across long spans of a repository and at predicting the output of Python programs.

Codestral is released as an open-weight model under the new Mistral AI Non-Production License, which permits use for research and testing purposes. The weights can be downloaded from Hugging Face.

Mistral also provides two API endpoints for Codestral. The first, codestral.mistral.ai, is intended for IDE integrations in which developers bring their own API keys. The second, api.mistral.ai, is intended for research, batch queries, and applications that show results directly to end users. The former is free for eight weeks, while the latter is charged per token.
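
The choice between the two endpoints can be sketched as a small Python helper that prepares, but does not send, an HTTP request. The two hostnames are the ones named above; the `/v1/fim/completions` path, the bearer-token header, and the `build_request` helper itself are assumptions made for illustration and should be checked against Mistral's API documentation.

```python
import json
import urllib.request

# Hostnames are taken from the article; the URL path is an assumption.
ENDPOINTS = {
    "ide": "https://codestral.mistral.ai/v1/fim/completions",  # IDE plugins, own API key
    "app": "https://api.mistral.ai/v1/fim/completions",        # research, batch, applications
}


def build_request(use_case: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Prepare a POST request for the endpoint matching the use case."""
    if use_case not in ENDPOINTS:
        raise ValueError(f"unknown use case: {use_case!r}")
    return urllib.request.Request(
        ENDPOINTS[use_case],
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send the request. Keeping construction separate from sending makes it easy to inspect which host a given workload will hit, and therefore which pricing applies, before any tokens are consumed.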

Early feedback from some developers and researchers is consistent with Mistral’s claims: despite its relatively small size, Codestral delivers results similar to those of larger models.